The AB Testing JavaScript that powers tests is powerful, but can lead to many unintended consequences.

Conversion Sciences offers our clients a pretty amazing ability: a completely “turnkey” conversion optimization testing service. By “turnkey” we mean that our clients don’t need to do anything to their website in order for us to analyze the site, design creative, develop code, QA, test, review and route traffic to winners.

Why is this? Don’t we need new pages designed? Interactions with IT? Release schedules? Sprints?

The reason we have this “phenomenal cosmic power” is that our AB testing tools take control of each visitor’s browser. The changes are made on the visitors’ devices, so the website doesn’t have to be modified.

Well, not until we’ve verified that a change brings in big money.

While this makes us feel all high and mighty, it comes with a big drawback.

The Magic and Mania of JavaScript

All of the major browsers have a scripting engine built into them. It allows programs to be run inside the browser. The programming language used is called JavaScript. It’s JavaScript that makes websites interactive. Website developers use JavaScript to make text expand like an accordion when you click a heading. It is used to rotate images in a carousel.

Unfortunately, developers use JavaScript to do silly things, like parallax animations.

This unnecessary “parallax” motion may be reducing the conversion rates for this site.

And then there’s this.

Don’t use JavaScript animations just because you can.

Yes, JavaScript gives us the power to make our websites harder to use.

JavaScript is Used in AB Testing Software

Our developers use JavaScript to modify a website when you visit it. The AB testing software “injects” our JavaScript into the browser when the page is loaded.

First the web page is loaded as is from the webserver. Some visitors will see only this default version of the website.

For some visitors, our JavaScript is then injected and executed. The AB Testing software determines who sees the default web page and who will see a variation of the page.
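To make the “who sees what” decision concrete, here is a minimal sketch of one way a tool could split visitors. It is not how any particular AB testing product works. It assumes each visitor has a stable ID (say, from a cookie), and the names `hashString` and `assignVariation` are made up for this example.

```javascript
// Hypothetical sketch: deterministic bucketing of visitors into variations.
// Real AB testing tools use their own methods; this only shows the idea.

// FNV-1a-style 32-bit string hash; returns a non-negative integer.
function hashString(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0;
}

// Map a visitor to one of the variations. The same visitorId and testName
// always give the same answer, so a returning visitor sees the same page.
function assignVariation(visitorId, testName, variations) {
  const bucket = hashString(testName + ":" + visitorId) % variations.length;
  return variations[bucket];
}

// Example: split traffic between the default page and one variation.
// assignVariation("visitor-123", "headline-test", ["default", "variation"]);
```

Hashing the visitor ID, rather than rolling the dice on every page view, keeps a visitor from bouncing between the default and the variation during a test.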

Phenomenal Cosmic AB Testing Power

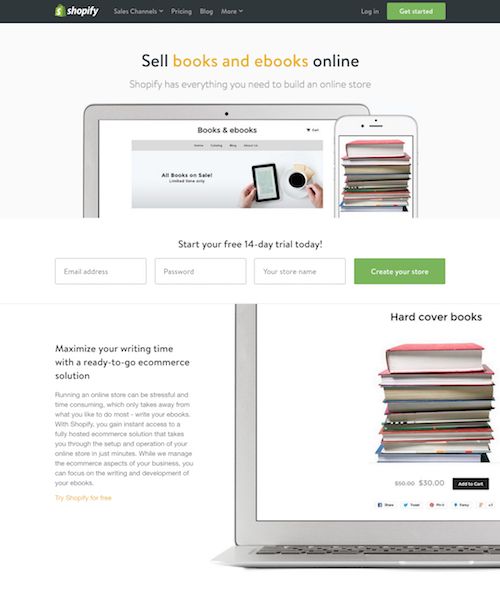

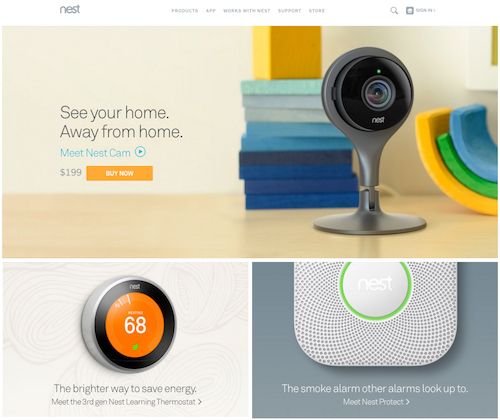

We can change almost anything about a page using AB Testing JavaScript.

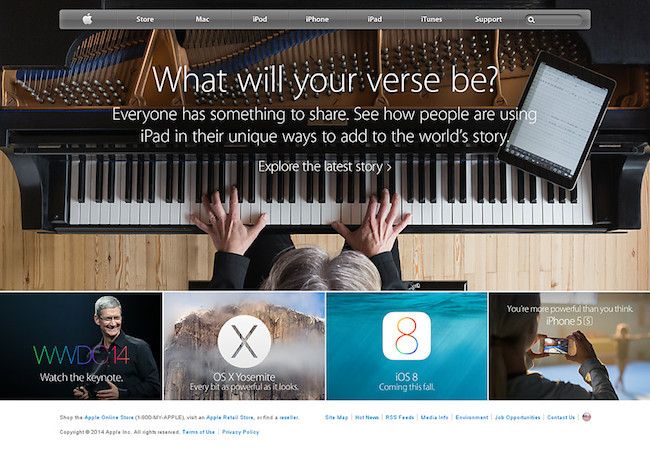

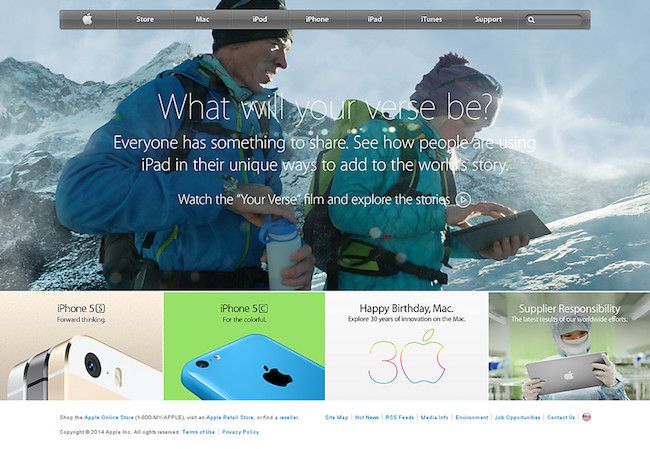

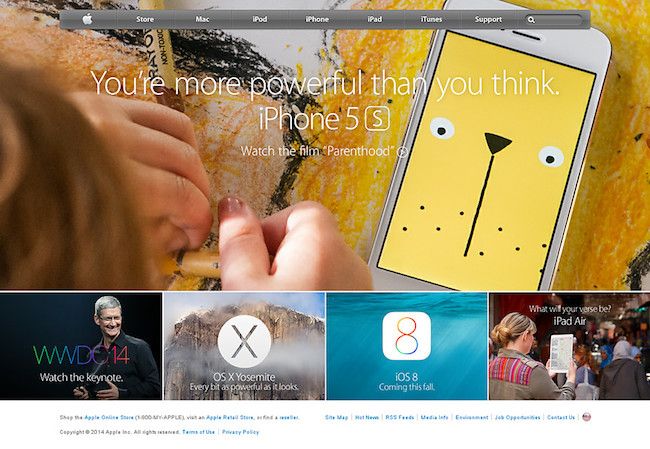

We change the headline to something different.

We change the order of a rotating carousel, or slider.

We hide elements…

and insert them as well.

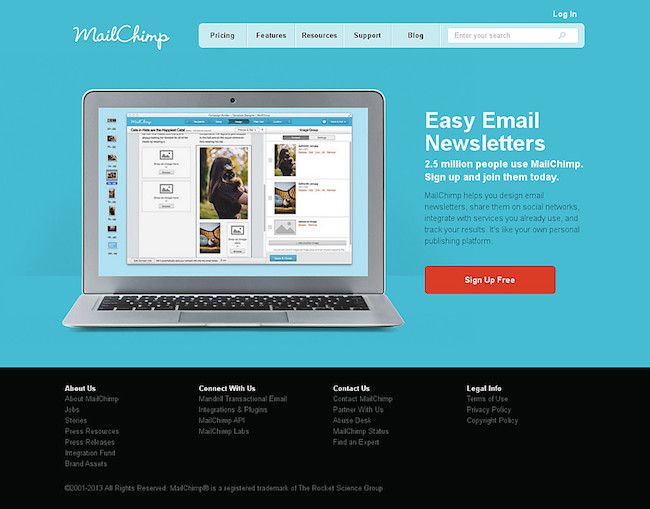

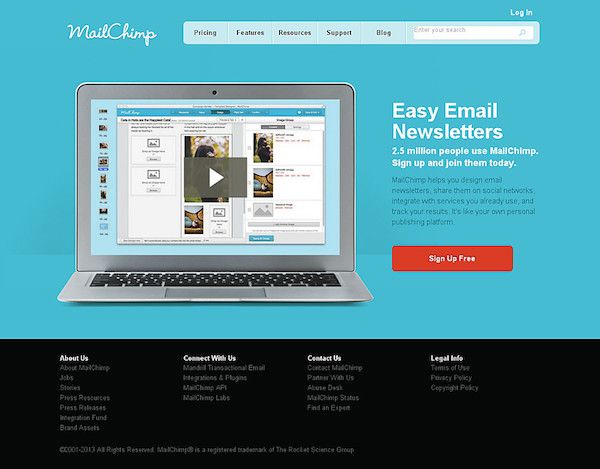

We insert video.

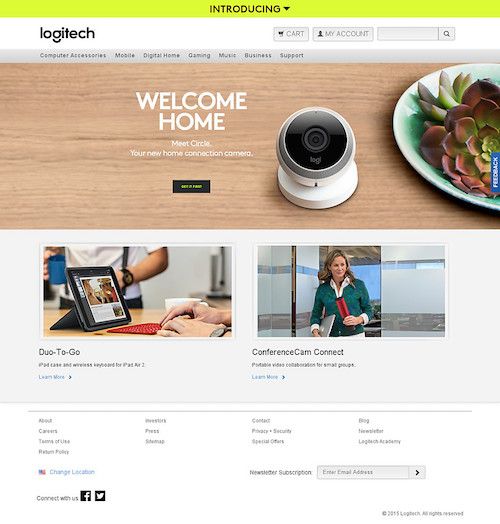

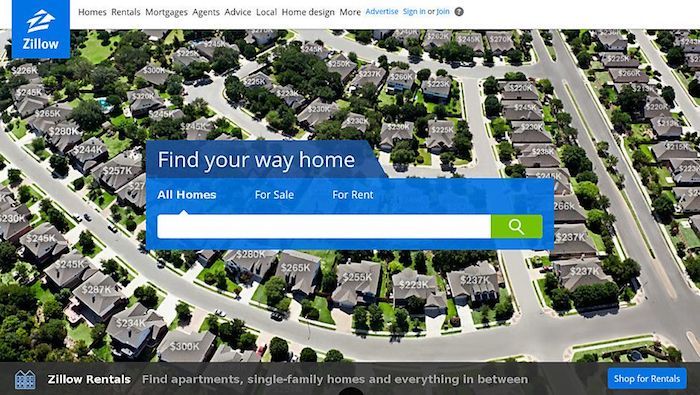

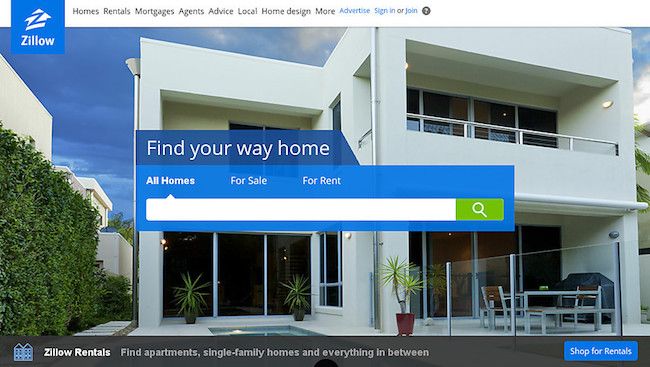

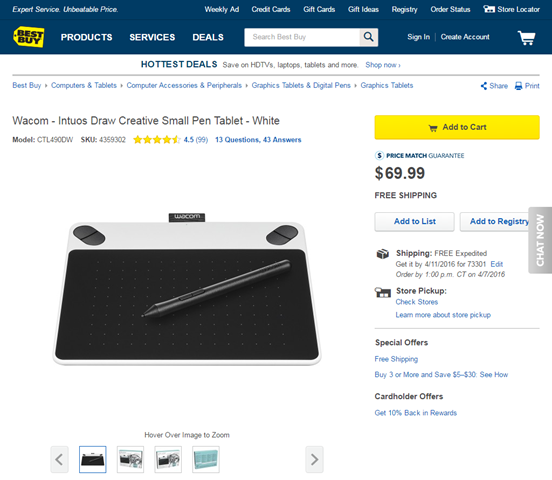

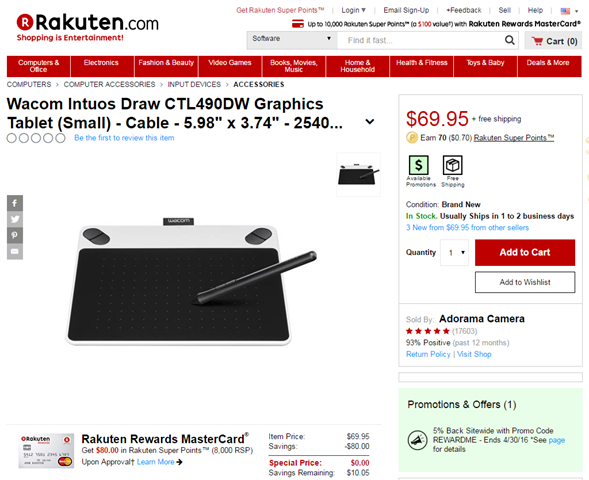

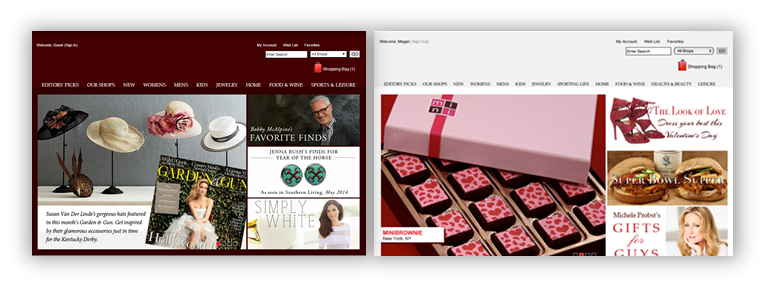

We completely change the look of a website.

We completely change the look and feel of this site. The test showed the white site performed the same as the brown site.

AB Testing JavaScript is a Step Toward Personalization

If we wanted, we could deliver a different website to every visitor who arrives. If you’re thinking “personalization” at this point, then you have an idea of where our industry is heading.

AB testing produces the data that makes personalization truly work.

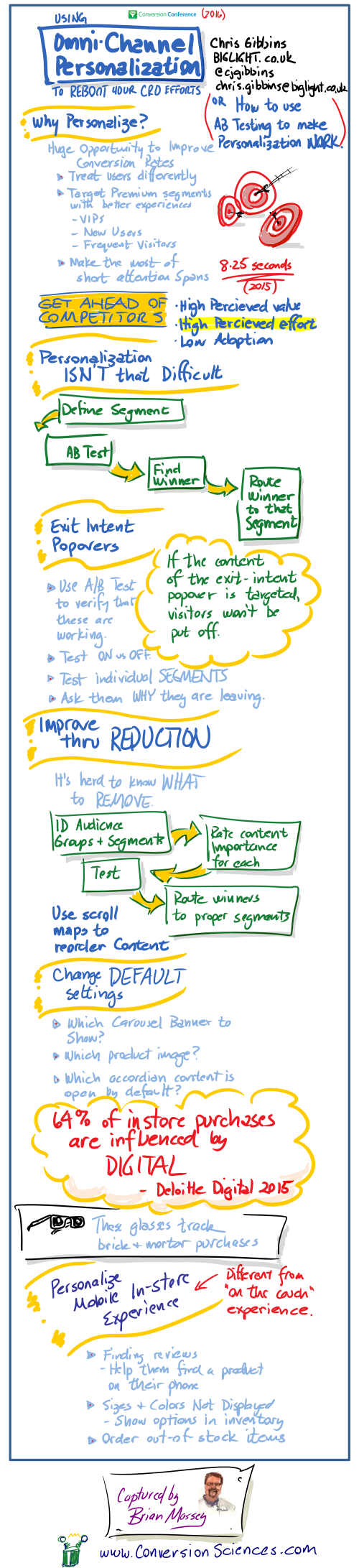

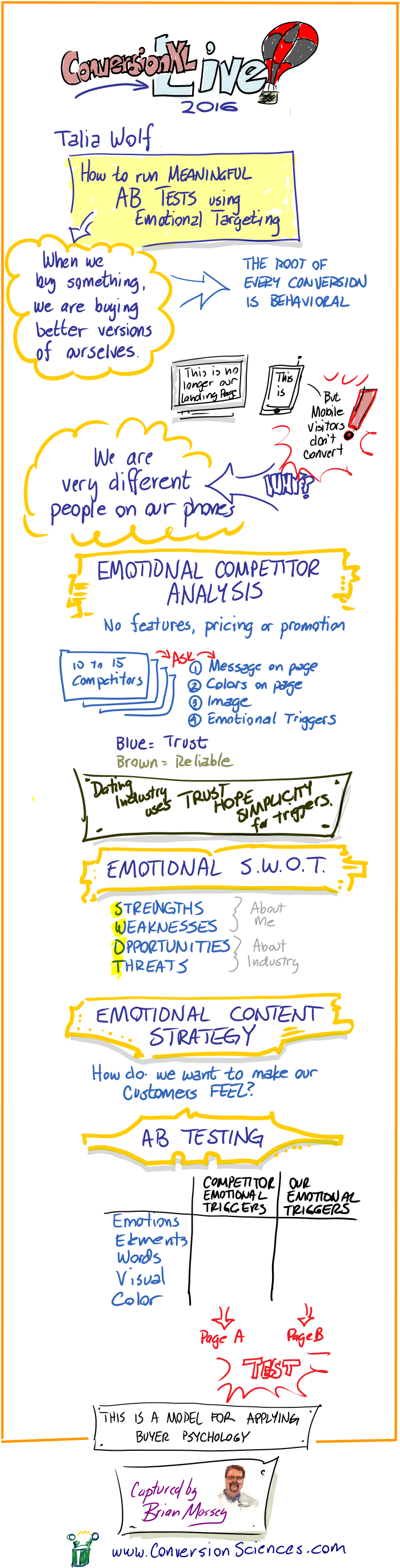

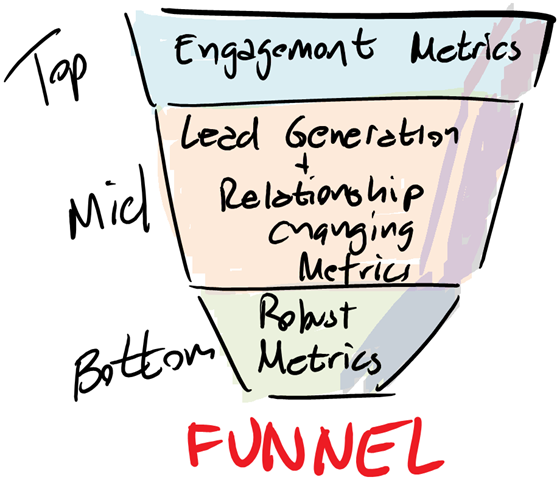

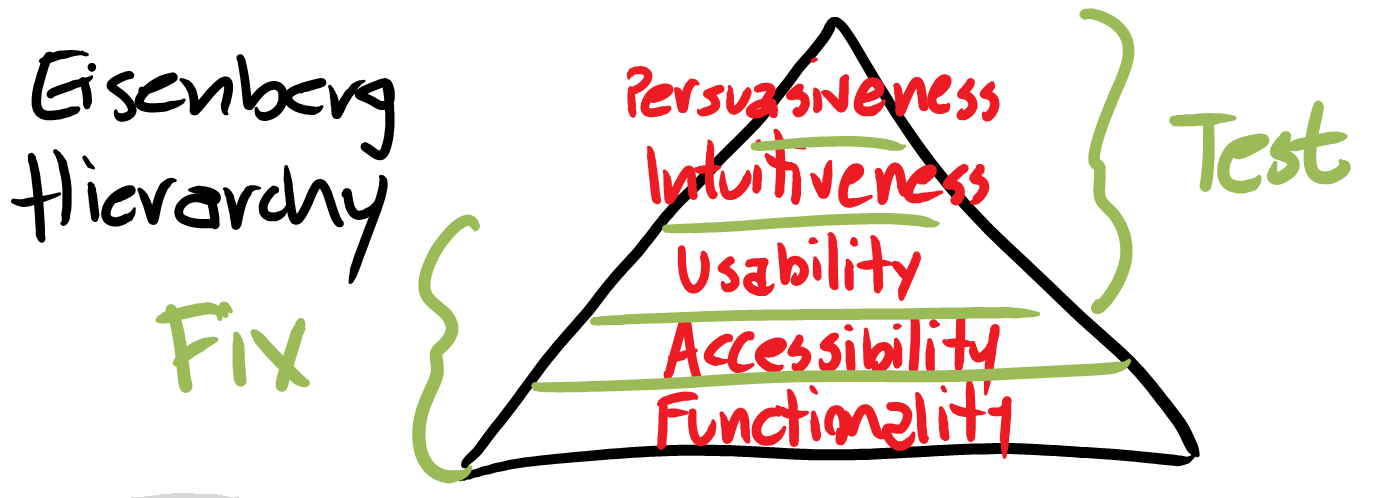

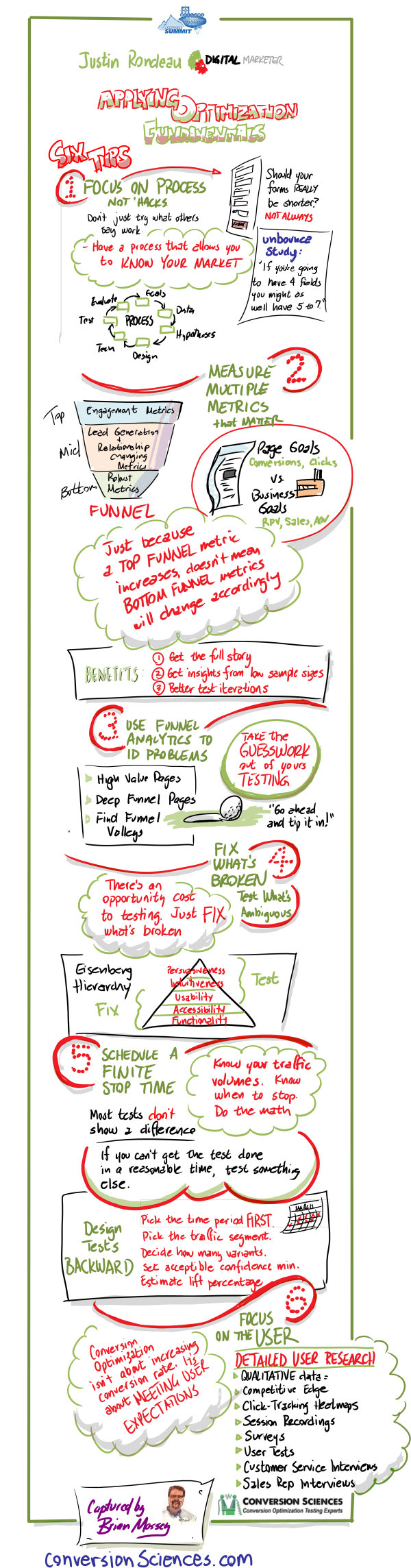

Here are my instagraphic notes from a presentation by Chris Gibbins at Conversion Conference 2016 on using AB testing and personalization.

AB Testing Flicker, Flash, Blink and Ghosting

Unfortunately, JavaScript can introduce an error into our AB tests. Because we always load the website “as it is” on the server first, there is a possibility that the visitor will see the original for a fraction of a second before our changes get executed.

This has been called a “flash”, a “flicker”, and a “blink”. It can have a significant effect on test results.

With AB Testing JavaScript, Flash is not cool.

The problem with this AB testing JavaScript flicker is that it won’t be there if a change is made permanent on the site. Our tests may say the new change generated more revenue, and we’ll change the website to make that change manifest. But there will be no flicker. This means there is another variable in our test.

Was it the new headline we tested or was it the flicker that made more people buy?

The Human Eye is Drawn to Motion

Our eyes and brains have evolved to make motion important. When something moves, our eyes and brains work hard to figure out if the thing moving is edible, if it will eat us, or if we can mate with it. Technology has moved faster than evolution. Even though we’re looking at a website, where there is no immediate source of food, fear or fornication, our lizard brains continue to favor motion.

Imagine this scenario. We are testing the text on a button. That means that for some of our visitors, the button will change. Others will see the default button text. Then we’ll see who buys the most.

If there is a flicker, flash or blink when the text changes, the button will be immediately more visible to those visitors who see it. More of them will see the call to action. This may make more of them consider buying than those who simply scrolled past. If this new treatment generates more revenue, we are left with the question, “Was it the text or was it the motion that created the lift in sales?”

We won’t know until we push the new text onto the server and do a post-rollout analysis to see the real lift. At this point, we may find that the text didn’t increase purchases. It’s a real bitch.

How many buttons have been changed because of flicker?

AB Testing Software Tries to Eliminate Blinking

The AB testing software that we use works to eliminate this blinking and flashing. The issue is so important that Convert Insights has patented their method for eliminating “blink”.

AB testing software like Optimizely, Visual Website Optimizer, Adobe Target, and Marketizator can load asynchronously, meaning that the tool’s script downloads while the rest of the page loads instead of holding the page up. The tools then work to apply our changes as early as possible, ideally before the visitor sees the affected part of the page.

Eliminating Flash Flicker and Blink in AB Tests

In addition to this, our developers can do things that reduce and eliminate flicker and blink. Every test you do has different issues, and so a variety of tactics can be used to address them.

Avoid Google Tag Manager

Don’t use a Tag Manager like Google Tag Manager to serve your AB testing JavaScript software tags. Add them to the page manually. Tag managers can counteract the asynchronous loading of the tool and delay when changes can be made.

Make Changes with CSS

If the change can be made with cascading style sheets (CSS), we favor CSS over JavaScript. A CSS rule can change how an element looks, such as an image’s size or a font, and the browser applies the rule before the element is displayed.
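Here is a minimal sketch of the CSS-first approach. The helper names `buildCssRule` and `injectCss`, the `.hero h1` selector and the style values are all assumptions for illustration. Your AB testing tool may offer its own place to put variation CSS.

```javascript
// Hypothetical sketch: apply a variation with a CSS rule instead of
// editing the element itself. Selector and values are made-up examples.

// Build a CSS rule string from a selector and a map of declarations.
// "!important" is added so the rule wins over the site's own styles.
function buildCssRule(selector, declarations) {
  const body = Object.entries(declarations)
    .map(([property, value]) => `${property}: ${value} !important;`)
    .join(" ");
  return `${selector} { ${body} }`;
}

// Add the rule to the page in a <style> tag. Because the rule lives in a
// stylesheet, the browser applies it to matching elements as they render,
// including elements that have not been parsed yet.
function injectCss(doc, cssText) {
  const style = doc.createElement("style");
  style.textContent = cssText;
  (doc.head || doc.documentElement).appendChild(style);
  return style;
}

// In a browser:
// injectCss(document, buildCssRule(".hero h1", { "font-size": "48px" }));
```

The rule does not need the headline to exist when it is added, which is why this kind of change tends not to flicker.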

Modal Dialogs and Overlays

Modal dialogs usually don’t display until the visitor takes an action. They can be edited before they are shown without flashing.

Use a Timer for DOM Changes

All of the images, headings, copy, widgets, and forms on a page are stored in the browser in a tree-like structure called the DOM (Document Object Model). When JavaScript makes changes to the DOM, the content on the page changes. On a big page, the DOM can be slow to finish loading, as you can imagine.

Our developers will set a timer in JavaScript to check for when the element we want to change has loaded. By watching for our element, we can execute a change to it before the rest of the DOM, and the page, has finished loading.
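The timer idea can be sketched roughly like this. It is an illustration under our own assumptions, not the code from any specific test: the name `whenElementExists`, the 50 millisecond interval, the 5 second cutoff and the `#buy-button` selector are all hypothetical.

```javascript
// Hypothetical sketch: poll for an element and change it as soon as it
// exists, instead of waiting for the whole page to finish loading.
// findElement is any function that returns the element or null, e.g.
// () => document.querySelector("#buy-button") in a browser.
function whenElementExists(findElement, applyChange, options = {}) {
  const {
    intervalMs = 50,       // how often to check
    timeoutMs = 5000,      // give up after roughly this long
    schedule = setTimeout, // replaceable, which makes testing easier
  } = options;
  let waited = 0;

  function check() {
    const element = findElement();
    if (element) {
      applyChange(element); // found it: make the change right away
      return;
    }
    waited += intervalMs;
    if (waited < timeoutMs) {
      schedule(check, intervalMs); // not there yet: look again shortly
    }
  }

  check();
}

// In a browser:
// whenElementExists(
//   () => document.querySelector("#buy-button"),
//   (button) => { button.textContent = "Get Started"; }
// );
```

The cutoff matters. Without it, the timer would keep running forever on pages where the element never shows up. Browsers also provide `MutationObserver`, which can watch for new elements without polling.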

Force the AB Testing Software to Load Our Changes Immediately

The AB testing software provides a synchronous loading mode. Optimizely and VWO use different approaches for this.

Rethink the Test

Sometimes, we have to go back to the drawing board and design a test that doesn’t cause flash-inducing changes. We will refactor a test to eliminate items that cause a flash or flicker.

Delay Display of the Content

We can delay the display of the content until the DOM is loaded and all elements have been changed. This causes a different kind of issue, however. The visitor sees a blank page or blank section for longer if they get the page with the change.

In one case we added a spinning wheel to indicate that the page is loading.
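A minimal sketch of delaying display might look like the following. The function name `hideUntilReady` and the 2 second safety limit are our own assumptions for the example. Some AB testing tools ship their own hiding snippet, which would replace this.

```javascript
// Hypothetical sketch: hide an element until the variation is applied,
// with a safety timeout so visitors never get stuck on a blank page.
function hideUntilReady(element, maxWaitMs = 2000, schedule = setTimeout) {
  const previousVisibility = element.style.visibility;
  let revealed = false;

  function reveal() {
    if (revealed) return; // only restore once
    revealed = true;
    element.style.visibility = previousVisibility;
  }

  element.style.visibility = "hidden"; // keeps the layout, hides the pixels
  schedule(reveal, maxWaitMs);         // failsafe: show the page regardless
  return reveal;                       // call this once the changes are made
}

// In a browser:
// const reveal = hideUntilReady(document.documentElement);
// ...apply the variation's changes...
// reveal();
```

The shorter the safety limit, the less time anyone stares at a blank page, but the more likely a slow connection shows the original before the change lands.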

Insert Placeholders Using Fast CSS

When inserting elements, we’ll use CSS to insert a placeholder, then use JavaScript to modify the element after the page has loaded. This reduces redrawing of the page when elements are inserted.

We created a blank box in CSS to minimize AB Testing Flash on this mobile website.

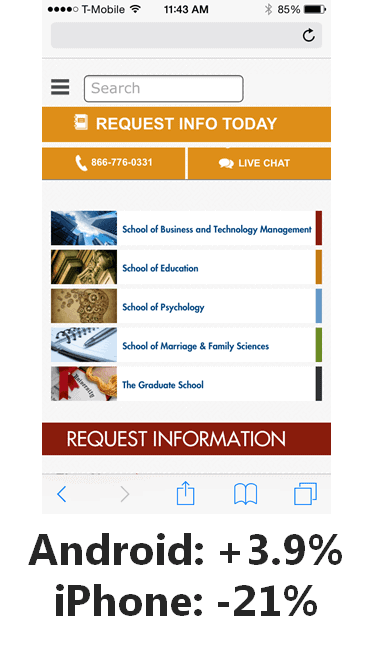
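The placeholder tactic can be sketched along these lines. This is one possible way to do it, not necessarily how the blank box on the mobile site above was built. The names `placeholderCss` and `fillPlaceholder`, the `ab-filled` class and the example selector are hypothetical.

```javascript
// Hypothetical sketch: reserve space with CSS first, then fill it in
// with JavaScript once the page has loaded.

// CSS that draws an empty box at the end of an existing element, so the
// layout already has room for the content we will insert later. The
// second rule removes the box once the "ab-filled" class is added.
function placeholderCss(anchorSelector, heightPx) {
  return (
    `${anchorSelector}::after { content: ""; display: block; height: ${heightPx}px; } ` +
    `${anchorSelector}.ab-filled::after { display: none; }`
  );
}

// Insert the real element right after the anchor and switch off the
// placeholder, so the page does not jump when the content arrives.
function fillPlaceholder(anchorElement, newElement) {
  anchorElement.insertAdjacentElement("afterend", newElement);
  anchorElement.classList.add("ab-filled");
}

// In a browser, after adding placeholderCss(".product-summary", 120)
// to the page in a <style> tag:
// const banner = document.createElement("div");
// banner.textContent = "Free shipping on all orders";
// fillPlaceholder(document.querySelector(".product-summary"), banner);
```

The reserved height should match the height of the inserted element as closely as possible. Otherwise the page will still shift a little when the swap happens.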

Optimizing for Mobile

Mobile pages load more slowly. This is because visitors don’t always have a high-quality connection when visiting from the festival, or from inside the walls of a bank while standing in line. For mobile devices, flash can be an even bigger issue.

Fortunately, the tactics we use on the desktop also work on mobile websites. But don’t forget to QA your test treatments on 3G and 4G connections. You may find flicker and blink on mobile devices that didn’t appear on your desktop.

Great JavaScript Developers are a Must

We spend a considerable amount of our time making sure our JavaScript and CSS changes look the way the “native” implementation would. It’s one of the things that makes testing hard. Our team has the experience to ensure your tests aren’t falling victim to flicker, flash, blink or ghosting. Best-in-class developers are one of the things that help to make us the most effective Conversion Optimization Agency on the planet!

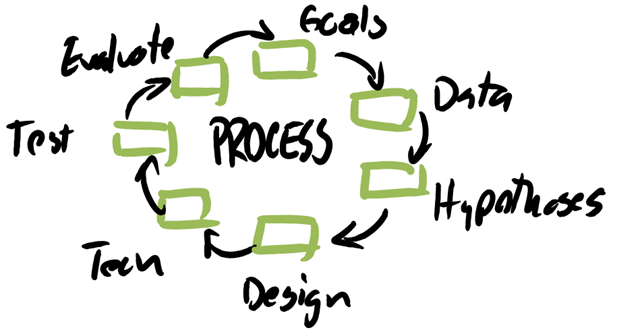

If you’d like our team to take over the effort of developing your AB tests, contact us for a free consultation and an overview of our process.