AB Testing Results are Half-Filled with Losers, and That’s a Good Thing

The law of unintended consequences states that every human endeavor will generate some result that was not, and could not have been, foreseen. The law applies to hypothesis testing as well.

In fact, Brian Cugelman introduced me to an entire spectrum of outcomes that is helpful when evaluating AB testing results. Brian was talking about unleashing chemicals in the brain, and I’m applying his model to AB testing results. See my complete notes on his Conversion XL Live presentation below.

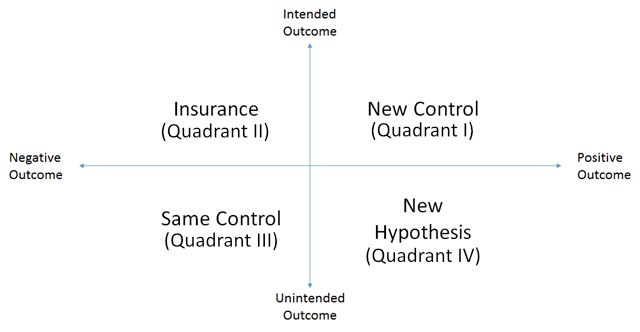

Understanding the AB Testing Results Map

In any test we conduct, we are trying everything we can to drive to a desired outcome. Unfortunately, we don’t always achieve the outcomes we want or intend. For any test, our results fall along two spectrums that together define four general quadrants.

On one axis we ask, “Was the outcome as we intended, or was there an unintended result?” On the other axis we ask, “Was it a negative or positive outcome?”

While most of our testing seeks to achieve the quadrant defined by positive, intended outcomes, each of these quadrants gives us an opportunity to move our conversion optimization program a step forward.

I. Pop the Champagne, We’ve Got a New Control

With every test, we seek to “beat” the existing control, the page or experience that is currently performing the best of all treatments we’ve tried. When our intended outcome is a positive outcome, everyone is all smiles and dancing. It’s the most fun we have in this job.

In general, we want our test outcomes to fall into this quadrant (quadrant I), but not exclusively. There is much to be learned from the other three quadrants.

II. Testing to Lose

Under what circumstances would we actually run an AB test intending to see a negative outcome? That is the question of Quadrant II. A great example of this is adding “Captcha” to a form.

CAPTCHA is an acronym for “Completely Automated Public Turing test to tell Computers and Humans Apart”. We believe it should be called, “Get Our Prospects to Do Our Spam Management For Us”, or “GOPDOSMFU”. Businesses don’t like to get spam. It clogs their prospect inboxes, wastes the time of salespeople and clouds their analytics.

However, we don’t believe that the answer is to make our potential customers take an IQ test before submitting a form.

These tools inevitably reduce form completion rates, and not just for spam bots.

So, if a business wants to add Captcha to a form, we recommend understanding the hidden costs of doing so. We’ll design a test with and without the Captcha, fully expecting a negative outcome. The goal is to understand how big the negative impact is. Usually, it’s too big.

In other situations, a brand-oriented design feature may be proposed. Often a design decision that enhances the company brand will have a negative impact on conversion and revenue. Nonetheless, we will test to see how big the negative impact is. If it’s reasonable, then the loss of revenue is seen as a marketing expense. In other words, we expect long-term revenue from a stronger brand message to offset the loss of short-term revenue.

These tests are like insurance policies. We do them to understand the cost of decisions that fall outside of our narrow focus on website results. The question is not, “Is the outcome negative?” The question is, “How negative is the outcome?”
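One way to put a number on “how negative” is to compare conversion rates with and without the feature and attach an interval to the difference. The sketch below is a minimal illustration, not our production analysis: it assumes a simple two-arm test, uses a normal-approximation 95% interval, and the function name `conversion_delta` and the visitor counts are made up for the example.

```python
import math


def conversion_delta(control_visitors, control_conversions,
                     variant_visitors, variant_conversions, z=1.96):
    """Estimate how much the variant changes conversion rate versus control.

    Returns the absolute and relative change plus an approximate
    confidence interval (normal approximation; z=1.96 is roughly 95%).
    """
    p_control = control_conversions / control_visitors
    p_variant = variant_conversions / variant_visitors
    diff = p_variant - p_control

    # Standard error of the difference between two independent proportions.
    se = math.sqrt(
        p_control * (1 - p_control) / control_visitors
        + p_variant * (1 - p_variant) / variant_visitors
    )
    return {
        "control_rate": p_control,
        "variant_rate": p_variant,
        "absolute_change": diff,
        "relative_change": diff / p_control,
        "ci_low": diff - z * se,
        "ci_high": diff + z * se,
    }


# Hypothetical numbers: a form without Captcha (control) and with it (variant).
result = conversion_delta(10_000, 500, 10_000, 400)
print(f"Relative change in form completions: {result['relative_change']:.1%}")
print(f"Interval on the absolute change: "
      f"{result['ci_low']:.4f} to {result['ci_high']:.4f}")
```

If the whole interval sits below zero, the feature is costing conversions, and the relative change gives a rough figure to weigh against whatever the feature is supposed to buy.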

III. Losers Rule Statistically

Linus Pauling once said, “You can’t have good ideas without having lots of ideas.” What is implied in this statement is that most ideas are crap. Just because we call them test hypotheses doesn’t mean that they are any more valuable than rolls of the dice.

When we start a conversion optimization process, we generate a lot of ideas. Since we’re brilliant, experienced, and wear lab coats, we brag that only half of these ideas will be losers for any client. Fully half won’t increase the performance of the site, and many will make things worse.

Most of these fall into the quadrant of unintended negative outcomes. The control has won. Record what we learned and move on.

There is a lot to be learned from failed tests. Note that we call them “inconclusive” tests as this sounds better than “failed”.

If the losing treatment reduced conversion and revenue, then you learn something about what your visitors don’t like.

Just as with a successful test, you must ask the question, “Why?”

Why didn’t they like our new background video? Was it offensive? Did it load too slowly? Did it distract them from our message?

Take a moment and ask, “Why,” even when your control wins.

IV. That Wasn’t Expected, But We’ll Take the Credit

Automatic.com was seeking a very specific outcome when we designed a new home page for them: more sales of the adapter and app that connects a smartphone to the car’s electronic brain. The redesign did achieve that goal. However, there was another unintended result. There was an increase in the number of people buying multiple adapters rather than just one.

We weren’t testing to increase average order value in this case. It happened nonetheless. We might have missed it if we didn’t instinctively calculate average order value when looking at the data. Other unintended consequences may be harder to find.
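Calculating these secondary metrics for every test makes surprises like this easier to spot. Here is a minimal sketch, assuming order data can be exported as one record per order with the test arm, units bought, and revenue. The function name `order_metrics`, the record layout, and the prices are all hypothetical.

```python
from collections import defaultdict


def order_metrics(orders):
    """Summarize orders per test arm.

    `orders` is an iterable of (arm, units, revenue) tuples,
    one tuple per completed order.
    """
    totals = defaultdict(
        lambda: {"orders": 0, "units": 0, "revenue": 0.0, "multi": 0}
    )
    for arm, units, revenue in orders:
        t = totals[arm]
        t["orders"] += 1
        t["units"] += units
        t["revenue"] += revenue
        if units > 1:
            t["multi"] += 1  # order contained more than one unit

    return {
        arm: {
            "average_order_value": t["revenue"] / t["orders"],
            "units_per_order": t["units"] / t["orders"],
            "multi_unit_share": t["multi"] / t["orders"],
        }
        for arm, t in totals.items()
    }


# Made-up orders at a made-up price of 100.0 per unit.
sample_orders = [
    ("control", 1, 100.0), ("control", 1, 100.0), ("control", 2, 200.0),
    ("variant", 1, 100.0), ("variant", 2, 200.0),
    ("variant", 3, 300.0), ("variant", 2, 200.0),
]
metrics = order_metrics(sample_orders)
for arm, values in metrics.items():
    print(arm, values)
```

A jump in `multi_unit_share` alongside a higher `average_order_value` is the kind of unintended positive described above, and it only shows up if the numbers are computed.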

This outcome usually spawns new hypotheses. What was it about our new home page design that made more buyers decide to get an adapter for all of their cars? Did we discover a new segment, the segment of visitors that have more than one car?

These questions all beg for more research and quite possibly more testing.

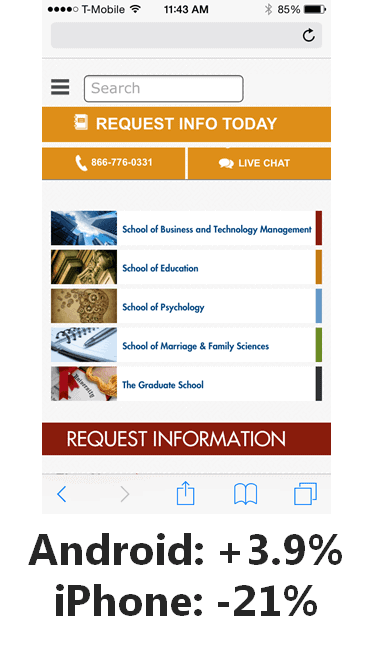

When Outcomes are Mixed

There is rarely one answer for any test we perform. Because we have to create statistically valid sample sizes, we throw together some very different groups of visitors. For example, we regularly see a difference in conversion rates between visitors using the Safari browser and those using Firefox. On mobile, we see different results when we look only at visitors on Android devices than when we look at those using Apple’s iOS.

In short, you need to spend some time looking at your test results to ensure that you don’t have offsetting outcomes.
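A quick way to check for offsetting outcomes is to compute the change in conversion rate per segment and compare it with the overall figure. The sketch below assumes visitor and conversion counts are already tallied per segment and arm. The function names, the dictionary layout, and the counts are invented for illustration, and small segments will be noisy, so treat a flagged segment as a prompt for a follow-up test rather than a conclusion.

```python
def rate(conversions, visitors):
    """Conversion rate, guarding against an empty segment."""
    return conversions / visitors if visitors else 0.0


def segment_report(counts):
    """Change in conversion rate (variant minus control) per segment.

    `counts` maps segment -> {"control": (visitors, conversions),
                              "variant": (visitors, conversions)}.
    The overall change across all segments is stored under "ALL".
    """
    report = {}
    total = {"control": [0, 0], "variant": [0, 0]}
    for segment, arms in counts.items():
        for arm in ("control", "variant"):
            visitors, conversions = arms[arm]
            total[arm][0] += visitors
            total[arm][1] += conversions
        control_v, control_c = arms["control"]
        variant_v, variant_c = arms["variant"]
        report[segment] = rate(variant_c, variant_v) - rate(control_c, control_v)

    report["ALL"] = (rate(total["variant"][1], total["variant"][0])
                     - rate(total["control"][1], total["control"][0]))
    return report


# Hypothetical counts in which two segments cancel each other out.
counts = {
    "ios": {"control": (5000, 250), "variant": (5000, 300)},
    "android": {"control": (5000, 250), "variant": (5000, 200)},
}
report = segment_report(counts)
for segment, change in report.items():
    print(f"{segment}: {change:+.2%}")
```

In this made-up data the overall test looks flat, yet one segment improved and the other declined by the same amount, which is exactly the situation a single top-line number hides.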

The Motivational Chemistry and the Science of Persuasion

Here are my notes from Brian Cugelman’s presentation that inspired this approach to AB testing results. He deals a lot with the science of persuasion.

My favorite conclusions are:

“You will get more mileage from ANTICIPATION than from actual rewards.”

“Flattery will get you everywhere.”

I hope this infographic generates some dopamine for you, and that your newfound intelligence will produce serotonin during your next social engagement.
