Can you send a daily email to a business-to-business email list? How often can I email my B2B list? Check out these 4 lessons learned.

One of my favorite conversion strategies is the second chance. The second chance only comes when I have a way to continue the conversation: to get someone to come back and let me make my case again.

There is no better second chance channel than email.

When entrusted with an email address, and permission to continue the conversation, I have one, two, three or more chances to persuade a prospect to reconsider.

In a business-to-business situation — the considered purchase — in which a decision will be made over a period of weeks or months, email is a true friend. And if it is executed with respect, it is a friend to those struggling with a purchase decision.

The question is, how many second chances am I going to take?

Five Emails an Hour

I tell companies that they can send email as often as their content allows them.

I once got five emails from American Airlines within the space of an hour. Did I unsubscribe? Did I feel spammed? The emails were telling me the status of a flight I was booked on as its departure time and gate changed. The emails were completely relevant to my situation, and were welcome.

If we are to stand by our statement that businesses can send as often as their emails’ relevance allows, we need to understand the dynamics of a high-frequency email campaign.

How Often Can I Email My B2B List: An Email-a-Day Experiment

The goal of this experiment was to examine the following hypotheses:

- Sending email would outperform social media marketing.

- Sending frequent email would significantly increase my conversion rate.

- Sending frequently would cause an unacceptable number of my subscribers to unsubscribe.

- Sending frequent email would reduce my ability to deliver email due to spam reports.

a. The List

We chose a selection of 2000 names from my house list. This list consists of contacts made through personal interactions, meetings and consultations. It is primarily a business-to-business email list.

I would call this a “semi-warm” list, since it had received email from me only quarterly. It had received emails on January 11 and April 30, and the experiment began September 7.

Your list could easily be generated from social media traffic or search engine traffic.

b. The Content

Because these emails went out so frequently, it was important that each one provide some value and be entertaining. This proved to be a significant challenge.

Each email followed this formula:

- A non-promotional subject line

- Relevant copy

- Link to relevant content online or registration for a live event

- Varied offers, including an invitation to subscribe to my mailing list, registration for a live workshop and an invitation to a webinar on writing for landing pages.

Subject lines included “Are you the victim of the Email Invisibility Ray?,” “Social Media: Marketing from my La-Z-Boy,” and “Why eight-year-olds beat me at Chess.”

c. The Frequency

Emails were sent daily, Tuesday through Friday, for two consecutive weeks. Eight emails were sent in all.

High Frequency Email Campaign Test Results

1. Email Performance vs. Social Media

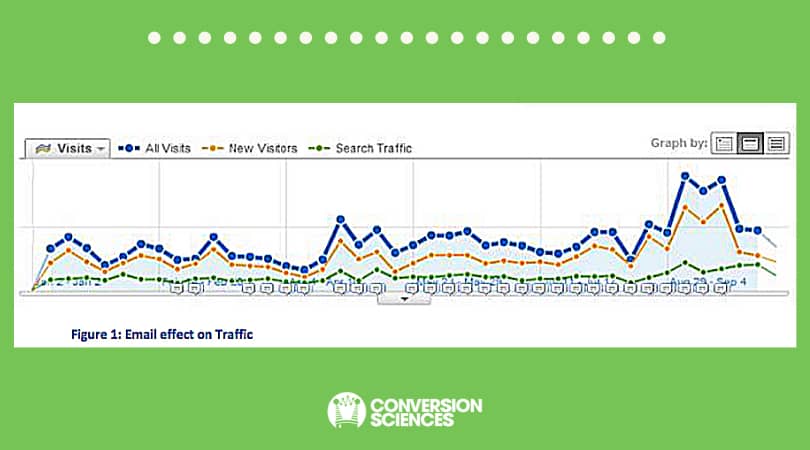

We’ve had relatively good luck using social media to drive traffic to my site. However, in Figure 1, you can see that the email resulted in significant increases in traffic, even outperforming our summer social media experiment.

Hypothesis: “Sending email would outperform social media marketing.” True

One interesting note is the rise in search engine traffic at the time of the email. This underscores that click-through rate is only a partial measurement of email effectiveness.

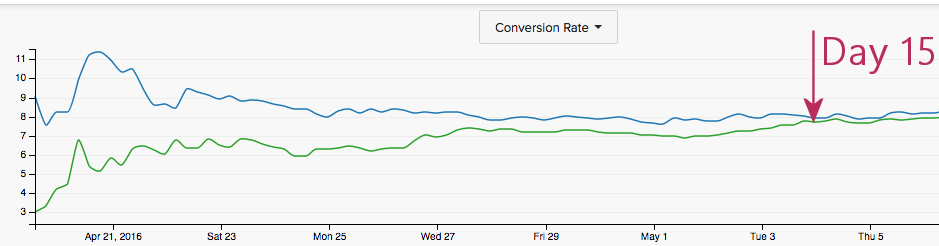

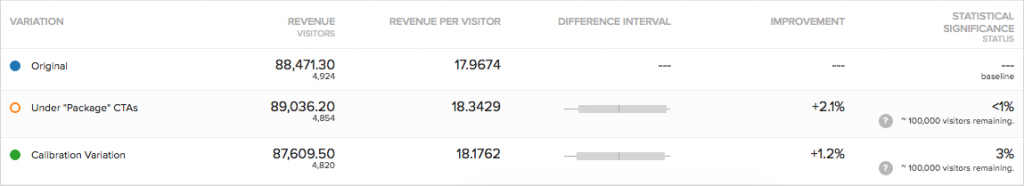

2. Increased Conversion Rate

It is probably not surprising that sending email to a targeted list is going to result in more conversions. However, keep in mind that my social media networks are also quite well-targeted.

As expected, both conversions and conversion rates for new subscribers increased. We can also attribute thirteen (13) workshop registrations to this email series, generating almost $1300 in sales.

Looking just at new email subscribers, the conversion rate for our social media experiment was 2.5%. For the period of this email series, the conversion rate was 7.6%.
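Comparing two conversion rates comes down to a ratio, the relative lift, and a difference in percentage points. The short sketch below uses only the two rates reported above. The function name `relative_lift` is a hypothetical helper, not part of any analytics tool, and the underlying visitor counts are not given here, so it works from the percentages directly.

```python
def relative_lift(baseline_rate: float, test_rate: float) -> float:
    """Return how many times larger test_rate is than baseline_rate."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return test_rate / baseline_rate


# New-subscriber conversion rates reported in this experiment.
social_media_rate = 0.025  # 2.5%
email_rate = 0.076         # 7.6%

lift = relative_lift(social_media_rate, email_rate)
point_gain = (email_rate - social_media_rate) * 100  # difference in percentage points

print(f"Email converted {lift:.1f}x as well as social media (+{point_gain:.1f} points)")
```

By this measure, the email period converted new subscribers at roughly three times the social media rate, a gain of about 5 percentage points.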

Hypothesis: “Sending frequent email would significantly increase my conversion rate.” True

3. Opt-out Rates

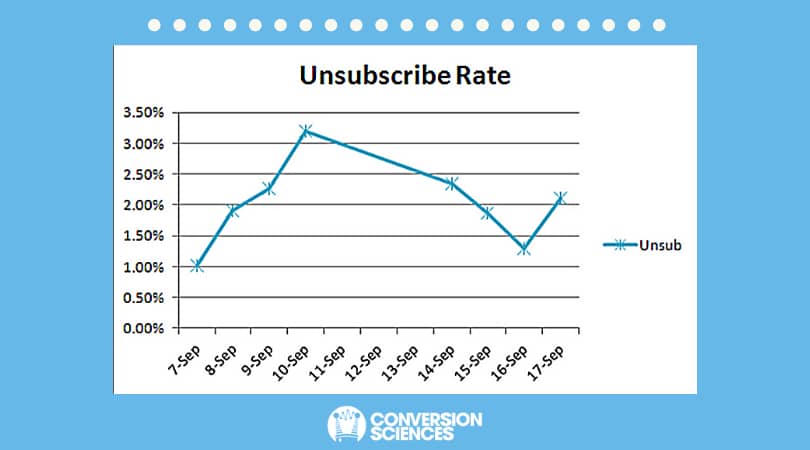

This was the metric I was most interested in examining. How would unsubscribe rates change over the course of the experiment?

I consider an unsubscribe rate of 1% or less acceptable and expected in any email that asks the reader to take action. So, I got pretty nervous as unsubscribe rates rose to 3.2%, and stayed well above 1%. Over the course of the experiment, 15% of the list unsubscribed.

There are two ways to look at this:

- We lost 15% of our prospects.

- We identified the 85% of list members that are interested and qualified.
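The 15% figure is cumulative across all eight sends, while the 1% to 3.2% figures are per-send rates. Under the assumption that each send's unsubscribe rate applies to the subscribers still on the list at that point, losses compound multiplicatively rather than simply adding up. The per-send rates below are hypothetical (a flat 2% per send), not the measured values from the chart above, and `cumulative_unsubscribe` is an illustrative helper.

```python
from math import prod


def cumulative_unsubscribe(per_send_rates: list[float]) -> float:
    """Fraction of the original list lost after a series of sends.

    Assumes each rate is measured against the subscribers remaining
    at the time of that send, so the losses compound.
    """
    remaining = prod(1.0 - r for r in per_send_rates)
    return 1.0 - remaining


# Hypothetical: eight sends, each losing 2% of whoever is still subscribed.
rates = [0.02] * 8
print(f"{cumulative_unsubscribe(rates):.1%}")  # prints 14.9%
```

Under this assumption, a steady 2% per send would account for roughly the 15% total observed. A naive sum of the rates (8 × 2% = 16%) slightly overstates the loss.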

If my goal with this list were primarily to sell, I would consider the 15% loss acceptable and even desirable. This is called “shaping” your list.

However, my goal is to evangelize conversion and to educate, so the opt-outs represent a pretty significant loss of reach.

From a brand perspective, there were very few negative comments, and many positive ones.

Given the opt-out rates, would I do this again? The answer is a resounding yes.

Hypothesis: “Sending frequently would cause an unacceptable number of my subscribers to unsubscribe.” False

4. The Effect on Deliverability

The other negative effect that frequent emails can have is an increase in spam reports.

For most service providers, deliverability is essentially the inverse of the bounce rate: the share of sent emails that did not bounce. If my emails were being reported as spam, I would expect to see an increase in bounces. Intuitively, when shaping a list, you expect bounce rates to drop quickly as bouncing addresses are removed from the list.

For our experiment, the bounce rate began at 2.5% but quickly dropped, leveling off at an imperceptible 0.06%.
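The list-shaping effect on bounce rate can be illustrated with a toy simulation: bounced addresses are removed after each send, so later sends bounce less. This is a minimal sketch under simplifying assumptions. The 2,000 addresses, the 50 undeliverable ones (matching the 2.5% starting rate), and the `send_and_shape` helper are all hypothetical, and every bounce is treated as permanent.

```python
def send_and_shape(subscribers: set[str], undeliverable: set[str]) -> tuple[float, set[str]]:
    """Simulate one send: return its bounce rate and the list with bounced addresses removed."""
    if not subscribers:
        return 0.0, set()
    bounced = subscribers & undeliverable
    bounce_rate = len(bounced) / len(subscribers)
    return bounce_rate, subscribers - bounced


# Hypothetical list of 2,000 addresses, 50 of which (2.5%) are undeliverable.
subscribers = {f"user{i}@example.com" for i in range(2000)}
undeliverable = {f"user{i}@example.com" for i in range(50)}

for send in range(1, 4):
    bounce_rate, subscribers = send_and_shape(subscribers, undeliverable)
    deliverability = 1.0 - bounce_rate  # share of the send that did not bounce
    print(f"send {send}: bounce {bounce_rate:.2%}, deliverability {deliverability:.2%}")
```

In this toy model, the first send bounces 2.50% and later sends bounce 0.00%. Real lists keep a small residual bounce rate, like the 0.06% seen here, because not every bounce reflects an address that is gone for good.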

One reader was kind enough to let me know that they had “spammed” my email, that is, reported it as spam. I used the site MXToolbox.com to check whether my domain had been placed on any blacklists. However, it would be our email service provider (ESP) that took the hit if spam was reported. This is one big value of an ESP: they keep themselves, and you, off blacklists.

Another measure of reader interest is open rates.

Email service providers establish open rates by counting how many times a special tracking image in the email is downloaded. Since many people have images turned off in their email clients, the open rate is not an accurate measure of actual opens.

However, I would interpret a steady drop in open rates as a sign that the list is becoming fatigued with my communications. Open rate can also be a good indicator of the quality of your subject line.
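To distinguish a steady decline from ordinary send-to-send noise, you can fit a straight line through the open rates of successive sends and look at its slope. The sketch below computes an ordinary least-squares slope by hand. The `open_rate_trend` name and the sample open rates are hypothetical, and the post does not specify what slope would count as fatigue.

```python
def open_rate_trend(open_rates: list[float]) -> float:
    """Least-squares slope of open rate per send; negative means opens are declining."""
    n = len(open_rates)
    if n < 2:
        raise ValueError("need at least two sends to measure a trend")
    mean_x = (n - 1) / 2  # mean of send indices 0..n-1
    mean_y = sum(open_rates) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(open_rates))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


# Hypothetical open rates for eight sends: roughly flat with dips on Fridays.
flat_with_dips = [0.22, 0.21, 0.22, 0.18, 0.22, 0.21, 0.22, 0.18]
# Hypothetical steadily declining series, the pattern that would suggest fatigue.
declining = [0.30, 0.28, 0.26, 0.24, 0.22, 0.20, 0.18, 0.16]

print(f"flat with dips: {open_rate_trend(flat_with_dips):+.4f} per send")
print(f"declining:      {open_rate_trend(declining):+.4f} per send")
```

A recurring Friday dip pulls the slope down only slightly, while a genuine decline produces a clearly negative slope. That is why the trend across several sends says more than any single day's open rate.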

Open rates were relatively flat, dropping on Fridays.

Overall, I believe that few of my readers reported these emails as spam.

I attribute this positive outcome to the non-promotional nature of the copy, even though the emails were clearly promoting our email list, workshop and webinar.

Hypothesis: “Sending frequent email would reduce my ability to deliver email due to spam reports.” False

How Often Can I Email My B2B List: Conclusions

With some simple analytics in place, we can pretty easily establish the ideal frequency of our email campaigns. Based on these results, we should be sending email more frequently, and you will probably reach the same conclusion. However, this experiment tested a certain kind of email: one that is informational and entertaining as well as promotional. This style of email requires a bit more work and creativity on our part.

The payoff is quite clear.

Email is a more effective channel in a B2B sale than is social media. It is also a great way to get more out of your search engine and advertising traffic. When you get an email address, you get a second chance at the sale. And a third, fourth and fifth chance.

For the complete content of the emails sent during this experiment, and the results of some split tests conducted, visit.