The AB test results had come in, and the result was inconclusive. The Conversion Sciences team was disappointed. They thought the change would increase revenue. What they didn’t know was that the top-level results were lying.

While we can learn something from inconclusive tests, it’s the winners that we love. Winners increase revenue, and that feels good.

The team looked closer at our results. When a test concludes, we analyze the results in analytics to see if there is any more we can learn. We call this post-test analysis.

Isolating the segment of traffic that saw test variation A, it was clear that one browser had under-performed the others: Internet Explorer.

Performance of Variation A. Internet Explorer visitors significantly under-performed the other three popular browsers.

The visitors coming on Internet Explorer were converting at less than half the average of the other browsers and generating one-third the revenue per session. This was not true of the Control. Something was wrong with this test variation. Despite a vigorous QA effort that included all popular browsers, an error had been introduced into the test code.

Analysis showed that correcting this would deliver a 13% increase in conversion rate and 19% increase in per session value. And we would have a winning test after all.

Conversion Sciences has a rigorous QA process to ensure that errors like this are very rare, but they happen. And they may be happening to you.

Post-test analysis keeps us from making bad decisions when the unexpected rears its ugly head. Here’s a primer on how conversion experts ensure they are making the right decisions by doing post-test analysis.

Did Any Of Our Test Variations Win?

The first question that will be on our lips is, “Did any of our variations win?”

There are two possible outcomes when we examine the results of an AB test.

The test was inconclusive. None of the alternatives beat the control. The null hypothesis was not rejected.

One or more of the treatments beat the control in a statistically significant way.
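As a rough sketch of what “statistically significant” can mean for the second outcome, the following Python compares two conversion rates with a pooled two-proportion z-test. The function name and the visitor and conversion counts are made up for illustration, and your testing tool may well use a different statistical method.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for conversion rate B versus A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: control 200/10,000, treatment 260/10,000
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below the threshold chosen before the test started (0.05 is a common convention) would put the test in the second outcome. Otherwise it is inconclusive.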

Joel Harvey of Conversion Sciences describes his process below:

“Post-test analysis” is sort of a misnomer. A lot of analytics happens in the initial setup and throughout the full AB testing process. The “post-test” insights derived from one batch of tests are the “pre-test” analytics for the next batch, and the best way to have good goals for that next batch of tests is to set the right goals during your previous split tests.

That said, when you look at the results of an AB testing round, the first thing you need to look at is whether the test was a loser, a winner, or inconclusive.

Verify that the winners were indeed winners. Look at all the core criteria: statistical significance, p-value, test length, delta size, etc. If it checks out, then the next step is to show it to 100% of traffic and look for that real-world conversion lift.

In a perfect world you could just roll it out for 2 weeks and wait, but usually, you are jumping right into creating new hypotheses and running new tests, so you have to find a balance.

Once we’ve identified the winners, it’s important to dive into segments.

Mobile versus non-mobile

Paid versus unpaid

Different browsers and devices

Different traffic channels

New versus returning visitors (important to set up and integrate this beforehand)

This is fairly easy to do with enterprise tools, but might require some more effort with less robust testing tools. It’s important to have a deep understanding of how tested pages performed with each segment. What’s the bounce rate? What’s the exit rate? Did we fundamentally change the way this segment is flowing through the funnel?

We want to look at this data in full, but it’s also good to remove outliers falling outside two standard deviations of the mean and re-evaluate the data.

It’s also important to pay attention to lead quality. The longer the lead cycle, the more difficult this is. In a perfect world, you can integrate the CRM, but in reality, this often doesn’t work very seamlessly.
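The outlier check described above (dropping values more than two standard deviations from the mean, then re-evaluating) can be sketched in a few lines of Python. This is only an illustration: the function name, the order values, and the use of the population standard deviation are assumptions, not part of the process quoted here.

```python
import statistics


def trim_outliers(values, num_sd=2.0):
    """Keep only values within num_sd standard deviations of the mean."""
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)  # population standard deviation (an assumption)
    if sd == 0:
        return list(values)
    return [v for v in values if abs(v - mean) <= num_sd * sd]


# Made-up order values for one variation, including one very large order
orders = [40, 45, 50, 55, 60, 48, 52, 47, 53, 900]
trimmed = trim_outliers(orders)

# Look at the data in full and again with outliers removed
print(statistics.mean(orders), statistics.mean(trimmed))
```

Reporting both averages side by side shows how much a single large order moves the result.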

Chris McCormick, Head of Optimisation at PRWD, describes his process:

When a test concludes, we always use the testing tool as a guide but we would never hang our hat on that data. We always analyse results further within Google Analytics, as this is the purest form of data.

For any test, we always set out at the start what our ‘primary success metrics’ are. These are what we look to identify first via GA and what we communicate as a priority to the client. Once we have a high level understanding of how the test has performed, we start to dig below the surface to understand if there are any patterns or trends occurring. Examples of this would be: the day of the week, different product sets, new vs returning users, desktop vs mobile etc.

We always look to report on a rough ROI figure for any test we deliver, too. In most cases, I would look to do this based on taking data from the previous 12 months and applying whatever the lift was to that. This is always communicated to the client as a ballpark figure i.e.: circa £50k ROI. The reason for this is that there are so many additional/external influences on a test that we can never be 100% accurate; testing is not an exact science and shouldn’t be treated as such.
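The ballpark calculation described here is simple arithmetic: take the previous 12 months of revenue and apply the observed lift. A minimal Python sketch follows. The function name, the rounding step, and the input figures are assumptions chosen only to illustrate the idea.

```python
def ballpark_roi(trailing_12_month_revenue, observed_lift, round_to=1_000):
    """Apply a test's relative lift to last year's revenue and round to a ballpark."""
    projected_gain = trailing_12_month_revenue * observed_lift
    # Round so the figure reads as an estimate rather than a precise forecast
    return round(projected_gain / round_to) * round_to


# Hypothetical inputs: 1,000,000 in trailing revenue and a 5% lift
print(ballpark_roi(1_000_000, 0.05))
```

Rounding to the nearest thousand is one way to keep the number from implying more precision than the test can support.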

Are We Making Type I or Type II errors?

In our post on AB testing statistics, we discussed type I and type II errors. We work to avoid these errors at all costs.

To avoid errors in judgement, we verify the results of our testing tool against our analytics. It is very important that our testing tool send data to our analytics package telling us which variations are seen by which segments of visitors.

Our testing tools deliver only top-level results, and we’ve seen that technical errors happen. So we reproduce the results of our AB test using analytics data.

Did each variation get the same number of conversions? Was revenue reported accurately?
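One way to make this cross-check routine is to compare the two sources metric by metric and flag anything that differs by more than a chosen tolerance. The sketch below assumes you have already exported the numbers from both tools into dictionaries. The function name, the data layout, the 5% tolerance, and the figures are all hypothetical.

```python
def find_discrepancies(tool, analytics, tolerance=0.05):
    """Return {(variation, metric): relative difference} for mismatches above tolerance."""
    issues = {}
    for variation, metrics in tool.items():
        for metric, tool_value in metrics.items():
            analytics_value = analytics[variation][metric]
            baseline = max(abs(tool_value), abs(analytics_value))
            if baseline == 0:
                continue  # both sources report zero, nothing to compare
            rel_diff = abs(tool_value - analytics_value) / baseline
            if rel_diff > tolerance:
                issues[(variation, metric)] = rel_diff
    return issues


# Made-up exports from the testing tool and the analytics package
tool = {
    "control": {"conversions": 200, "revenue": 10_000.0},
    "variation_a": {"conversions": 230, "revenue": 12_000.0},
}
analytics = {
    "control": {"conversions": 198, "revenue": 9_900.0},
    "variation_a": {"conversions": 228, "revenue": 9_000.0},
}
print(find_discrepancies(tool, analytics))
```

Small differences between tools are normal, so the tolerance is a judgment call. A large gap on one variation only is the kind of signal worth investigating.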

Errors are best avoided by ensuring the sample size is large enough and utilizing a proper AB testing framework. Peep Laja describes his process below:

First of all I check whether there’s enough sample size and that we can trust the outcome of the test. I check if the numbers reported by the testing tool line up with the analytics tool, both for CR (conversion rate) and RPV (revenue per visit).

In the analytics tool I try to understand how the variations changed user behavior – by looking at microconversions (cart adds, certain page visits etc) and other stats like cart value, average qty per purchase etc.

If the sample size is large enough, I want to see the results of the test across key segments (provided that the results in the segments are valid, have enough volume etc), and see if the treatments performed better/worse inside the segments. Maybe there’s a case for personalization there. The segments I look at are device split (if the test was run across multiple device categories), new/returning, traffic source, first time buyer / repeat buyer.

How Did Key Segments Perform?

In the case of an inconclusive test, we want to look at individual segments of traffic.

For example, we have had an inconclusive test on smartphone traffic in which the Android visitors loved our variation, but iOS visitors hated it. They cancelled each other out. Yet we would have missed an important piece of information had we not looked more closely.

Visitors react differently depending on their device, browser and operating system.
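To see how two segments can cancel each other out, here is a small Python sketch that computes the relative lift overall and per segment. The function names and all of the numbers are invented for illustration. They are not the data from the test described above.

```python
def relative_lift(control, variation):
    """Relative lift in conversion rate; each argument is (conversions, sessions)."""
    control_rate = control[0] / control[1]
    variation_rate = variation[0] / variation[1]
    return (variation_rate - control_rate) / control_rate


def lift_overall_and_by_segment(results):
    """results maps segment -> {"control": (conv, sessions), "variation": (conv, sessions)}."""
    totals = {"control": [0, 0], "variation": [0, 0]}
    per_segment = {}
    for segment, arms in results.items():
        per_segment[segment] = relative_lift(arms["control"], arms["variation"])
        for arm in ("control", "variation"):
            totals[arm][0] += arms[arm][0]
            totals[arm][1] += arms[arm][1]
    overall = relative_lift(totals["control"], totals["variation"])
    return overall, per_segment


# Made-up smartphone data in which the two platforms cancel out
results = {
    "android": {"control": (100, 5_000), "variation": (130, 5_000)},
    "ios": {"control": (100, 5_000), "variation": (70, 5_000)},
}
overall, per_segment = lift_overall_and_by_segment(results)
print(f"overall: {overall:+.0%}")
for segment, lift in per_segment.items():
    print(f"{segment}: {lift:+.0%}")
```

The top-level number shows no change while each platform moves sharply in opposite directions. Segment-level differences still need enough volume to be trusted before acting on them.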

Other segments that may perform differently may include:

Return visitors vs. New visitors

Chrome browsers vs. Safari browsers vs. Internet Explorer vs. …

Organic traffic vs. paid traffic vs. referral traffic

Email traffic vs. social media traffic

Buyers of premium products vs. non-premium buyers

Home page visitors vs. internal entrants

These segments will be different for each business, but provide insights that spawn new hypotheses, or even provide ways to personalize the experience.

Understanding how different segments are behaving is fundamental to good testing analysis, but it’s also important to keep the main thing the main thing, as Rich Page explains:

Avoid analysis paralysis. Don’t slice the results into too many segments or different analytics tools. You may often run into conflicting findings. Revenue should always be considered the best metric to pay attention to, other than conversion rate. After all, what good is a result with a conversion lift if it doesn’t also increase revenue?

The key thing is not to throw out A/B tests that have inconclusive results, as this will happen quite often. This is a great opportunity to learn and create a better follow up A/B test. In particular you should gain visitor feedback regarding the page being A/B tested, and show them your variations – this helps reveal great insights into what they like and don’t like. Reviewing related visitor recordings and click maps also gives good insights.

Nick So of WiderFunnel talks about segments as well within his own process for AB test analysis:

“Besides the standard click-through rate, funnel drop-off, and conversion rate reports for post-test analysis, most of the additional reports and segments I pull are very dependent on the business context of a website’s visitors and customers.

For an ecommerce site that does a lot of email marketing and has high return buyers, I look at the difference in source traffic as well as new versus returning visitors. Discrepancies in behavior between segments can provide insights for future strategies, where you may want to focus on the behaviors of a particular segment in order to get that additional lift.

Sometimes, just for my own personal geeky curiosity, I look into seemingly random metrics to see if there are any unexpected patterns. But be warned: it’s easy to get too deep into that rabbit hole of splicing and dicing the data every which way to find some sort of pattern.

For lead-gen and B2B companies, you definitely want to look at the full buyer cycle and LTV of your visitors in order to determine the true winner of any experiment. Time and time again, I have seen tests that successfully increase lead submissions, only to discover that the quality of the leads coming through is drastically lower; which could cost a business MORE money in funnelling sales resources to unqualified leads.

In terms of post-test results analysis and validation — besides whatever statistical method your testing tool uses — I always run results through WiderFunnel’s internal results calculator which utilizes bayesian statistics to provide the risk and reward potential of each test. This allows you to make a more informed business decision, rather than simply a win/loss, significant/not significant recommendation.”
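The details of WiderFunnel’s internal calculator are not given here, so the following is not a reproduction of it. It is only a minimal sketch of one common Bayesian approach: a Beta-Binomial model with uniform priors, sampled by Monte Carlo, that estimates the probability a variation beats the control. The function name, priors, draw count, and example numbers are all assumptions.

```python
import random


def probability_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=0):
    """Estimate P(rate_B > rate_A) under independent Beta(1, 1) priors."""
    rng = random.Random(seed)  # fixed seed so the estimate is repeatable
    wins = 0
    for _ in range(draws):
        # Posterior for each arm: Beta(1 + conversions, 1 + non-conversions)
        sample_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        sample_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if sample_b > sample_a:
            wins += 1
    return wins / draws


# Hypothetical counts: control 200/10,000, variation 260/10,000
print(probability_b_beats_a(200, 10_000, 260, 10_000))
```

A probability like this can be weighed against the cost of being wrong, which fits the risk-and-reward framing described above better than a bare significant/not significant label.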

In addition to understanding how tested changes impacted each segment, it’s also useful to understand where in the customer journey those changes had the greatest impact, as Benjamin Cozon describes:

We need to consider that the end of the running phase of a test is actually the beginning of insight analysis.

Why is each variation delivering a particular conversion rate? In which cases are my variations making a difference, whether positive or negative? In order to better understand the answers to these questions, we always try to identify which user segments are the most elastic to the changes that were made.

One way we do it is by ventilating the data, that is, breaking it down by session-based or user-based dimensions. Here are some of the dimensions we use for almost every test:

User type (new / returning)

Prospect / new Client / returning client

Acquisition channel

Type of landing page

This type of ventilation helps us understand the impact of specific changes for users relative to their specific place in the customer journey. Having these additional insights also helps us build a strong knowledge base and communicate effectively throughout the organization.

Finally, while it is a great idea to have a rigorous quality assurance (QA) process for your tests, some may slip through the cracks. When you examine segments of your traffic, you may find one segment that performed very poorly. This may be a sign that the experience they saw was broken.

It is not unusual to see visitors using Internet Explorer crash and burn since developers abhor making customizations for that non-compliant browser.

How Did Changes Affect Lead Quality?

Post-test analysis allows us to be sure that the quality of our conversions is high. It’s easy to increase conversions. But are these new conversions buying as much as the ones who saw the control?

Several of Conversion Sciences’ clients prize phone calls, and the company optimizes for them. Each week, the calls are examined to ensure the callers are qualified to buy and truly interested in a solution.

In post-test analysis, we can examine the average order value for each variation to see if buyers were buying as much as before.

We can look at the profit margins generated for the products purchased. If revenue per visit rose, did profit follow suit?

Marshall Downey of Build.com has some more ideas for us in the following instagraph infographic.

Revenue is often looked to as the pre-eminent judge of lead quality, but doing so comes with its own pitfalls, as Ben Jesson describes in his approach to AB test analysis.

If a test doesn’t reach significance, we quickly move on to the next big idea. There are limited gains to be had from adding complexity by promoting narrow segments.

It can be priceless to run on-page surveys on the winning page, to identify opportunities for improving it further. Qualaroo and Hotjar are great for this.

Lead quality is important, and we like to tackle it from two sides. First, qualitatively: Does the challenger page do anything that is likely to reduce or increase the lead value? Second, quantitatively: How can we track leads through to the bank, so we can ensure that we’ve grown the bottom line?

You might expect that it’s better to measure revenue than to measure the number of orders. However, statistically speaking, this is often not true. A handful of random large orders can greatly skew the revenue figures. Some people recommend manually removing the outliers, but that only acknowledges the method’s intrinsic problem. How do you define outlier, and why aren’t we interested in them? If your challenger hasn’t done anything that is likely to affect the order size, then you can save time by using the number of conversions as the goal.

After every winning experiment, record the results in a database that’s segmented by industry sector, type of website, geographic location, and conversion goal. We have been doing this for a decade, and the value it brings to projects is priceless.

Analyze AB Test Results by Time and Geography

Conversion quality is important, and Theresa Baiocco takes this one step further.

For lead gen companies with a primary conversion goal of a phone call, it’s not enough to optimize for quantity of calls; you have to track and improve call quality. And if you’re running paid ads to get those phone calls, you need to incorporate your cost to acquire a high-quality phone call, segmented by:

Hour of day

Day of week

Ad position

Geographic location, etc

When testing for phone calls, you have to compare the data from your call tracking software with the data from your advertising. For example, if you want to know which day of the week your cost for a 5-star call is lowest, you first pull a report from your call tracking software on 5-star calls by day of week:

Then, check data from your advertising source, like Google AdWords. Pull a report of your cost by day of week for the same time period:

Then, you simply divide the amount you spent by the number of 5-star calls you got, to find out how much it costs to generate a 5-star call each day of the week.

Repeat the process on other segments, such as hour of day, ad position, week of the month, geographic location, etc. By doing this extra analysis, you can shift your advertising budget to the days, times, and locations when you generate the highest quality of phone calls – for less.
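The division step described above can be sketched in Python as follows. The two dictionaries stand in for the reports pulled from the call tracking software and the advertising source. The function name and every figure are made up for illustration.

```python
def cost_per_quality_call(spend_by_day, quality_calls_by_day):
    """Divide ad spend by the number of 5-star calls for each day of the week."""
    cost = {}
    for day, spend in spend_by_day.items():
        calls = quality_calls_by_day.get(day, 0)
        # No 5-star calls means the cost is undefined, so record None
        cost[day] = spend / calls if calls else None
    return cost


# Made-up figures standing in for the advertising and call tracking reports
spend_by_day = {"Mon": 500.0, "Tue": 300.0, "Wed": 200.0}
quality_calls_by_day = {"Mon": 10, "Tue": 4}

cost = cost_per_quality_call(spend_by_day, quality_calls_by_day)
cheapest_day = min(
    (day for day, value in cost.items() if value is not None),
    key=lambda day: cost[day],
)
print(cost, cheapest_day)
```

The same function works for any other segment, such as hour of day or geographic location, as long as both reports use the same keys and cover the same time period.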

Look for Unexpected Effects

Results aren’t derived in a vacuum. Any change will create ripple effects throughout a website, and some of these effects are easy to miss.

Craig Andrews gives us insight into this phenomenon via a recent discovery he made with a new client:

I stumbled across something last week – and I almost missed it because it was secondary effects of a campaign I was running. One weakness of CRO, in my honest opinion, is the transactional focus of the practice. CRO doesn’t have a good way of measuring follow-on effects.

For example, I absolutely believe pop-ups increase conversions, but at what cost? How does it impact future engagement with the brand? If you are selling commodities, then it probably isn’t a big concern. But most people want to build brand trust & brand loyalty.

We discovered a shocking level of re-engagement with content based on the quality of a visitor’s first engagement. I probably wouldn’t believe it if I hadn’t seen it personally and double-checked the analytics. In the process of doing some general reporting, we discovered that we radically increased the conversion rates of the 2 leading landing pages as secondary effects of the initial effort.

We launched a piece of content that we helped the client develop. It was a new client and the development of this content was a little painful with many iterations as everyone wanted to weigh in on it. One of our biggest challenges was getting the client to agree to change the voice & tone of the piece – to use shorter words & shorter sentences. They were used to writing in a particular way and were afraid that their prospects wouldn’t trust & respect them if they didn’t write in a highbrow academic way.

We completed the piece, created a landing page and promoted the piece primarily via email to their existing list. We didn’t promote any other piece of content all month. They had several pieces (with landing pages) that had been up all year.

It was a big success. It was the most downloaded piece of content for the entire year. It had more downloads in one month than any other piece had in total for the entire year. Actually, 28% more downloads than #2 which had been up since January.

But then, I discovered something else…

The next 2 most downloaded pieces of content spiked in October. In fact, 50% of the total year’s downloads for those pieces happened in October. I thought it may be a product of more traffic & more eyeballs. Yes that helped, but it was more than that. The conversion rates for those 2 landing pages increased 160% & 280% respectively!

We did nothing to those landing pages. We didn’t promote that content. We changed nothing except the quality of the first piece of content that we sent out in our email campaign.

Better writing increased the brand equity for this client and increased the demand for all other content.

Testing results can also be compared against an archive of past results, as Shanelle Mullin discusses here:

There are two benefits to archiving your old test results properly. The first is that you’ll have a clear performance trail, which is important for communicating with clients and stakeholders. The second is that you can use past learnings to develop better test ideas in the future and, essentially, foster evolutionary learning.

The clearer you can communicate the ROI of your testing program to stakeholders and clients, the better. It means more buy-in and bigger budgets.

You can archive your test results in a few different ways. Tools like Projects and Effective Experiments can help, but some people use plain ol’ Excel to archive their tests. There’s no single best way to do it.

What’s really important is the information you record. You should include: the experiment date, the audience / URL, screenshots, the hypothesis, the results, any validity factors to consider (e.g. a PR campaign was running, it was mid-December), a link to the experiment, a link to a CSV of the results, and insights gained.
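If you would rather keep the archive in a structured file than a spreadsheet, the fields listed above map naturally onto a small record type. The Python sketch below is one possible layout, not a prescribed format. The class name, field names, and example values (including the example.com links) are all hypothetical.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import date


@dataclass
class ExperimentRecord:
    experiment_date: date
    audience_or_url: str
    hypothesis: str
    results: str
    insights: str
    screenshots: list = field(default_factory=list)
    validity_factors: list = field(default_factory=list)
    experiment_link: str = ""
    results_csv_link: str = ""

    def to_json(self):
        """Serialize the record, storing the date as an ISO 8601 string."""
        row = asdict(self)
        row["experiment_date"] = self.experiment_date.isoformat()
        return json.dumps(row, indent=2)


# Placeholder values for illustration only
record = ExperimentRecord(
    experiment_date=date(2016, 12, 15),
    audience_or_url="/pricing (all visitors)",
    hypothesis="A benefits-driven headline will increase signups.",
    results="Inconclusive",
    insights="Returning visitors responded better than new visitors.",
    validity_factors=["It was mid-December"],
    experiment_link="https://example.com/experiments/1",
    results_csv_link="https://example.com/experiments/1.csv",
)
print(record.to_json())
```

Keeping validity factors as their own field makes it easier to discount old results that ran under unusual conditions.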

Why Did We Get The Result We Got?

Ultimately, we want to answer the question, “Why?” Why did one variation win and what does it tell us about our visitors?

This is a collaborative process and speculative in nature. Asking why has two primary effects:

It develops new hypotheses for testing

It causes us to rearrange the hypothesis list based on new information

Our goal is to learn as we test, and asking “Why?” is the best way to cement our learnings.

One of these A/B testing strategies is right for your website, and will lead to bigger wins faster.

We have used analysis and testing to find significant increases in revenue and leads for hundreds of companies. For each one, we fashion a unique AB testing strategy, defining where to start and what to test.

However, we will virtually always build that unique testing strategy on one of seven core strategies that I consider fundamental to CRO success.

If you are beginning your own split testing or conversion optimization process, this is your guide to AB testing strategies. For each of these seven strategies, I’m going to show you:

When to use it

Where on the site to test it

What to test

Pitfalls to avoid

A real-life example

If you have more questions about your testing strategy, contact us and we’ll be more than happy to answer any questions I don’t cover here.

Let’s get started.

1. Gum Trampoline

We employ the gum trampoline approach when bounce rates are high, especially from new visitors. The bounce rate is the percentage of visitors who visit a site and leave after only a few seconds. Bouncers typically see only one page.

As the name implies, we want to use these AB testing strategies to slow the bouncing behavior, like putting gum on a trampoline.

We want more visitors to stick to our site and not bounce.

When to Use It

You have a high bounce rate on your entry pages. This approach is especially important if your paid traffic (PPC or display ads) is not buying.

You have run out of paid traffic for a given set of keywords.

Where to Test

Most of your attention will be focused on landing pages. For lead generation, these may be dedicated landing pages. For ecommerce sites, these may be category pages or product pages.

What to Test

The offer that matches the ad, link or social post.

The form that allows the visitor to take action. This may be just a button.

The proof you use on the page that it’s a good decision.

The trust you build, especially from third-party sources.

The images you use to show the product or service. Always have relevant images.

Be Careful

Reducing bounce rate can increase leads and revenue. However, it can also increase the number of unqualified visitors entering the site or becoming prospects.

Example

In the following example, there is a disconnect between the expectation set by the advertisement (left side) and the landing page visitors see when they click on the ad (right side).

Paid ads are often a fantastic tool for bringing in qualified traffic, but if the landing page isn’t matched to the ad, visitors are likely to immediately bounce from the page rather than attempting to hunt for the treasure promised in the ad.

In order to apply gum to this trampoline, Zumba would need to take ad click-throughs to a page featuring “The New Wonderland Collection”, preferably with the same model used in the ads. The landing page needs to be designed specifically for the type of user who would be intrigued by the ad.

2. Completion Optimization

The Completion strategy begins testing at the call to action. For a lead-generation site, the Completion strategy will begin with the action page or signup process. For an ecommerce site, we start with the checkout process.

When to Use It

The Completion strategy is used for sites that have a high number of transactions and want to decrease the abandonment rate. The abandonment rate is the percentage of visitors who start a checkout or registration process, but don’t complete it. They abandon the process before they’re done.
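The abandonment rate defined above, along with the drop-off at each step, can be computed from the number of visitors reaching each step of the process. The Python sketch below assumes a simple linear funnel. The function name, the step names, and the counts are hypothetical.

```python
def funnel_report(step_counts):
    """step_counts: ordered list of (step_name, visitors_reaching_step).

    Returns (overall abandonment rate, [(step_name, drop-off rate to next step)]).
    """
    started = step_counts[0][1]
    completed = step_counts[-1][1]
    if started == 0:
        raise ValueError("no visitors started the process")
    abandonment_rate = 1 - completed / started
    drop_offs = []
    for (name, count), (_, next_count) in zip(step_counts, step_counts[1:]):
        # Share of visitors at this step who did not reach the next one
        rate = 1 - next_count / count if count else 0.0
        drop_offs.append((name, rate))
    return abandonment_rate, drop_offs


# Made-up checkout funnel
steps = [("cart", 1_000), ("shipping", 600), ("payment", 450), ("confirmation", 300)]
abandonment, drop_offs = funnel_report(steps)
print(f"abandonment rate: {abandonment:.0%}")
for name, rate in drop_offs:
    print(f"{name}: {rate:.0%} drop-off")
```

The step with the largest drop-off is a reasonable first candidate for a test, although the cause may sit further upstream.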

Where to Test

Testing starts at the end of the funnel, in the shopping cart or registration process.

What to Test

There are lots of things that could be impacting your abandonment rate.

Do you need to build trust with credit logos, security logos, testimonials or proof points?

Are you showing the cart contents on every step?

Do you require the visitor to create an account to purchase?

Do your visitors prefer a one-step checkout or a multi-step checkout?

Once you have reduced the abandonment rates, you can begin testing further upstream, to get more visitors into your optimized purchase or signup process.

Be Careful

Testing in the cart can be very expensive. Any test treatments that underperform the control are costing you real leads and sales. Also, cart abandonment often has its roots further upstream. Pages on your site that make false promises or leave out key information may be causing your abandonment rates to rise.

For example, if you don’t talk about shipping fees before checkout, you may have lots of people starting the purchase process just to find out what your shipping fees are.

Example

As we’ve talked about before, best practices are essentially guesses in CRO. We know, as a general rule, that lowering checkout friction tends to improve conversion rates and lower abandonment. But sometimes, it’s actually perceived friction that impacts the checkout experience above and beyond the real level of friction.

For example, one of our clients upgraded their website and checkout experience in accordance with best practices.

The process was reduced from multiple steps to a single step.

The order is shown, including the product images.

The “Risk-free Guarantee” at the top and “Doctor Trusted” bug on the right reinforce the purchase.

Trust symbols are placed near the call-to-action button.

All costs have been addressed, including shipping and taxes.

The new checkout process should have performed better, yet it ended up having a significantly higher bounce rate than the previous checkout process.

Why?

After looking at the previous checkout experience, we realized that despite it actually requiring more steps (and clicks) on the part of the user, the process was broken up in such a way that the user perceived less friction along the way. Information was hidden behind each step, so that the user never ultimately felt the friction.

Step #1:

Paypal payment method step 1

Step #2:

Paypal billing information

This is just one of many reasons running AB tests is mandatory, and it’s also a good example of how beneficial it can be for certain businesses to start with the checkout process, as dictated by the long-term strategy.

3. Flow Optimization

The Flow approach is essentially the opposite of the Completion strategy. With this strategy, you’re trying to get more visitors into the purchase process before you start optimizing the checkout or registration process.

When to Use It

This strategy is typically best for sites with fewer transactions. The goal is to increase visits to the cart or registration process so that we can then start Completion testing at the bottom of the funnel.

Where to Test

Testing starts on entry pages, the pages on which visitors enter the site. This will typically include the home page and landing pages for lead-generating sites. For ecommerce sites, category pages and product pages get intense scrutiny to increase Add to Cart actions.

What to Test

With this strategy, we are most often trying to understand what is missing from the product or service presentation.

What questions are going unanswered?

What objections aren’t being addressed?

What information isn’t presented that visitors need?

Is the pricing wrong for the value presented?

We will test headlines, copy, images and calls to action when we begin the Flow strategy.

Be Careful

Even though we aren’t optimizing the checkout or registration process, avoid testing clicks or engagement metrics. Always use purchases or leads generated as the primary metric in your tests. It’s too easy to get unqualified visitors to add something to cart only to see abandonment rates skyrocket.

For example, when Groove decided its 2.3% homepage conversion rate wasn’t going to cut it anymore, it began the optimization process by revamping its value proposition. The existing page was very bland, with a stock photo and a weak headline that didn’t do anything to address the benefits of the service.

Groove SaaS and eCommerce Customer Support Value Proposition

The new page included a benefits-driven headline and a well-produced video of a real customer describing his positive experience with Groove. As a result, the page revamp more than doubled homepage conversions.

Groove created a ‘copy first’ landing page based on feedback from customers

The point here is that fixing your checkout process isn’t going to do you a ton of good if you aren’t getting a whole lot of people there in the first place. If initial conversions are low, it’s better to start with optimizing your core value proposition than go fishing for problems on the backend of your funnel.

4. Minesweeper

Minesweeper optimization strategies use clues from several tests to determine where additional revenue might be hiding.

Some sites are like the Minesweeper game that has shipped with Windows operating systems for decades. In the game you hunt for open squares and avoid mines. The location of mines is hinted at by numbered squares.

In this game, you don’t know where to look until you start playing. But it’s not random. This is like an exploratory testing strategy.

When to Use It

This testing strategy is for sites that seem to be working against the visitor at every turn. We see this when visit lengths are low or people leave products in the cart at high rates. Use it when things are broken all over the site, then dive into one of the other strategies.

As testing progresses, we get clues about what is really keeping visitors from completing a transaction. The picture slowly resolves as we collect data from around the site.

Where to Test

This strategy starts on the pages where the data says the problems lie.

What to Test

By its nature, it is hard to generalize about this testing strategy. As an example, we may believe that people are having trouble finding the solution or product they are looking for. Issues related to findability, or “discoverability” may include navigation tests, site search fixes, and changes to categories or category names.

Be Careful

This is our least-often used strategy. It is too scattershot to be used frequently. We prefer the data to lead us down tunnels where we mine veins of gold.

However, this is the most common of optimization strategies used by inexperienced marketers. It is one of the reasons that conversion projects get abandoned. The random nature of this approach means that there will be many tests that don’t help much and fewer big wins.

Example

You wouldn’t expect a company pulling in $2.1 billion in annual revenue to have major breaks in its website, yet that’s exactly what I discovered a few years back while attempting to make a purchase from Fry’s Electronics. Whenever I selected the “In-store Pickup” option, I was taken to the following error screen.

This is one of the most important buttons on the site, doubly so near Christmas when shipping gifts becomes an iffy proposition. Even worse, errors like this often aren’t isolated.

While finding a major error like this doesn’t necessarily mean you need to begin the Minesweeper optimization strategy, it’s always important to fix broken pieces of a site before you even begin to look at optimization strategies.

5. Big Rocks

Adding new features — “big rocks” — to a site can fundamentally change its effectiveness.

Almost every site has a primary issue. After analysis, you will see that there are questions about authority and credibility that go unanswered. You might find that issues with the layout are keeping many visitors from taking action.

The Big Rocks testing strategy adds fundamental components to the site in an effort to give visitors what they are looking for.

When to Use It

This strategy is used for sites that have a long history of optimization and ample evidence that an important component is missing.

Where to Test

These tests are usually site-wide. They involve adding fundamental features to the site.

What to Test

Some examples of big rocks include:

Ratings and Reviews for ecommerce sites

Live Chat

Product Demo Videos

Faceted Site Search

Recommendation Engines

Progressive Forms

Exit-intent Popovers

Be Careful

These tools are difficult to test. Once implemented, they cannot be easily removed from the site. Be sure you have evidence from your visitors that they want the rock. Don’t believe the claims of higher conversions made by the Big Rock company salespeople. Your audience is different.

Example

A good example of the Big Rocks strategy in action comes from Adore Me, a millennial-targeted lingerie retailer that catapulted its sales by installing Yotpo’s social-based review system. The company was relying primarily on email and phone for customer feedback and identified ratings and user reviews as its “big rock” to target.

The revamped customer engagement system helped spawn tens of thousands of new reviews and also facilitated a flood of user-generated content on sites like Instagram without Adore Me even having to purchase Instagram ads. Type in #AdoreMe and you’ll find thousands of unsponsored user-generated posts like these:

This is a great example of how certain AB testing strategies can help facilitate different business models. The key is to identify the big opportunities and then focus on creating real, engaging solutions in those areas.

6. Big Swings

Taking big swings can lead to home runs, but can also obscure the reasons for wins.

A “Big Swing” is any test that changes more than one variable and often changes several. It’s called a big swing because it’s when we swing for the fences with a redesigned page.

When to Use It

Like the Big Rock strategy, this strategy is most often used on a site that has a mature conversion optimization program. When we begin to find the local maxima for a site, it gets harder to find winning hypotheses. If evidence suggests that a fundamental change is needed, we’ll take a big swing and completely redesign a page or set of pages based on what we’ve learned.

Sometimes we start with a Big Swing if we feel that the value proposition for a site is fundamentally broken.

Where to Test

We often take big swings on key entry pages such as the home page or landing pages. For ecommerce sites, you may want to try redesigning the product page template for your site.

What to Test

Big Swings are often related to layout and messaging. All at once, several things may change on a page:

Copy

Images

Layout

Design Style

Calls to Action

Be Careful

Big swings don’t tell you much about your audience. When you change more than one thing, the changes can offset each other. Perhaps making the headline bigger increased the conversion rate on a page, but the new image decreased the conversion rate. When you change both, you may not see the change.

Example

Neil Patel is one of those marketers who likes to use the Big Swings strategy on a regular basis. For example, he has tried complete homepage redesigns for Crazy Egg on several occasions.

The first big redesign changed things from a short-form landing page to a very long-form page and resulted in a 30% increase in conversions.

The next big redesign scrapped the long page for another short page, but this time with concise, targeted copy and a video-driven value proposition. This new page improved conversions by another 13%.

And of course, Neil didn’t stop there. Crazy Egg’s homepage has changed yet again, with the current iteration simply inviting users to enter their website’s URL and see Crazy Egg’s user testing tools in action on their own site. How well is it converting? No clue, but if I know Neil, I can promise you the current page is Crazy Egg’s highest performer to date.

Sometimes the only way to improve conversions is to swing for the fences and try something new.

7. Nuclear Option

I’ll mention the nuclear option here, which is a full site redesign. There are only two good reasons to do an entire site redesign:

You’re changing to a new backend platform.

You’re redoing your company or product branding.

All other redesign efforts should be done with conversion optimization tests, as Wasp Barcode did.

Every website is different. The approach you take when testing a site should ultimately be determined by the data you have. Once you settle on a direction it can help you find bigger wins sooner.


There is no shortage of AB testing tips, tricks, and references to statistical significance. Here is a proven AB testing framework that guides you to consistent, repeatable results.

How do conversion optimization professionals get consistent performance from their AB testing programs?

If you are looking for a proven framework you can use to approach any website and methodically derive revenue-boosting insights, then you will love today’s infographic.

This is the AB testing framework industry professionals use to increase revenue for multi-million dollar clients:

The Purpose of an AB Testing Framework

It’s easy to make mistakes when AB testing. Testing requires discipline, and discipline requires guiding processes that enforce some level of rigor.

This framework ensures that you, the marketer-experimenter, keep some key principles in mind as you explore your website for increased revenue, leads, and subscriptions.

Don’t base decisions on bad data.

Create valid hypotheses.

Design tests that will make a difference.

Design tests that deliver good data.

Interpret the test data accurately.

Always ask, “Why?”

This is the framework CRO professionals use to stay on their best game.

1. Evaluate Existing Data

Here are the first two questions you need to ask when approaching a new site.

What data is currently available?

How reliable is this data?

In some cases, you will have a lot to work with in evaluating a new site. Your efforts will be primarily focused on going through existing data and pulling out actionable insights for your test hypotheses.

In other cases, you might not have much to work with or the existing data may be inaccurate, so you’ll need to spend some time setting up new tools for targeted data collection.

Data Audit

The data audit identifies data that is available to the data scientist. It typically includes:

Behavioral analytics package

Existing customer data, such as sales

Marketing studies completed

UX Studies completed

Product Reviews

Live Chat Transcripts

Customer surveys completed

All of these data sources are helpful in developing a rich list of hypotheses for testing.

Analytics Audit

Since our analytics database is the all-important central clearinghouse for our website, we want to be sure that it is recording everything we need and recording it accurately.

Often, we forget to track some very important things.

Popover windows are invisible to most analytics packages without some special code.

Links away from the site are not tracked. It’s important to know where your leaks are.

Tabbed content lets the visitor get specifics about products and is often not tracked.

Third-party websites, such as shopping carts, can break session tracking without special attention.

Interactions with off-site content are often masked through the use of iframes.

These issues must be addressed in our audit.

Integrations

It is important that as much data as possible is collected in our analytics database. We never know what questions we will have.

For post-test analysis (see below), we want to be sure our AB testing tool is writing information to the analytics database so we can recreate the test results there. This allows us to drill into the data and learn more about test subjects’ behaviors. This data is typically not available in our testing tools.

Data Correlations

Finally, we want to be sure that the data we’re collecting is accurate. For example, if our site is an ecommerce site, we want to be sure the revenue reported by our testing tool and analytics database is right. We will do a correlation calculation of the revenue reported by analytics with the actual sales of our company.

The same kind of correlation can be done for lead generation and phone calls.

We can also use multiple sources of data to validate our digital laboratory. Does the data in analytics match that reported by our testing tool? Is the number of ad clicks reported by our advertising company the same as the number seen in analytics?
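As a rough illustration of the correlation check described above, here is a minimal Python sketch. It compares daily revenue reported by analytics with actual sales from the backend, computing both a Pearson correlation and the share of sales that analytics captured. The function names and the daily figures are hypothetical and exist only for illustration.

```python
from math import sqrt


def pearson_correlation(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


def capture_ratio(reported, actual):
    """Share of actual revenue that the reporting tool captured."""
    return sum(reported) / sum(actual)


# Hypothetical daily revenue figures, for illustration only.
analytics_revenue = [1180.0, 950.0, 1420.0, 1010.0, 1275.0]
backend_sales = [1250.0, 1000.0, 1500.0, 1050.0, 1340.0]

r = pearson_correlation(analytics_revenue, backend_sales)
ratio = capture_ratio(analytics_revenue, backend_sales)
print(f"correlation: {r:.3f}, share of sales captured: {ratio:.1%}")
```

A high correlation with a capture ratio below 100% would suggest analytics is consistently missing a slice of sales rather than recording them erratically. What counts as "high enough" is a judgment call for your own setup.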

Once we have confidence in our setup, we can start collecting more data.

2. Collect Additional Quantitative & Qualitative Data

Once we understand the data already available to us, we’ll need to set up and calibrate tools that can acquire any additional data needed to run effective split tests. For our testing tool, we may choose to run an AA test.

Two important types of data give us insight into optimizing a site.

Quantitative Data

Qualitative Data

Quantitative data is generated from large sample sizes. Quantitative data tells us how large numbers of visitors and potential visitors behave. It’s generated from analytics databases (like Google Analytics), trials, and AB tests.

The primary goal of evaluating quantitative data is to find where the weak points are in our funnel. The data gives us objective specifics to research further.

There are a few different types of quantitative data we’ll want to collect and review:

Backend analytics

Transactional data

User intelligence

Qualitative data is generated from individuals or small groups. It is collected through heuristic analysis, surveys, focus groups, phone or chat transcripts, and user reviews.

Qualitative data can uncover the feelings your users experience as they view a landing page and the motivations behind how they interact with your website.

Qualitative data is often self-reported data and is thus suspect. Humans are good at rationalizing how they behave in a situation. However, it is a great source of test hypotheses that can’t be discerned from quantitative behavioral data.

While quantitative data tells us what is happening in our funnel, qualitative data can tell us why visitors are behaving a certain way, giving us a better understanding of what we should test.

There are a number of tools we can use to obtain this information:

Session recording

Customer service transcripts

Interviews with sales and customer service reps

User testing, such as the 5-second test

3. Review All Website Baselines

The goal of our data collection and review process is to acquire key intelligence on each of our website “baselines”.

Sitewide Performance

Funnel Performance

Technical Errors

Customer Segments

Channel Performance

Sitewide Performance is your overall website user experience. It includes general navigation and performance across devices and browsers.

Funnel Performance deals specifically with the chain of conversion events that turns visitors into leads and then customers. It will include landing pages, optin forms, autoresponders, cart checkouts, etc.

Technical Errors are the broken parts on your website or elsewhere in the user experience. These don’t need to be optimized. They need to be fixed.

Customer Segments deals with how different key customer segments are experiencing your site. It’s important to understand the differences in how long-time users, new visitors, small-ticket buyers, and big-ticket purchasers are engaging with your site.

Channel Performance deals with how various traffic acquisition channels are converting on your site. It’s important to understand the differences between how a Facebook-driven view costing you $0.05 and an Adwords-driven view costing $3.48 are converting when they reach your site.
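To make the channel comparison concrete, the sketch below turns cost per visit and conversion counts into a cost per conversion for each channel. The two costs per visit come from the example above. The visit and conversion counts are hypothetical, chosen only to show the arithmetic.

```python
def cost_per_conversion(cost_per_visit, visits, conversions):
    """Ad spend required to produce one conversion from a channel."""
    if conversions == 0:
        return float("inf")
    return cost_per_visit * visits / conversions


# Costs per visit are from the text; visits and conversions are hypothetical.
channels = {
    "facebook": {"cost_per_visit": 0.05, "visits": 10_000, "conversions": 20},
    "adwords": {"cost_per_visit": 3.48, "visits": 1_000, "conversions": 60},
}

for name, c in channels.items():
    rate = c["conversions"] / c["visits"]
    cpa = cost_per_conversion(c["cost_per_visit"], c["visits"], c["conversions"])
    print(f"{name}: conversion rate {rate:.2%}, cost per conversion ${cpa:.2f}")
```

A cheap visit is not automatically a cheap customer. The comparison only becomes meaningful once each channel's conversion rate is factored in.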

4. Turn Data Into Optimization Hypotheses

Once you have a thorough, data-backed understanding of the target website, the next step is to design improvements that you hypothesize will outperform the current setup.

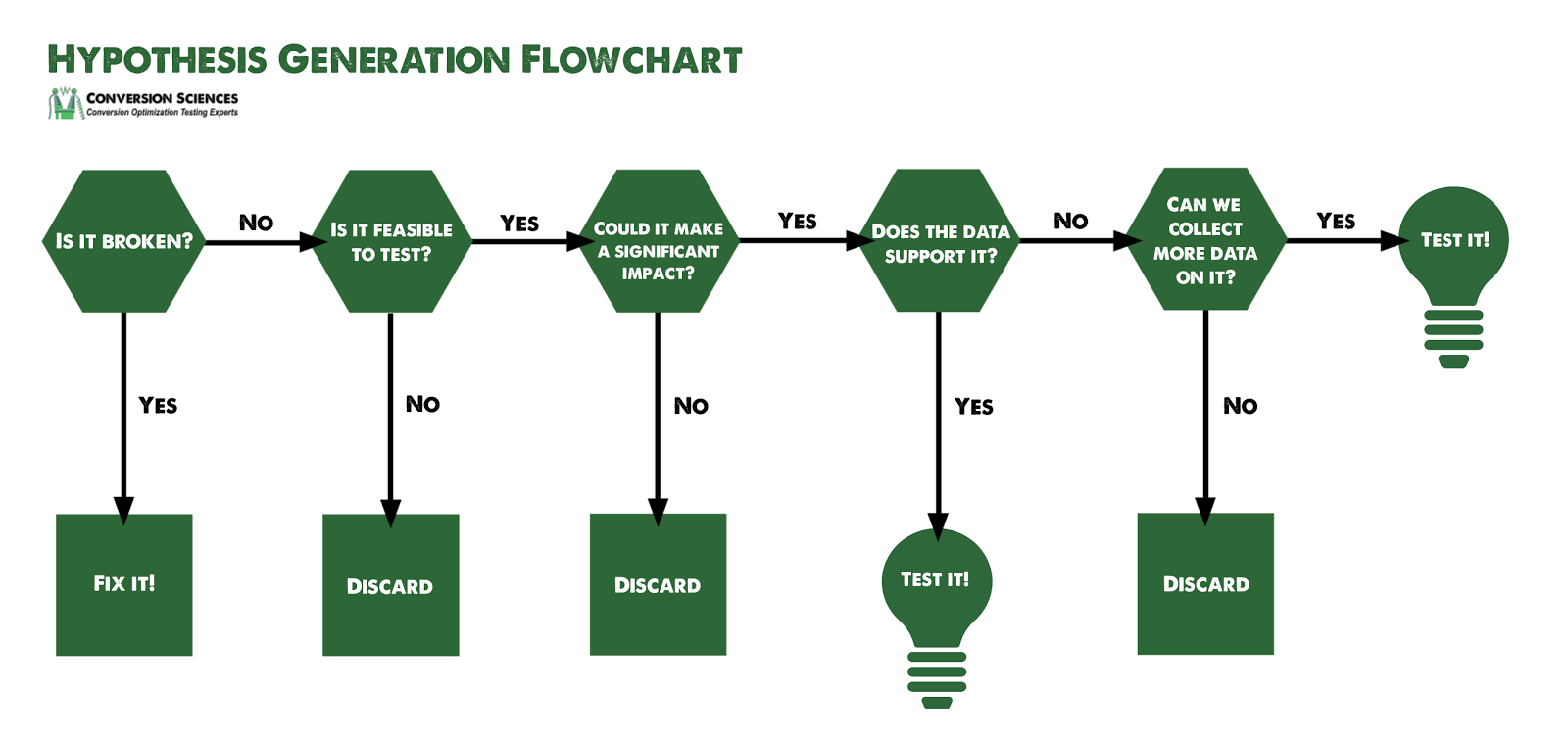

As you evaluate these changes for potential testing, run them through the following flowchart: You’ll quickly build a list of potential changes to test, and then you’ll need to prioritize them based on your overall testing strategy.

5. Develop A Testing Strategy

AB testing is a time-consuming process that consumes limited resources. You can’t test everything, so where do you focus? That will depend on your testing strategy.

Ultimately, you will need to develop a tailored strategy for the specific website you are working with and that website/business’ unique goals, but here are a few options to choose from.

Flow vs. Completions

One of the first questions you’ll have to ask is where to start. There are two broad strategies here:

Increase the flow of visits to conversion points (shopping cart, registration form, etc.)

Increase the completions (the number of visitors who finish your conversion process by buying or registering)

If you find people falling out of the top of your funnel, you may want to optimize there to get more visitors flowing into your cart or registration page. This is a flow strategy.

For a catalog ecommerce site, flow testing may occur on category or product pages. Then, tests in the shopping cart and checkout process will move faster due to the higher traffic.

Gum Trampoline Strategy

Employ the gum trampoline approach when bounce rates are high, especially from new visitors. The bounce rate is the share of visitors who arrive at a site and leave after only a few seconds. Bouncers typically see only one page.

With this strategy, you focus testing on landing pages for specific channels.
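To decide which landing pages and channels deserve attention first, it can help to break the bounce rate down by each. The sketch below assumes a simplified session record and treats any single-page session as a bounce, which is an assumption. Your analytics package may define a bounce differently. The field names and sample sessions are hypothetical.

```python
def bounce_rate(sessions):
    """Fraction of sessions that viewed exactly one page."""
    if not sessions:
        return 0.0
    bounces = sum(1 for s in sessions if s["pageviews"] == 1)
    return bounces / len(sessions)


def bounce_rate_by(sessions, key):
    """Bounce rate for each distinct value of `key` (e.g. landing page or channel)."""
    groups = {}
    for s in sessions:
        groups.setdefault(s[key], []).append(s)
    return {value: bounce_rate(group) for value, group in groups.items()}


# Hypothetical session records, for illustration only.
sessions = [
    {"landing_page": "/pricing", "channel": "email", "pageviews": 4},
    {"landing_page": "/pricing", "channel": "paid", "pageviews": 1},
    {"landing_page": "/promo", "channel": "paid", "pageviews": 1},
    {"landing_page": "/promo", "channel": "paid", "pageviews": 1},
    {"landing_page": "/promo", "channel": "email", "pageviews": 2},
]

print(bounce_rate_by(sessions, "landing_page"))
print(bounce_rate_by(sessions, "channel"))
```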

Minesweeper Strategy

This strategy is for sites that seem to be working against the visitor at every turn. We see this when visit lengths are low or people leave products in the cart at high rates.

For example, we might try to drive more visitors to the pricing page for an online product to see if that gets more of them to complete their purchase.

Big Rocks Strategy

This strategy is used for sites that have a long history of optimization and ample evidence that an important component is missing. Add fundamental components to the site in an effort to give visitors what they are looking for.

Examples of “big rocks” include ratings and reviews modules, faceted search features, recommendation engines, and live demos.

Nuclear Strategy

This strategy includes a full site redesign and might be viable if the business is either changing its backend platform or completely redoing branding for the entire company or the company’s core product.

The nuclear strategy is as destructive as it sounds and should be a last resort.

6. Design Your AB Tests

But even with the right tools, designing an AB test requires a decent amount of work on the user’s end. Tests need to be designed correctly if you want to derive any meaningful insights from the results.

One piece of this that most people are familiar with is statistical significance. Unfortunately, very few people actually understand statistical significance at the level needed to set up split tests. If you suspect that might be you, check out AB Testing Statistics: An Intuitive Guide For Non-Mathematicians.

But there’s a lot more to designing a test than just statistical significance. A well-designed AB test will include the following elements:

Duration – How long should the test run?

Goal – What are we trying to increase?

Percentage of traffic – What percentage of our traffic will see the test?

Targeting – Who will be entered into the test?

Treatment Design – The creative for the test treatments.

Test Code – Moves things around on the page for each treatment.

Approval – Internal approval of the test and approach.

Tests should be set up to run for a predetermined length of time that incorporates the full cycle of visitor behavior. A runtime of one calendar month is a good rule of thumb.

Test goals, targeting, and display percentages should all be accounted for.
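One common way to predetermine the run length is to estimate the sample size each variation needs and convert that into whole weeks of traffic. The sketch below uses the standard normal-approximation formula for comparing two proportions. The baseline rate, the lift we hope to detect, the significance level, the power, and the weekly traffic are all assumptions chosen for illustration, not recommendations from this framework.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variation to detect the given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def weeks_needed(visitors_per_variation, weekly_visitors, variations=2):
    """Whole weeks required when traffic is split evenly across variations."""
    per_variation_per_week = weekly_visitors / variations
    return ceil(visitors_per_variation / per_variation_per_week)


# Hypothetical inputs: 3% baseline conversion rate, hoping to detect a 20% relative lift.
n = sample_size_per_variation(baseline_rate=0.03, relative_lift=0.20)
print(f"visitors per variation: {n}")
print(f"weeks at 5,000 visitors per week: {weeks_needed(n, weekly_visitors=5_000)}")
```

Rounding up to whole weeks keeps every day of the week equally represented, which is one simple way to respect the full cycle of visitor behavior.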

Once the test is designed properly, it’s finally time to actually run it.

7. Run & Monitor Your AB Tests

Running an AB test isn’t as simple as clicking “Run” on your split testing software. Two critical things need to happen once the test begins displaying page variations to new visitors.

Monitor initial data to make sure everything is running correctly

Run quality assurance throughout the testing period

Once the test begins, it’s important to monitor conversion data throughout the funnel, watch for anomalies, and make sure nothing is set up incorrectly. You are running your tests on live traffic, after all, and any mistake that isn’t quickly caught could result in massive revenue loss for the website being tested.

As the tests run, we want to monitor a number of things. For instance:

Statistical significance

Progression throughout the test

Tendency for inflated testing results

Quality of new leads

Conversion rate vs. revenue

Statistical significance is the first thing we have to look at. A statistically insignificant lift is not a lift. It’s nothing.
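One common way to check significance for conversion rates is a two-proportion z-test. The following is a minimal sketch of that test, not necessarily the calculation your testing tool performs. The visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: control converts 200 of 10,000; variant converts 260 of 10,000.
z, p_value = two_proportion_z_test(200, 10_000, 260, 10_000)
relative_lift = (260 / 10_000) / (200 / 10_000) - 1
print(f"lift: {relative_lift:.0%}, z = {z:.2f}, p = {p_value:.4f}")
```

A p-value below the threshold you chose before the test (0.05 is a common convention) is what "statistically significant" usually means here.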

But even if our results are significant, we still have to look at the progression of data throughout the testing process. Did the variant’s conversion rate stay consistently higher than the control? Or did it oscillate above and below the control? If the data is still oscillating at the end of the test period, we might need to continue testing, even if our software is telling us the results are statistically significant.
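A simple way to look at progression is to track each variation's cumulative conversion rate day by day and count how often the lead changes hands. This is only a sketch of one possible check. The daily counts are hypothetical, and it assumes every day has at least one visitor.

```python
from itertools import accumulate


def cumulative_rates(daily_conversions, daily_visitors):
    """Cumulative conversion rate at the end of each day."""
    conversions = accumulate(daily_conversions)
    visitors = accumulate(daily_visitors)
    return [c / v for c, v in zip(conversions, visitors)]


def lead_changes(control_rates, variant_rates):
    """Number of times the variant's cumulative rate crosses the control's."""
    signs = [1 if v > c else -1 for c, v in zip(control_rates, variant_rates) if v != c]
    return sum(1 for prev, cur in zip(signs, signs[1:]) if prev != cur)


# Hypothetical daily counts for one week, for illustration only.
visitors = [500, 480, 510, 495, 505, 300, 290]
control = cumulative_rates([10, 9, 11, 10, 10, 6, 6], visitors)
variant = cumulative_rates([12, 8, 13, 12, 13, 8, 7], visitors)

print(f"lead changes during the test: {lead_changes(control, variant)}")
```

Many lead changes late in the test would be a hint that the result is still oscillating, whatever the significance figure says.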

It’s also important to understand that any lift experienced in testing will almost always be overstated. On average, if a change creates a 30% lift in testing, the actual lift is closer to 10%.

Finally, it’s helpful to run quality assurance throughout the test period, ensuring that split tests are displaying properly across various devices and browsers. Try to break the site again, like you did during the initial site audit, and make sure everything is working.

Once the tests have run through the predetermined ending point, it’s time to review the results.

8. Assess Test Results

Remember that an AB test is just a data collection activity. Now that we’ve collected some data, let’s put that information to work for us.

The first question that will be on our lips is, “Did any of our variations win?” We all love to win.

There are two possible outcomes when we examine the results of an AB test.

The test was inconclusive. None of the alternatives beat the control. The null hypothesis was not disproven.

One or more of the treatments beat the control in a statistically significant way.

In the case of an inconclusive test, we want to look at individual segments of traffic. How are specific segments of users engaging with the control versus the variant? Some of the most profitable insights can come from failed tests.

Segments to compare and contrast include:

Return visitors vs. New visitors

Chrome browsers vs. Safari browsers vs. Internet Explorer vs. …

Organic traffic vs. paid traffic vs. referral traffic

Email traffic vs. social media traffic

Buyers of premium products vs. non-premium buyers

Home page visitors vs. internal entrants

These segments will be different for each business, but they’ll provide insights that spawn new hypotheses or even provide ways to personalize the experience.
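A segment comparison like this can be sketched in a few lines once the test data is available in analytics. The example below computes the conversion rate for every segment and variation pair. The session fields and counts are hypothetical and stand in for whatever your analytics export provides.

```python
from collections import defaultdict


def conversion_by_segment(sessions, segment_key):
    """Conversion rate for each (segment, variation) pair."""
    counts = defaultdict(lambda: [0, 0])  # [conversions, sessions]
    for s in sessions:
        bucket = counts[(s[segment_key], s["variation"])]
        bucket[0] += 1 if s["converted"] else 0
        bucket[1] += 1
    return {key: conv / total for key, (conv, total) in counts.items()}


def make_sessions(browser, variation, conversions, total):
    """Build hypothetical session records, for illustration only."""
    return [
        {"browser": browser, "variation": variation, "converted": i < conversions}
        for i in range(total)
    ]


sessions = (
    make_sessions("Chrome", "Control", 5, 100)
    + make_sessions("Chrome", "A", 6, 100)
    + make_sessions("Internet Explorer", "Control", 5, 100)
    + make_sessions("Internet Explorer", "A", 2, 100)
)

for (browser, variation), rate in sorted(conversion_by_segment(sessions, "browser").items()):
    print(f"{browser:<18} {variation:<8} {rate:.1%}")
```

A segment that lags in one variation but not in the control, as Internet Explorer does in this made-up data, is worth a second look before the test is written off. Keep in mind that small segments produce noisy rates.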

In the case of a statistical increase in conversion rate, it’s very important to analyze the quality of new conversions. It’s easy to increase conversions, but are these new conversions buying as much as the ones who saw the control?
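One way to check conversion quality is to compare revenue per visitor alongside conversion rate. In the sketch below, a variation wins on conversion rate while losing on revenue per visitor. The visitor counts and order values are hypothetical.

```python
def summarize(visitors, order_values):
    """Conversion rate, average order value, and revenue per visitor for one variation."""
    conversions = len(order_values)
    revenue = sum(order_values)
    return {
        "conversion_rate": conversions / visitors,
        "average_order_value": revenue / conversions if conversions else 0.0,
        "revenue_per_visitor": revenue / visitors,
    }


# Hypothetical results: the variant converts more visitors, but at smaller order sizes.
control = summarize(visitors=1_000, order_values=[100.0] * 20)
variant = summarize(visitors=1_000, order_values=[60.0] * 30)

for name, stats in (("control", control), ("variant", variant)):
    print(
        f"{name}: conversion rate {stats['conversion_rate']:.1%}, "
        f"revenue per visitor ${stats['revenue_per_visitor']:.2f}"
    )
```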

Ultimately, we want to answer the question, “Why?” Why did one variation win, and what does it tell us about our visitors? This is a collaborative process and speculative in nature. Asking why has two primary effects:

It develops new hypotheses for testing

It causes us to rearrange the hypothesis list based on new information

Our goal is to learn as we test, and asking “Why?” is the best way to cement our learnings.

9. Implement Results: Harvesting

This is the step in which we harvest our winning increases in conversion, and we want to get these changes rolled out onto the site as quickly as possible. The strategy for this is typically as follows:

Document the changes to be made and give them to IT.

IT will schedule the changes for a future sprint or release.

Drive 100% of traffic to the winning variation using the AB testing tool. We call this a “routing test.”

When the change is released to the site by IT, turn off the routing test.

It is not unusual for us to create a new routing test so that we can archive the results of the AB test for future reference. As another consideration, beware of having too many routing tests running on your site. We’ve found that some smaller businesses rely on the routing tests to modify their site and have dozens of routing tests running. This can cause a myriad of problems.

In one case, a client made a change to the site header and forgot to include the code that enabled the AB testing tool. All routing tests were immediately turned off because the testing tool wasn’t integrated.

Conversion rates plummeted until the code was added to the site. In one sense, this is a validation of the testing process. We’ve dubbed it a “Light Switch” test.

Conclusion

This is the framework CRO professionals use to consistently generate conversion lifts for their clients using AB testing.

Here are six different ways to AB test content elements and the things you should be measuring.

There is a critical part of your sales funnel that probably isn’t optimized.

When you think about CRO, you think about optimizing your online funnel – your emails, landing pages, checkout process, etc. – in order to acquire more customers.

What you don’t often think about is AB testing your content.

In fact, when it comes to content driven marketing, we rarely see the same commitment to testing, tracking, and optimizing that occurs elsewhere in marketing. Considering that content is found at the top of your sales funnel, the wrong content could be hurting your conversion rates.

Content can be tested in the same way anything else can be tested, and some elements definitely deserve a more CRO-esque approach.

Goals for AB Testing Content

One of the reasons that content is less frequently tested is that the goals are often unclear.

Content is great for SEO.

Content is great for educating clients.

Content is great for establishing your brand’s thought leadership.

Content is great for sharing on social media.

Content is also great for getting prospects into the sales funnel. This is typically done by collecting an email address to begin the conversation.

Here are the 6 different elements you should definitely consider testing. You can run any of these tests using these recommended AB testing tools, but I’ve also included some simple WordPress plugins as a viable alternative if you want to try this on a small budget.

1. Split Test Your Headlines

Your headline is arguably the most important piece of your content. It’s the thing that determines whether people click through from your email or social media post.

On average, 80% of your readers never make it past the headline.

Yup, only 2 in every 10 of your readers actually read past the headline. Even fewer make it all the way through the article.

Funny enough, it’s also one of the simplest things to test. It’s so easy.

You already know how to run an AB test. Applying that practice to your headlines is a simple 4-step process.

1. Brainstorm headlines to test.

Stephanie Flaxman of CopyBlogger says you should ask yourself three questions to make your headline the best it can be:

Who will benefit from this content?

How do I help them?

What makes this content special?

Use your answer to those three questions to craft a headline that will demand viewer attention and channel readers to your content.

But don’t get too excited – The first headline you come up with will probably suck. Or maybe it will just be mediocre.

The whole point of AB testing is that you don’t have to come up with THE perfect headline. You simply need to come up with a variety of solid options, and then you can see what performs best.

This is why I recommend creating a list of 5-10 possible headlines.

Next, pick your two favorites and move on to step #2.

2. Send both versions to a live audience.

Now it’s time to test the headlines. You want to show one headline to 50% of your traffic and the other headline to the other 50%.

How you accomplish this will depend on how you acquire traffic.

For example, if you primarily drive traffic to your new blog posts via an email list, create your email and then send half of your subscribers the email using one headline and the other half the same email but using the alternate headline.

If you promote via social media, try posting at different times or across different channels using the alternate headlines and see what happens.

If you promote via paid channels, simply create two ads, using a different headline for each, and set up a normal AB test using proper statistical analysis.
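For the email case, the split should be random so that neither headline gets a more engaged half of the list. Most email tools offer a built-in split. If you have to do it by hand, a minimal sketch might look like this. The subscriber addresses are hypothetical, and the fixed seed is only there to make the split repeatable.

```python
import random


def split_in_half(subscribers, seed=42):
    """Randomly split a subscriber list into two groups of (nearly) equal size."""
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]


# Hypothetical subscriber list, for illustration only.
subscribers = [f"reader{i}@example.com" for i in range(1, 11)]
headline_a_group, headline_b_group = split_in_half(subscribers)

print(f"headline A goes to {len(headline_a_group)} subscribers")
print(f"headline B goes to {len(headline_b_group)} subscribers")
```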

Once you’ve run your tests, it’s time to review the data.

3. Analyze the results.

Which headline performed the best?

If your traffic is too low to get statistically significant results, it’s still worth running the tests. Your initial readers typically come from your email list or your most active social followers – aka the people most likely to share your content. Getting a feel for what they respond to is always worthwhile, and you might notice certain trends over time.

4. Implement the one with the most clicks.

Once you have your winner, simply set it as your permanent headline. That’s all there is to it.

But your headline isn’t the only thing that gets people to click.

2. Split Test Your Featured Images

Content Marketing Institute, the industry leader in content marketing, found that “ad engagement increased by 65% when a new image was tested versus simply testing new copy.”

Brian Massey summarizes it well here, “Spend as much time on your images as your copy.”

Whether you’re using paid ads in your content marketing strategy or not, the image matters almost as much as the headline (maybe more).

So, how does one select the right featured image?

There is some science behind choosing a featured image. If you think about it, picking one image is harder than picking several. So, pick a couple and let your test results decide for you.

Here are three keys that will help guide your selection.

1. Pick something compelling

Your image should relate to whatever your article is about. That said, being relevant is pretty ambiguous.

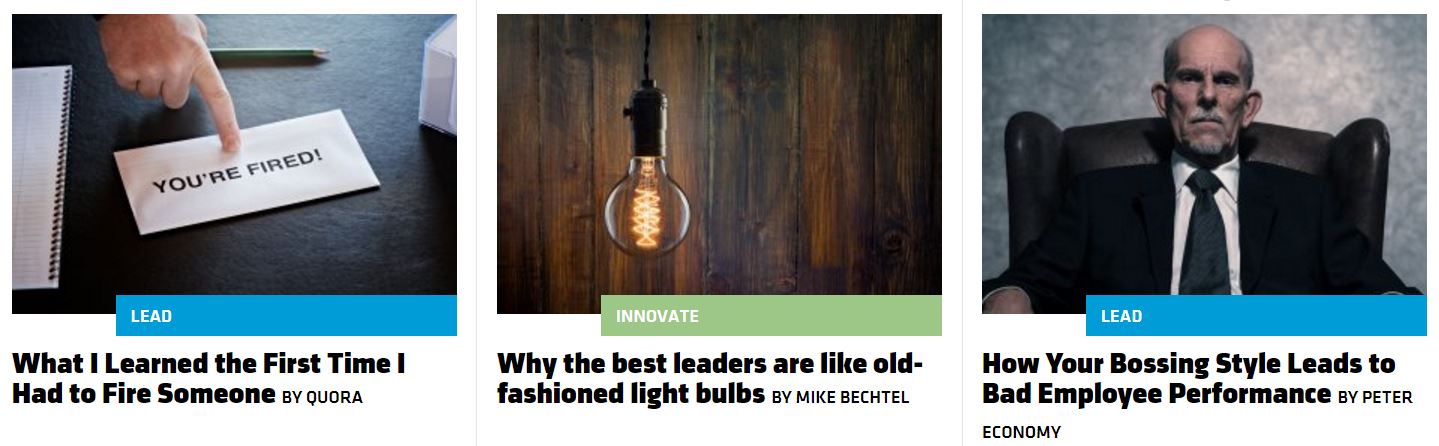

The image on this article from Inc is not directly relevant to the content, but our brains are designed to make connections.

As long as you can relate it in some way, you’re probably OK, but you want your image to be compelling. Not any relevant image will do. Roy H. Williams, director of the business school The Wizard Academy, outlines a number of techniques that make images compelling.

Silhouettes: We tend to fill in silhouettes with ourselves or our aspirational dreams.

Portals: Our attention is drawn into doorways, tunnels, windows and openings.

Cropped Images: When we are only given a piece of the image, we fill in the missing parts.

Faces: We stare at the human face. This can work against our headlines. Pro tip: If you use a human face, have them looking at your headline for best results.

The above image may not be highly relevant, but its use of a silhouette is compelling.

2. Make sure it is relevant to the post

Your headline and featured image should work together to be both relevant and compelling.

Let’s look at some other examples from Inc.

Do you see how they combine relevant images with compelling headlines? It makes it hard not to click on the article.

Finally, the third important factor to consider when choosing an image is…

3. Always use high-quality images

I know you already know this, but I wanted to remind you. Nothing grinds my gears more than a blog post with a terrible, grainy image.

Once you’ve chosen your images, go ahead and start splitting your traffic.

Now you know how to optimize individual posts for conversions, but what about a more general approach to your overall content marketing strategy?

The next element you should be testing is content length.

3. Find Your Ideal Content Length

Now we’re getting into the overall content creation process. Testing your ideal content length will help you create a content template for all your articles going forward.

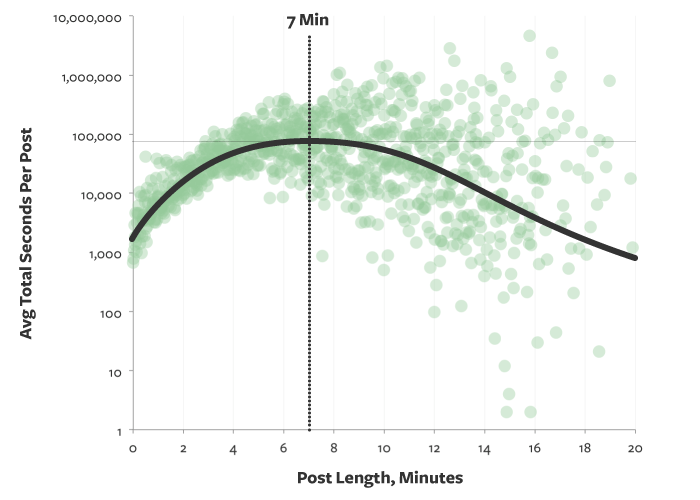

According to a study done by Medium, the current ideal content length is about 1,600 words; or, around a 7-minute read.

However, this may not be the case for you.

Yes, the average posts that get the most shares are long, in-depth posts. But that doesn’t mean shorter posts don’t get shares as well. And more importantly, that doesn’t mean shorter posts won’t do a better job of driving qualified leads to your business.

The only way to know the optimum length of posts for your audience is to test it. In order to test the ideal length, you can take two different approaches.

The first and simplest option is to try a variety of content lengths over time and look for trends. You could publish a 1,500-word post one week, a 400-word post the next week, a 4,000-word guide the following week, and an infographic the 4th week. Rinse and repeat. You should be testing out different content types anyway, and incorporating varying lengths of content within that schedule won’t require much more effort on your part.

The data you want to measure — time on page — is found easily in Google Analytics. This is a free analytics tool that any content marketer should become familiar with.
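Once you have a few weeks of posts, looking for a trend can be as simple as grouping them by length and averaging time on page. The sketch below assumes you have exported word counts and average time on page by hand. The bucket thresholds, field names, and numbers are all hypothetical.

```python
from statistics import mean


def length_bucket(word_count):
    """Assign a post to a length bucket; the thresholds here are arbitrary."""
    if word_count < 800:
        return "short"
    if word_count < 2000:
        return "medium"
    return "long"


def average_time_by_length(posts):
    """Average time on page (seconds) for each length bucket."""
    buckets = {}
    for post in posts:
        buckets.setdefault(length_bucket(post["words"]), []).append(post["seconds_on_page"])
    return {bucket: mean(times) for bucket, times in buckets.items()}


# Hypothetical posts, for illustration only.
posts = [
    {"words": 400, "seconds_on_page": 55},
    {"words": 1500, "seconds_on_page": 190},
    {"words": 4000, "seconds_on_page": 310},
    {"words": 450, "seconds_on_page": 65},
    {"words": 1600, "seconds_on_page": 210},
]

print(average_time_by_length(posts))
```

Longer posts will naturally hold readers longer, so time on page is best read next to the metric you actually care about, such as leads or shares per post.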

The second option is to split test a single post by sending segments of users to different length versions of the same post.

In similar fashion, test video length for views and watch times to see how long your videos should be.

4. Take Your Opt-in Forms Seriously

Opt-in or signup forms are a critical part of content marketing and sales funnels. It’s important that they are converting at the highest rate possible.

So what parts of your opt-in form can you test?

First, test the form length.

I’ve seen forms that ask for the whole shebang; everything from your full name to your phone number and more.

Believe it or not, this can work. Just take HubSpot for example. They have a ridiculous amount of free downloads, from templates to eBooks, and every one of them comes with a form like this:

HubSpot Form.

I put three pages into one image because it was too big to fit in one screenshot!

Here’s the kicker: They see tremendous success with this behemoth of a form. I’ve personally filled out at least a half dozen of their forms like this for free downloads.

So, what’s the ideal form length?

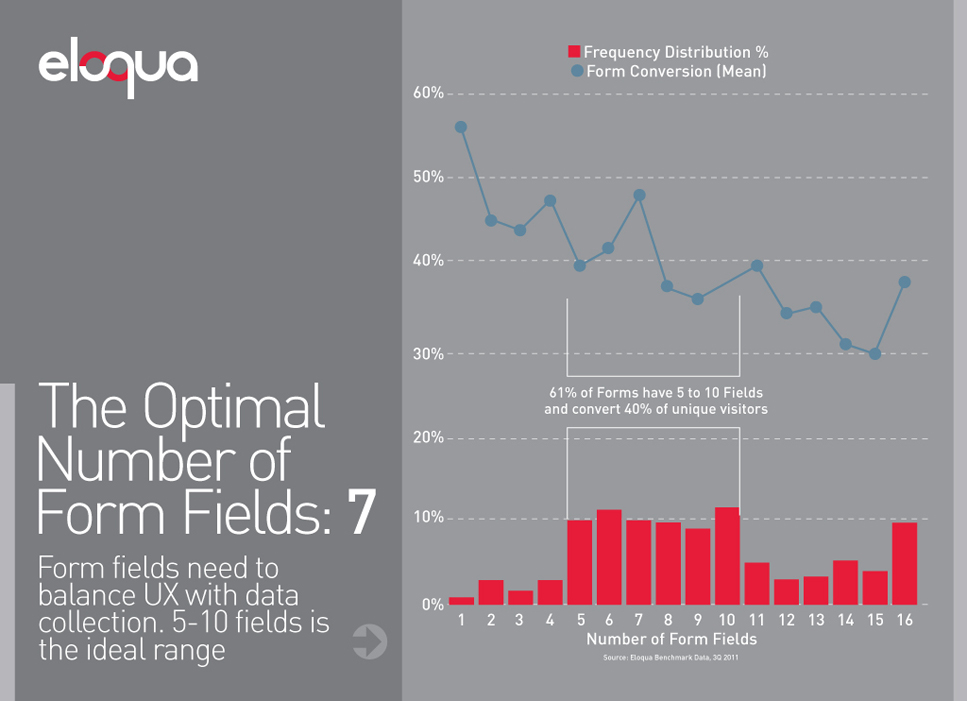

Well, take a look at this chart by Eloqua.

It seems the optimal number of fields is 7 because you’re getting the most information with the least drop off in conversions.

That said, you can potentially get close to a 60% conversion rate when asking for only a single piece of information.

Oddly enough, the data above suggest that having 7 form fields is better than having only 2. While this is just one study, it could mean that you’ve been asking for too little information and might want to revisit your opt-in forms.

Again, it’s all about testing.

In general, the more form fields you have, the lower your conversion rate will be, but the higher the quality of your list will be.

Once you’ve determined the optimal number of form fields, it’s time to test where the form sits on the page.

Typically, forms are placed in one of three locations:

At the top, to clearly indicate that visitors must complete a form.

At the bottom, so that they can take action after consuming your content.

In the sidebar, which is where readers look when they want to subscribe.

Where you place your offers is as important as the length of your forms.

Try multiple locations. Personally, I like to include one in the sidebar, one on exit intent, and one either in the middle of or at the end of my content.

Don’t overwhelm your visitors with too many choices. If you have four different opt-ins, some calls to action, related posts, and other things to click on, they may just leave your page altogether.

5. Split Test Your CTAs

Whenever you create a piece of content on your website, be it a blog post, a landing page, or even an about page, you should always ask yourself this question:

What do we want our readers to do after reading this content?

In other words, “Where are we sending them next?”

A lot of people have no idea how to answer that question. I mean, it’s not obvious – especially when you have a lot of content you could send them to.

You might have any one (or more) of these CTAs in your content:

A lead magnet

Related blog posts

A “start here” page

A sales pitch/landing page

An initial consultation call

A content upgrade

An email subscription

How do you know where to send them?

The answer: Send them to the next stage in your funnel.

Depending on your marketing strategy, this might mean immediately collecting a lead, or it could be something else.

Let me give you an example. ChannelApe provides integrations between the systems ecommerce websites use to run their business. ChannelApe offers a free trial for their automatic supplier integration as the next step for anyone reading their list of dropshippers.

This makes sense because anyone interested in a list of dropshippers is probably also interested in integrating those dropshippers’ products with their store.

Notice how ChannelApe uses a bright orange background to help their CTA stand out from the rest of their content. Color is only one of the variables you should test on your CTAs.

In addition to CTA colors, you can also test:

Copy

Images

Offers

Positions

OK, let’s say you want to test the position of your related posts.

I know what you’re thinking.

“Bill, wouldn’t I just put related posts at the end of a blog post?”

Maybe. But what if your readers aren’t getting to the end? You don’t want them to leave, do you?

For that matter… what’s “related”? Are you tagging your posts and pages properly?

And what about the posts getting the most interaction? Don’t you think your readers would like to see those?

Or do you want to drive traffic to certain pages over others, like a “start here” page or a new blog series?

Do you see where I’m going with this?

The process of CRO, be it in your content marketing campaigns, your landing pages, or anywhere else, involves asking yourself questions about your readers in order to better understand how to help them.

Simply repeat this process of asking questions for every variable you may want to include, then put your answers to the test.

Conclusion: AB Test Your Content

Let’s recap:

You want your headlines and featured images to be relevant and compelling.

The “ideal” content length is 1,600 words, but you shouldn’t blindly follow that number.

The position and length of opt-in forms matter.

Always know where you want your visitors to go next in order to effectively use CTAs.

If there’s one thing you should take away from this post, it’s this:

The performance of your content is no less important than any other stage in your funnel. Always test the elements of your content by asking yourself relevant questions about your readers.

Have you ever tried to split test elements of your content before? I’d love to hear. Leave a comment below and let me know!

Bill Widmer is a freelance writer and content marketer. With over two years of experience, Bill can help you get the most out of your content marketing and blog.

10 successful value proposition examples proven by AB testing.

Conversion Sciences has completed thousands of tests on websites of all kinds for businesses of all sizes. At times, we’ve been under pressure to show results quickly. When we want to place a bet on what to test, where do we turn?

Copy and images. These are the primary components of a website’s value proposition.

Your value proposition is the #1 factor determining your conversion rate. If you deliver a poor value proposition, there is little we can do to optimize. If you nail it, we can optimize a site to new heights.

So, I have to ask: have you ever taken the time to split test your value proposition?

This article shows you how to identify a poor value proposition, hypothesize a series of better alternatives, and split test them to identify the winning combination of copy, video and images.

Essential Qualities Of A Worthwhile Value Proposition

Your value proposition is the promise you make to prospective customers about the unique value your business will deliver to them.

Your value proposition is a statement, which can be made up of the following elements:

Headline

Subheadline

Copy

Bullet points

Images or Graphics

Video

Words carry tremendous power, but they aren’t the only element you can employ in promising defined value to potential customers. A value proposition can be made up of any of the above elements, as well as others I’ve no doubt failed to mention.

To be effective, your value proposition should include the following characteristics:

Conveys a clear, easily understood message

Speaks to the unique value your business provides

Explicitly targets a specific audience segment

Makes a clear promise regarding the benefits being delivered

Hopefully, these criteria are making you aware of what your value proposition is not. It is not a slogan, creative phrase, or teaser.

The best way to demonstrate this is to show you some real examples of businesses that improved their conversion rates by upgrading their value propositions.

Let’s get started.

Example #1: Groove Increases Conversions By 104%

Groove is simple help desk software. It’s a streamlined product designed to help smaller teams provide personalized customer support without learning and configuring something more complicated like Zendesk.

Groove SaaS and eCommerce Customer Support Value Proposition

After reaching out to several experts for help, they received the following advice:

“You’re talking to your customers the way you think marketers are supposed to talk. It’s marketing-speak, and people hate that… you should talk like your customers do”

With this in mind, the Groove team spent some time talking to various customers over the phone in order to get a feel for how those customers were talking about Groove and the actual words they were using.

They also changed their opening autoresponder email to the following, which ended up generating an astounding 41% response rate and becoming a prime, continuous source of qualitative data for the business:

Groove welcome email established their value proposition.

As a result of this feedback, they created a new “copy first” landing page, with a completely revamped value proposition.

Groove created a ‘copy first’ landing page based on feedback from customers

After testing the new page against the original, Groove found that it converted at 4.3% for an 87% improvement. After running additional tests with more minor tweaks over the next two weeks, the conversion rate ultimately settled at 4.7%, bringing the total improvement to 104%.
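The original page's conversion rate isn't stated here, but the numbers above are enough to back it out and check that the two improvement figures agree. The sketch below is my own arithmetic, not something from Groove, and the function names are made up for illustration.

```python
def implied_baseline(new_rate: float, improvement: float) -> float:
    """Back out the original rate from a new rate and its relative improvement."""
    return new_rate / (1 + improvement)


def relative_lift(baseline: float, new_rate: float) -> float:
    """Relative improvement of new_rate over baseline, as a fraction."""
    return (new_rate - baseline) / baseline


# 4.3% was reported as an 87% improvement, so the original page
# must have converted at roughly 4.3% / 1.87.
baseline = implied_baseline(0.043, 0.87)

# Check the final figure: 4.7% measured against that same baseline.
final_lift = relative_lift(baseline, 0.047)

print(f"Implied original rate: {baseline:.1%}")
print(f"Total improvement at 4.7%: {final_lift:.0%}")
```

The implied starting point works out to about 2.3%, and 4.7% against that baseline comes to roughly 104%, so the two reported improvements are consistent with each other.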

Key Takeaways

So what can we learn from Groove’s big win?

Benefit-driven headlines perform better than headlines simply stating the product category.

The subheading is not a good place for a testimonial. You need to explain your value before you bring in proof to verify your claims.

Notice how the new headline explains a bit of the “how and what” while still keeping the customer in focus.

While Groove doesn’t explicitly define the target audience within the headline and subheading, they do accomplish this via the above-the-fold bullet point and video testimonial.

Example #2: Comnio Increases Signups By 408%

Comnio is a reputation management company that helps both consumers and businesses resolve customer service issues.

After transitioning away from a moderately NSFW branding strategy, the company needed a new way to communicate its value and attract users. After the page below failed to convert, they contacted Conversion Sciences’ Brian Massey for a CRO strategy consultation.

“My recommendations were to focus on the company less and on what will happen more and to use a hero image that is more relevant. By September 2015, the homepage was taking a different approach, focusing on the service value and defining the steps that make it work.”

Comnio’s new landing page performed at a high rate

This new page was a definite improvement over the previous version, and over the next 30 days, it converted a respectable 3.6% of site visits.

That said, there were still some clear problems, the most obvious being that the opening headline and subheadline were failing to make a clear promise. In order to optimize this page, Comnio implemented the following changes:

Changed the headline to explain what they do (as a benefit, not a feature)

Changed the subheadline to explain the pains/problems Comnio solves for users

Changed the email field placeholder text from “Email address” to “Enter your email address”

Changed the CTA button from “Sign up for free” to “Try Comnio For Free”

Added social sign-up options

Swapped out the position of company logos with the position of user testimonials

Added a gradient line below the hero shot to separate it from the rest of the page

The new page looked like this:

Comnio further refined the landing page with a significantly higher conversion rate

Thanks in large part to a strong headline, this new page converted at an incredible 18.3% over its 30-day test, a 408% increase over the previous version.

It’s also worth noting that 49% of new signups used one of the social signup options available on the new page.
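A quick note on the 408% figure: it is a relative increase, not a difference in percentage points, and the two are easy to mix up when reading test results. Here is a small sketch of the arithmetic using the two conversion rates quoted above. The variable names are mine.

```python
old_rate = 0.036  # previous page: 3.6% of site visits
new_rate = 0.183  # new page: 18.3% over its 30-day test

# Difference in percentage points: simple subtraction.
point_difference = (new_rate - old_rate) * 100

# Relative increase: the change measured against the old rate.
relative_increase = (new_rate - old_rate) / old_rate * 100

print(f"Percentage-point difference: {point_difference:.1f} points")
print(f"Relative increase: {relative_increase:.0f}%")
```

The new page converts about 14.7 points higher, which is roughly 408% more than the old rate. When you report your own test results, say which of the two you mean.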

Key Takeaways

So what can we learn from Comnio’s huge conversion spike? Whenever this many changes are implemented in one test, it hurts our ability to make specific conclusions, but here’s what I’m seeing:

The new headline isn’t cute, catchy, or cool. It’s a simple, definitive statement, and that’s exactly why it works so well.

Directly addressing emotional customer pain points (no waiting, no repeating yourself) within your value proposition can have a MASSIVE impact on your conversion rate.

Signup friction can significantly decrease your conversion rate. Considering that about half of the signups on the new page occurred via the social buttons, it would make sense to assume this feature was a big part of the conversion boost.

Brian also noted that the social signup buttons themselves could have served as social proof, borrowing trust from Facebook and Twitter.

Example #3: Udemy Increases Clicks By 246%

Udemy is a massive marketplace for online courses on everything you can imagine.

And while the company’s meteoric growth is certainly a testament to their product-market fit and understanding of their own value proposition, until somewhat recently, the individual course pages were very poorly optimized.

Until this past year, Udemy course pages looked like this:

Udemy landing page that needed higher conversion rates

If I’m trying to sell my course via this page, there are a number of major problems diminishing my conversion rate.

Udemy is essentially stealing the headline of the page with its bold “You can learn anything…” banner. If I’m on this page, I either clicked here through a direct link or through Udemy’s browser, and in neither case does it make sense to tell me about Udemy’s 10,000 courses.

With 3 columns, I have no clue where to look first. Where is the value proposition?

I can barely even tell the green rectangle on the right is supposed to be a CTA button.

While Vanessa’s course does have a value proposition, it certainly isn’t laid out in a way that makes it defined or obvious.

Udemy redesigned landing page employing user testing