Last year, Conversion Sciences and I teamed up to prove that Austin, Texas, is the Conversion Capital of the World.

While some may disagree, evidence still points to this unique claim to fame for the capital city of Texas.

For those who aren’t sure where Austin is, we’re providing a map.

Conversion Optimization Experts and Austin: Weirdly Connected

At first glance, conversion optimizers can seem like an elite group. Even for marketers who know they should be testing, the practice of hypothesizing and measuring feels like it should be reserved for brainy number-nerds.

But if you look closely, there’s an incredible diversity among conversion experts.

True, some of them were doing advanced calculations before most of us could count, but many got their start in unlikely places: advertising, SEO and even the golf course.

What’s most interesting is that they seem to be drawn to this one location: a cluster of entrepreneurial, music-loving, taco-eating, results-oriented marketers.

Noah Kagan, a transplant from Silicon Valley, explains the phenomenon in this TechCrunch video. He says people come to visit and realize, “Wow! This is a great culture: good lifestyle, affordable, the people are friendly…”

That explains why they’re drawn here, but one of the reasons they stay (and flourish) is the very thing that makes Austin unique.

The city’s tag line is “Keep Austin weird.” Its hallmark: diversity. This is a place that rewards creativity and out-of-the-box thinking. So it’s the perfect place for smart people to make their mark and still enjoy life.

“In Austin, we wanna have lives,” says Noah. And apparently, a lot of smart conversion optimizers would echo that sentiment.

Like it or not, agree or not, Austin has become prime real estate for conversion rate optimizers. Last year, we asked local experts “why Austin?” This year, we decided to get a little more personal.

- What is your origin story?

- What made you finally decide that data-driven marketing was important?

- How did Austin play into that?

Here’s what they told us.

Brian Massey

I was trained as a computer scientist, and wound my way through sales and into marketing. Through this journey, data-driven marketing came pretty naturally to me. I was a marketer with a scientist’s training. I wasn’t going to settle for guessing about what worked on the Web.

In 2001 I wrote my own analytics package, which I think you can still find on SourceForge.net. However, my employers didn’t really know what to make of the marketing guy who was spending his time coding. At the time, there wasn’t a word or a discipline or an industry for what I was doing. I had a hard time describing the method to my madness.

It was a “Wizards of the Web” seminar by Bryan and Jeffrey Eisenberg (see below) that sealed it for me. They showed me how science and marketing could work as one.

Conversion optimization brought my talents and neuroses together.

In 2007, I started Conversion Sciences and began teaching the world about this new set of disciplines. Many businesses get it now, and we have been having a lot of fun understanding the crazy people they call their visitors.

How does Austin play into this? Aside from the unusual business school, the Wizard Academy here, Austin has the “Creative Class” we need to make websites awesome. When you combine low cost of living with high-value talent, it’s a great place to run a conversion optimization company.

Joel Harvey

My first experience with using data to make money came when I was ten years old.

I started collecting sports cards and fell in love with both the statistics and the potential to make money by understanding the relationship of those statistics and the market value of a particular player or card and leveraging that knowledge in trade negotiations with other collectors.

There was nothing more thrilling than using insights from data to engage in a profitable trade. By the time I lost interest in sports cards (about three years later), I had turned several hundred dollars into a collection worth several thousand dollars.

I was hooked.

A handful of years later I started building and monetizing websites. This was before Google Website Optimizer or any early A/B testing tools, but I knew that in order to maximize profits, I would need to try different types of content.

Essentially that meant running pre/post tests and keeping a sharp eye on the bottom line to see if it was moving in the right direction. I quickly realized how powerful (and profitable) even small changes to a page could be and have been hooked on optimization ever since.

How Austin plays into this: About eight years ago my digital marketing career was really starting to blossom. However, I was living in Albuquerque, New Mexico, at the time and found that there was a lack of mentors, collaborators and overall talent for me to tap into. I needed to move somewhere that had a thriving digital ecosystem and, after deep analysis, Austin topped the list. I haven’t looked back since.

Jeffrey and Bryan Eisenberg

After almost two decades in the CRO game, we moved to Austin, the city whose businesses live by the slogan “Keep Austin Weird”. The move turned out to be a catalyst for many positive changes, personally and professionally.

Austin has truly become the CRO capital of the world. It is full of brilliant CRO practitioners. Over beers a few years ago, our colleagues inspired us to look for a better solution to shared frustrations.

“Keep Austin Weird” had been started to promote local businesses, those who deeply understand and strive to delight Austin’s population. These businesses are fiercely committed to succeeding despite the advantages offered by the homogeneity of large national chains.

Bryan and I have worked together with a prestigious list of blue-chip clients to achieve well over $2 billion in CRO wins. We have trained thousands of people in CRO and written several books, including two New York Times best sellers about CRO.

Nevertheless, we share the frustration that CRO, as too many companies practice it today, is a dead end.

It was in Austin that we shifted our focus away from traditional CRO. We pioneered CRO as a study of tactics to increase conversions. Unlike “Keep Austin Weird,” our early efforts lacked the “soul” of marketing, which we later identified: the key to conversion has always been delighted customers.

In the past we trained and encouraged clients to become experts in many of the CRO disciplines. They got great results! The trouble was, no single siloed discipline was a decisive factor in consistently achieving better results.

We reflected on our nearly twenty years of CRO work. Some companies effortlessly adopted a culture of optimization. Others achieved wins but failed to absorb the lessons learned. Their CRO efforts were after-the-fact fixes, the dead end of many of today’s traditional CRO efforts.

Those companies that excelled evolved a culture of customer-centricity—not at the manager or director level, but in the C-suite. The best companies absorbed CRO learnings and incorporated them into operational changes. That made them superior, not just at fixing, but at creating relevant customer experiences, including marketing campaigns, customer flows and meaningful content.

One of the privileges of working through CRO challenges with diverse organizations over the years was learning that no matter the industry, business size or product, the only story that truly matters is the story that your delighted customers tell about your company—not the one you tell your customer.

We started BuyerLegends.com in the great weird city of Austin to help companies learn how to use the soulful art of storytelling to create customer-centered, data-driven customer experience design, supported by the only story that matters.

Peep Laja

I used to run an SEO agency. When I got my clients to rank #1 for a number of keywords—which, by the way, wasn’t very hard in 2007—I noticed they weren’t necessarily making more money.

That was clue #1.

Then I did a bunch of direct response marketing for information products.

I learned that whoever converts the best can spend the most on traffic.

That was clue #2.

My last SaaS startup failed, I ran out of ideas, and didn’t have a structured process to grow the business. I felt there must be a better way to build businesses. That’s when I discovered Lean Startup.

That was clue #3.

While all of that was taking place, I fell in love with a girl from Austin and ended up moving here. When I started to self-identify as a conversion guy (~2011), I noticed I was surrounded by others who do the same. Total coincidence.

Brett Hurt

My parents were retailers from the time I was born, and my dad was a direct marketer and inventor of the first halogen fishing light. I grew up working in my parents’ store. I also grew up as a programmer, starting at age 7 and programming over 40 hours a week from age 7 to 21.

In 1998, my wife and I launched an online retailer named BodyMatrix, leveraging an eCommerce platform that I had built from scratch over the summer (in between school years at Wharton, where I was earning my MBA). We were thrilled to be getting orders all over the world, from US military bases to the UK.

But we were flying blind. Working in my parents’ stores and helping my dad market his products had gotten me used to understanding customer behavior. I had written my Wharton leadership study on Sam Walton, based on his book Made in America. A key part of Walmart’s early success was understanding customer behavior in their stores.

With all of the advantages of the Internet, it had a huge disadvantage: You couldn’t see anything.

So I set out to build one of the world’s first Web analytics solutions.

As a student, I couldn’t afford to buy one and the cheap ones (like WebTrends) were very poor, offering only high-level page view counts. I needed to know what was happening within our online store—to at least recreate what I knew about our customers at my parents’ stores.

The lightbulb moment came when I realized I could know even more.

I told my parents how my wife and I had developed the “biggest brain” with the ability to remember all customer behavior. And it worked—we almost doubled our conversion ratio (from 2% to 3.8%) in three months.

This attracted the attention of a PhD student at Wharton who formed his dissertation on the data we had collected and how we could personalize based on it.

I thought this was novel, but I didn’t know how novel it really was. I went to friends, Wharton grads who worked at CDnow and Amazon. To my shock, they urged me to form a business around this.

That’s how Coremetrics was born. I sold my other businesses, including my Web consulting business and BodyMatrix, and put all of my chips on Coremetrics.

We moved the business to Austin, where I was able to attract many fantastic developers, product managers, and client services personnel. We built a global company, became an industry standard in retail, and today IBM owns the company and bases its popular holiday retail benchmarking reports off the foundation of Coremetrics’ analytics and data.

Roger Dooley

I was a data-driven marketer and entrepreneur long before the Internet was popular.

In the early days of home computers, I left a senior strategy position at a Fortune 1000 company and co-founded a catalog business. We did things like square-inch analysis to evaluate products and their positioning, A/B testing to measure the effect of different cover designs, and more. We even did predictive modeling for our call center.

Later, I transitioned to the digital space with a focus on SEO, community building, and conversion. My focus on both quantitative metrics and human behavior helped me build College Confidential, which generates tens of millions of page views per month and ranks as the busiest college-bound website.

Today, I focus on turning scientific research into useful business strategies.

Austin is a great place to work from—there’s a huge tech community, lots of entrepreneurism, and a world-class university. On the non-business side, there’s no snow to shovel, the restaurant and music scenes are diverse, and the breakfast tacos are amazing!

Yuan Wright

I started my career in investment banking and corporate finance. By luck, I was at a large corporation, where you can easily move to different departments. I landed in digital analytics and never looked back!

Immediately, I realized this was what I was made to do. In traditional offline marketing, it’s hard to optimize conversions. But in digital marketing, you have the tools and technology to see the big picture and understand the entire ecosystem of how you’re interacting with the customer.

I truly love analytics because it gets you close to the customer.

Every click, every transaction helps you understand the customer better. It’s also very concrete. A/B testing, for example, only has 3 outcomes: you help business, you don’t help business, or it doesn’t matter. The numbers tell you everything and enable you to make solid business decisions.
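For readers who want to see how those three outcomes might be told apart in practice, here is a minimal Python sketch. It is not Yuan’s method or any particular tool’s. It assumes a standard two-sided, two-proportion z-test at a 5% significance level, and the function name `classify_ab_test` and the visitor counts in the usage lines are made up for illustration.

```python
import math


def classify_ab_test(control_conv, control_n, variant_conv, variant_n, alpha=0.05):
    """Classify an A/B test as 'helps', 'hurts', or 'no detectable difference'.

    Uses a two-sided two-proportion z-test (an assumption for this sketch).
    """
    p_control = control_conv / control_n
    p_variant = variant_conv / variant_n

    # Pooled conversion rate under the hypothesis that both pages perform the same.
    pooled = (control_conv + variant_conv) / (control_n + variant_n)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / variant_n))
    if std_err == 0:
        return "no detectable difference"

    z = (p_variant - p_control) / std_err
    # Two-sided p-value from the standard normal CDF, built from math.erf.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))

    if p_value >= alpha:
        return "no detectable difference"
    return "helps" if z > 0 else "hurts"


# Hypothetical numbers: 10,000 visitors per arm.
print(classify_ab_test(200, 10_000, 260, 10_000))  # helps
print(classify_ab_test(200, 10_000, 205, 10_000))  # no detectable difference
```

The third outcome really means “this test could not detect a difference,” which is why sample size matters as much as the observed rates.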

At EA, I use data to understand how we can optimize the multi-channel platforms to support customers through their entire life cycle: acquisition, conversion, growth and support. The goal? A frictionless journey along the way.

It’s hard to imagine doing this anywhere but Austin. This truly is the Conversion Capital of the World. It’s a digital hub that attracts top tech folks, and it offers culture, diversity, and opportunities for intelligent exchange. I love it, and I’m honored to be here.

Neil Iscoe

As CEO of Digital Certainty (now Sentient Ascend), I help businesses stay creative by providing a system that does the hard work of optimizing their landing pages for them. Digital Certainty helps marketers become more productive by using machine-learning to more quickly produce better results.

I’ve been in Austin a long time, receiving my PhD in Computer Sciences from UT in 1985, creating a product development lab for HP and, prior to founding Digital Certainty, being responsible for commercializing the results of the $550M of annual research performed by the University of Texas.

I’ve always been interested in data, but I realized its power when I was in graduate school.

I wanted to know what ±3% really meant.

So I attended a summer program at the University of Michigan, the home of survey sampling, and it evolved from there.
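As a rough illustration of what a figure like ±3% usually stands for, the sketch below applies the textbook margin-of-error formula for a proportion measured on a simple random sample. The 95% confidence level (z = 1.96), the worst-case proportion of 0.5, and the function names are assumptions chosen for the example, not details from Neil’s story.

```python
import math


def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Half-width of the confidence interval for a sampled proportion."""
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


def sample_size_for_margin(margin, proportion=0.5, z=1.96):
    """Smallest simple random sample that achieves the requested margin."""
    return math.ceil(z ** 2 * proportion * (1 - proportion) / margin ** 2)


print(sample_size_for_margin(0.03))      # 1068
print(round(margin_of_error(1068), 4))   # 0.03
```

Under those assumptions, a survey needs a little over a thousand respondents before it can honestly report ±3%.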

That was a really long time ago, but data is still at the core of what we do. It’s such a cool area, where you can use data to bring about real results.

Austin’s diverse talent base dovetails with the multi-disciplinary nature of CRO. Austin’s energy, rather than producing multitudes of largely indistinguishable apps, is like CRO: focused on results.

There’s remarkable unanimity of purpose and support. Technology companies can take advantage of an active ecosystem that includes incubators, city council, university, SXSW, chamber of commerce, and multiple support organizations.

And, of course, we’ve got the best breakfast tacos in the world.

Kate Morris

Data-driven marketing was something I was interested in when I started at the University of Texas. I got my BBA in Marketing and my specialization (via electives) in Customer Relationship Management. It’s similar to what I ended up in, but the online world was just getting going at that time.

Even then, though, I could see that having the data to back up plans of growth was imperative. I got into the online marketing world and have not looked back.

It’s an industry built on data and measurement. It was so great to learn that online marketing could do what marketers had such a hard time with in the past: measure impact. It’s still not perfect by any means, but I love being able to show results and test theories.

I spent 4 years in Seattle, but my home has always been here. The Austin community is so supportive—and rooted in data, conversations, and development. We have some of the best conversion-based minds, and I am proud to call some of them friends.

Kenneth Cho

Prior to People Pattern, I founded a company called Spredfast, a social media management platform for the enterprise.

The Harry Ransom Center at the University of Texas approached us to integrate social media into an awareness campaign for an Edgar Allan Poe exhibit. We initially targeted traditional literary societies and book clubs around central Texas, but I came to notice a large segment of high school girls, more specifically “Goth” girls, leading the conversation.

These girls all had one thing in common: They were social followers of Marilyn Manson, who was a vocal admirer of Edgar Allan Poe.

I suggested the HRC target ads at Facebook followers of Goth bands—and foot traffic to the exhibition tripled.

I started to recognize a shift in importance from understanding the conversation to understanding who is behind the conversation and teamed up with UT Professor of Computational Linguistics Jason Baldridge to found People Pattern.

People Pattern is a SaaS platform that helps brands identify and activate psychographic segments via semi-supervised machine learning and natural language processing. There is no longer an alternative to data-driven marketing. Understanding existing and desired customers through brand data is paramount for marketers.

The combination of digital marketing software companies and the University of Texas makes Austin a hotbed of technology and up-and-coming talent, and hiring the best and brightest to uncover and scale audience insights will enable our platform to maintain its competitive edge.

Leslie Mock

Launching one of the first public online colleges tossed me headlong into online marketing. Filling classes was crucial and new tools like email marketing became an amazing way to run campaigns, easily track what was happening and tweak things to get better and better results.

Cool. No more months of creating print ads and wondering if anyone ever saw them.

I then spearheaded online marketing for other businesses and organizations. I was sold when we saved one company hundreds of thousands of dollars in less than a year by shifting to data-driven marketing. No more “marketing to everyone and hoping it works.”

Along the way, I started a natural product line, an ecommerce business, and a media company that works with clients to get great marketing results by funneling their big vision into measurable outcomes: All possible because of the magic of data-driven marketing.

(I’m also an introverted entrepreneur and started a community to share tips and advice. You can join us at TheInnerpreneur on Facebook).

Austin is the home to SXSW Interactive, a plethora of entrepreneurs and creative start-ups. The best data-driven marketers live here and so do I. How lucky is that?

Ethan Luke Stenis

I was drawn to Austin for the reputable advertising graduate program at the University of Texas, but I stayed for the music scene and food trucks.

Upon graduation, I burst out of the UT Advertising graduate program with the ambitious goal of making Super Bowl commercials by the dozen, but once I experienced the traditional ad agency life, I realized it wasn’t my style.

I knew data-driven marketing was important once I began working as a direct response copywriter at an Austin digital marketing agency. Using SEO/SEM best practices and a dynamic analytical toolbox, I learned how to create actionable copy, track and interpret traffic stats, increase engagement, and optimize conversion rates.

It was a warm, sunny morning, and I was drinking some rich, Colombian coffee when it hit me:

If content is king, then data is the emperor.

Once I cleaned up the coffee I spit out and apologized to the rest of the meeting members for the unexplained interruption, I smiled to myself, re-calibrated my career goals, and began down the road to mastering all that is data.

Jane Dueease

I got started in data-driven marketing in college, while working on the golf course as the beer cart girl.

You have a lot of time to think when you are a beer cart girl. And I thought a lot about how to make more $$ from my customers.

There are only 18 holes on a golf course, and each team can have a max of 8 people at a time. It takes 4 hours on average to play a round of golf, so for an 8-hour shift on the course, I maxed out at 288 customers.

That sounds like a lot. But most golfers are not drinking beer and they can only drink so many sodas. Worse, they would often stop and go to the bathroom after the first nine and grab a drink/snack at the bar, leaving me with less money. So I had to create ways to fix this.

I got approval to bring liquor onto the beer cart, along with fresh sandwiches, hot coffee, hot chocolate, better snacks and sweets. I tried everything! Each change I would document and report back to the GM.

Again I had a lot of time on my hands.

Then I started to personalize my cart based on who was playing that day. Over time I got to know each member’s needs and wants, and I’d add Coors Light for Mr. Smith or 16-ounce cans of Miller Lite for Mr. Jones.

Each change, each customer, I documented as well as my earnings. I got my GM to pull beer cart sales from the prior year—and that’s when I learned that I had tripled the sales from the prior year.

My little “book” became my bible, and even the pros wanted in. I kept more data on their customers than they did. I loved it.

I made more money, my customers were happy, my boss was happy and I knew what everyone wanted.

I think about those days, circling the course again and again, and the data (customers) sitting right in front of me all the time. It is why I love data today. Some of it is so simple yet so powerful.

I pulled basic sales data from a recent ecommerce customer: a simple heat map of sales by day of the week and time of day. I wanted to know what their best-selling day and time was. As it turns out, 25% of their sales came on Sunday from 5 pm to midnight. Guess when they took the site down for maintenance/upgrades/launches?

Yep. On Sunday nights after 9 pm.

After presenting the data, we moved the maintenance windows to 6 am–10 am on Monday, our slowest sales day and time. Everyone was happier—especially the IT folks who got to launch during normal business hours.
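A heat map like the one Jane describes can be approximated with a few lines of Python. The sketch below is an illustration under stated assumptions, not her actual analysis: it counts orders rather than revenue, the function names are hypothetical, and the four sample timestamps are invented.

```python
from collections import Counter
from datetime import datetime

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]


def sales_heat_map(order_timestamps):
    """Count orders in each (weekday, hour) cell; Monday is weekday 0."""
    return Counter((ts.weekday(), ts.hour) for ts in order_timestamps)


def share_in_window(heat_map, weekday, start_hour, end_hour):
    """Fraction of all orders on `weekday` with start_hour <= hour < end_hour."""
    total = sum(heat_map.values())
    if total == 0:
        return 0.0
    in_window = sum(
        count
        for (day, hour), count in heat_map.items()
        if day == weekday and start_hour <= hour < end_hour
    )
    return in_window / total


def quietest_window(heat_map, length_hours):
    """Return (day name, start hour) of the same-day window with the fewest orders.

    Ties go to the earliest window in the week.
    """
    best = None
    for day in range(7):
        for start in range(0, 24 - length_hours + 1):
            orders = sum(heat_map.get((day, h), 0) for h in range(start, start + length_hours))
            if best is None or orders < best[0]:
                best = (orders, day, start)
    return DAYS[best[1]], best[2]


# Invented sample: one Sunday-evening order and three Tuesday-noon orders.
orders = [datetime(2024, 1, 7, 18, 30)] + [datetime(2024, 1, 2, 12, 0)] * 3
heat_map = sales_heat_map(orders)
print(share_in_window(heat_map, DAYS.index("Sun"), 17, 24))  # 0.25
print(quietest_window(heat_map, 4))
```

With real order data, the first function answers “when do customers buy?” and the last one suggests where a maintenance window would cost the least.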

Data is powerful. It tells you what your customers want (Coors Light), when they want it (Sunday nights) and so much more. Through testing, you can take opinion (the VP loves blue buy buttons) out of the equation and replace it with what the customers want (green buy buttons), turning marketing into the sales machine it should be.

Now how does Austin fit into this? I moved to Austin because I wanted to be back in Texas (I’m from Houston originally), and it was the only city I wanted to live in. Austin is the perfect location because it is such a cutting edge blend of IT, Entrepreneurs, and the universities.

Conversion as a science (vs. personal opinion) is only now gaining traction. Austin is open to it because it loves all things weird, and some of the lessons learned from looking at conversion data are weird. Just like Austin.

Kevin Koym

I founded Tech Ranch in 2008 on the idea that I could provide incubation education to entrepreneurs by focusing on the individuals instead of the startup itself.

This decision was rooted in my mission to provide a platform to impact social good.

Prior to that, I had learned that there was a gap in the market and used market-driven data to discover where the “sweet spot” would exist for a new company. I learned that entrepreneurs could easily exchange equity for dollars, but that didn’t help them improve themselves or their prospects for success after exiting their current venture.

My team and I realized that Austin was the perfect place to marry the desire to focus on the triple bottom line while still helping pioneers impact global change. Austin provides a unique community that supports all industries and works together to help startups reach their goals. Seasoned successes are always willing to share their data and experience with those new to the scene.

Therefore, as we continue to educate entrepreneurs, we help them realize that by using data—driven by research and market insights—they can avoid “reinventing the wheel”. We encourage the use of both secondary and primary research to help them narrow down their target marketing, position themselves against the competition and prepare for investor due diligence.

Mani Iyer

I’m a serial entrepreneur, startup advisor, and industry speaker on demand generation, pipeline acceleration, cross-channel marketing, B2B marketing and advertising. I’ve spoken at leading industry conferences including Ad-Tech, Leadscon, Conversion Conference, Online Marketing Summit, DemandCon, TiECon, and Startup Summit. Before that, I was a marketing consultant to VC-backed start-ups and founded a software business acquired by Oracle/PeopleSoft.

Now, as CEO of Kwanzoo, a cloud-based platform for full-funnel retargeting and personalized display campaigns, it really gets me when marketers don’t do their homework, throwing out yet another “off message,” irrelevant ad in that fleeting moment when they finally have someone’s attention.

This just has to change!

Life is too short. And it’s moving faster every day. There are better ways to spend our time, both at home and at work.

All marketers have to do is “connect the data dots” to avoid irrelevant messaging.

That’s why I love data-driven marketing. It’s the only thing that can propel that change.

Why Austin?

Gartner says CMOs will soon overtake CIOs in the enterprise. Foundation Capital, a leading Bay Area VC firm, predicts a 10X growth in marketing technology over the next 10 years. Here at Kwanzoo, we were convinced we needed to look beyond Silicon Valley as we grew our Customer Success, Sales and Marketing teams.

With its lower costs, high quality of life, music, culture, outdoors, the UT system and more, it’s been a great move for us. We are thrilled to be a part of the vibrant Austin technology and entrepreneurial ecosystem.

Charles Hua

I didn’t start out my career as a marketer. As an engineer, I always thought marketers were a bunch of party animals and that a quant like me would not fit in with that crowd.

It wasn’t until I joined the CRM group at Dell’s Austin headquarters that I learned there is a nerdy side to marketing.

I started out testing covers, formats and paper thickness when I was at the direct marketing department at Dell.

That’s the point at which I learned marketing is actually about numbers as much as about gut feelings and creativity. The digital revolution really sped up this process of experimentation. It provides much richer data sets, segmentation and personalization, providing all kinds of possibilities to respond to our customers.

Even if I weren’t a number-nerd, I’d tell you data-driven decision-making through experimentation is the key to gaining a competitive edge.

Austin matters to me primarily because it’s my home. I graduated from UT and have lived here ever since. Who wouldn’t want to? The food is awesome.

Justin Rondeau

On a January morning early in my career as a marketer in software, the CEO of the company told me that I needed to increase revenues. I asked “How?”, and I was quickly reminded that it was my job to increase revenues, not his.

Kind of a bummer statement, especially since I had very little control over the website at the time.

We had a fairly large, but unresponsive email list, so I dug through the data to develop a new campaign and split tested along the way. I presented my findings, and this gave me the chance to start optimizing the website—the place that was originally off limits to me!

Data was the key to growing my career, and every campaign I created made it easy to point to the causal relationship between the changes we made and the revenues we gained.

I was hooked and never looked back.

I’m not a full-time Austinite yet—I come down from Boston for a week each month, so it is really my second home (Austin… Boston… they even sound alike). But I feel completely at home here. I love the food, my team at DM, the music, and the impromptu conversion meetups at local bars with some of the best in the industry.

Chris Mercer

As co-owner of Seriously Simple Marketing, I work with top direct response marketers from around the world, improving revenues as they optimize funnel by funnel.

I knew the data-driven approach was for me when I realized just how much Google Analytics could bring to the table.

There is a disconnect for most marketers between the ‘out-of-the-box’ Google Analytics default install and what’s actually possible once you know how to set it up properly. When I learned how to do that, it opened up a whole new world!

I’ve been helping to spread the word to other optimizers ever since and recently created a course (called Seriously Simple Analytics) to help marketers use tools like Google Analytics & Google Tag Manager properly, so they can move from “guessing what works” to “measuring what works”.

Why Austin? We’ve lived in places like San Diego, Portland, Seattle and New York… but nothing beats Austin!

Microbrews, hiking in the greenbelt, and an entrepreneurial electricity that you can’t help but feel in the air are just some of the things that first drew my wife and me to this incredible city.

We now call Austin home because of the incredible group of talented people we’ve had the pleasure of meeting and working with. There’s just nothing like it. (Wait… did I mention the microbrews?!?)

Craig Tomlin

With 20 years of experience on both the client-side and the agency-side, I’ve grown revenue for firms like BlackBerry, Blue Cross Blue Shield, Disney, and IBM.

I learned that data-driven marketing was important while an Account Director at a local agency, where analytics and engagement data coupled with behavioral data from usability testing allowed me to grow client revenue by 300% for key accounts. It probably also helped win two Marketo Revvies and a Killer Content Award from Content Marketing Institute.

It was the combination of data and analytics audits (the “what’s happening” data) coupled with the behavioral data coming from usability testing that made the big difference. Using both gave a much clearer picture, not only of what was happening on a website, but why it was happening.

Armed with that intelligence, it’s easy to formulate entirely new hypotheses for testing, using an A/B approach that can be far more effective, giving much bigger increases in conversion.

Why Austin?

I moved here from Southern California at the height of the recession because I had three job offers in Texas: one in Houston, one in San Antonio and one in Austin. After visiting all three, it was apparent that, with its small yet dynamic and entrepreneurial atmosphere, its hill country with lakes all around, and the exceptional schools for my family, Austin was the best place for us.

And with over 100 people moving to Austin every day, I guess I’m not alone in that decision!

Austin, the perfect environment for CROs

If you always thought conversion rate optimization was only for number-nerds, I hope you’re convinced otherwise. Sure, there’s a lot of data that goes into the process, but there’s another side to the optimization game.

Several of our experts commented on the “other” side of data—meeting the needs of their best customers. The Eisenbergs went so far as to call this the “soul” of marketing.

Ultimately, though optimizers work with numbers, they’re marketing to people. And they know it.

The data simply helps them improve their ability to connect with those people, even to the point of delighting them.

Perhaps that’s why other tech or marketing centers aren’t measuring up to Austin. Here, you have the cultural diversity and creativity to bring data and people together.

Sure, Austin is a cluster of conversion optimizers. But here, they also have a life.

Going Mobile for Mobile Conversions [Hello Chicago]

If you live in Chicago, we’re bringing one of our most important presentations right to you.

If you don’t live in Chicago, may I suggest you get that Ford Fairlane lubed and tuned up for a road trip. You’ll want to be there on June 2.

We’re going mobile to spread the results of our testing on the mobile web. It’s one of the most important presentations we’ve done because the mobile web is changing fast.

Conversion Sciences is Road Tripping to Chicago June 2.

We know a thing or two about your mobile marketing. Your mobile traffic is probably one of your fastest-growing segments. It converts at depressingly low rates. You have probably decided to focus your efforts on the desktop for now.

We were there once, too.

Come see the most interesting and lucrative things we’ve learned about mobile conversions from tests across industries. You’ll learn how to avoid common conversion-killing “mobile best practices”, write CTAs that get mobile visitors to take action and employ simple UX tricks that will keep those CTAs constant without distracting or irritating visitors.

You’ll also get tips for bridging the 1st screen to 2nd screen gap, maximizing phone leads from mobile visitors and building forms that mobile visitors will actually complete.

You’ll leave this session equipped to make smarter decisions about your mobile experience.

We Get a Special Discount

We get a special discount since we’ve got the awesome wheels. Don’t tell our hosts at Unbounce that we’re sharing this code with you.

conversionsciencessentme

You better sign up before they get wise. This code lets you in the door for $149.50. That’s 50% off the already ridiculous price. You can use it here. Yes, it’s a damn long discount code. Copy it to your clipboard.

Did I Mention the Other Seven Awesome Speakers?

No? Well you can’t beat them. You should check them out after you’ve registered to see us.

We’re worth the $149.50 admission, but you also get these bright people.

Come see us in Chicago, or wait to see these great speakers at one of the overblown and expensive conferences in some far away city. Your choice.

See Me at Conversion World and Keep Your Pajamas On

There’s a formula for your landing pages that guides you to the reaction you’re aiming for. You’re paying for the traffic: now’s your chance to get the most from it.

The first-ever online CRO conference is fast approaching, and if you want that critical formula for high-converting landing pages (and of course you do), request an invite to the Conversion World Conference happening April 20-22. You’ll never be able to learn from a more diverse, interesting and knowledgeable group of international conversion experts. Ever.

You don’t even have to change out of your pajamas. It’s all online. Invite some of your geeky friends over. Pop popcorn. Invent a Conversion World drinking game.

For my part, I’ll be sharing my landing page formula. You’ll get actionable tips and best practices to create the results-driven landing pages you need. I’ll also review several of your landing pages live and suggest changes and improvements that will have an immediate impact.

What You’ll Learn

Register for this three-day extravaganza of conversion optimization goodness without leaving your computer.

Unalytics: Unconventional Analytics to Guide Your Decisions

What kind of person is “good” at analytics? It’s time to change our opinion of what a “data scientist” is. If you are involved in the care and feeding of an online marketing endeavor, you have to be good at this “data thing.”

Fortunately, this doesn’t mean that you put on your lab coat and pocket protector and spend endless hours combing through data, charts and spreadsheets.

That IS the job of the data scientist. No, you are more of a data detective, finding data to guide your decisions in unexpected places.

Check out the podcast or column for the full list.

Austin is the Conversion Capital of the World in 2015

Last year, Conversion Sciences and I teamed up to prove that Austin, Texas, is the Conversion Capital of the World.

While some may disagree, evidence still points to this unique claim to fame for the capital city of Texas.

For those who aren’t sure where Austin is, we’re providing a map.

Conversion Optimization Experts and Austin: Weirdly Connected

At first glance, conversion optimizers can seem like an elite group. Even for marketers who know they should be testing, the practice of hypothesizing and measuring feels like it should be reserved for brainy number-nerds.

But if you look closely, there’s an incredible diversity among conversion experts.

True, some of them were doing advanced calculations before most of us could count, but many got their start in unlikely places: advertising, SEO and even the golf course.

What’s most interesting is that they seem to be drawn to this one location: a cluster of entrepreneurial, music-loving, taco-eating, results-oriented marketers.

Noah Kagan, a transplant from Silicon Valley, explains the phenomenon in this TechCrunch video. He says people come to visit and realize, “Wow! This is a great culture: good lifestyle, affordable, the people are friendly… .”

That explains why they’re drawn here, but one of the reasons they stay (and flourish) is the very thing that makes Austin unique.

The city’s tag line is “Keep Austin weird.” Its hallmark: diversity. This is a place that rewards creativity and out-of-the-box thinking. So it’s the perfect place for smart people to make their mark and still enjoy life.

“In Austin, we wanna have lives,” says Noah. And apparently, a lot of smart conversion optimizers would echo that sentiment.

Like it or not, agree or not, Austin has become prime real estate for conversion rate optimizers. Last year, we asked local experts “why Austin?” This year, we decided to get a little more personal.

Here’s what they told us.

Brian Massey

Conversion Sciences | @bmassey

I was trained as a computer scientist, and wound my way through sales and into marketing. Through this journey, data-driven marketing came pretty naturally to me. I was a marketer with a scientist’s training. I wasn’t going to settle for guessing about what worked on the Web.

In 2001 I wrote my own analytics package, which I think you can still find on SourceForge.net. However, my employers didn’t really know what to make of the marketing guy who was spending his time coding. At the time, there wasn’t a word or a discipline or an industry for what I was doing. I had a hard time describing the method to my madness.

It was a “Wizards of the Web” seminar by Bryan and Jeffrey Eisenberg (see below) that sealed it for me. They showed me how science and marketing could work as one.

In 2007, I started Conversion Sciences and began teaching the world about this new set of disciplines. Many businesses get it now, and we have been having a lot of fun understanding the crazy people they call their visitors.

How does Austin play into this? Aside from the unusual business school, the Wizard Academy here, Austin has the “Creative Class” we need to make websites awesome. When you combine low cost of living with high-value talent, it’s a great place to run a conversion optimization company.

Joel Harvey

Conversion Sciences | @JoelJHarvey

My first experience with using data to make money came when I was ten years old.

by understanding the relationship of those statistics and the market value of a particular player or card and leveraging that knowledge in trade negotiations with other collectors.

There was nothing more thrilling than using insights from data to engage in a profitable trade. By the time I lost interest in sports cards (about three years later), I had turned several hundred dollars into a collection worth several thousand dollars.

I was hooked.

A handful of years later I started building and monetizing websites. This was before Google Website Optimizer or any early A/B testing tools, but I knew that in order to maximize profits, I would need to try different types of content.

Essentially that meant running pre/post tests and keeping a sharp eye on the bottom line to see if it was moving in the right direction. I quickly realized how powerful (and profitable) even small changes to a page could be and have been hooked on optimization ever since.

How Austin plays into this: About eight years ago my digital marketing career was really starting to blossom. However, I was living in Albuquerque, New Mexico at the time and found that there was a lack of mentors, collaborators and overall talent for me to tap into. I needed to move somewhere that had a thriving digital ecosystem and, after deep analysis, Austin topped the list. I haven’t looked back since.

Jeffrey and Bryan Eisenberg

Buyer Legends, IdealSpot | @JeffreyGroks, @TheGrok

After almost two decades in the CRO game, we moved to Austin, the city whose businesses live by the slogan “Keep Austin Weird”. The move turned out to be a catalyst for many positive changes, personally and professionally.

Austin has truly become the CRO capital of the world. It is full of brilliant CRO practitioners. Over beers a few years ago, our colleagues inspired us to look for a better solution to shared frustrations.

“Keep Austin Weird” had been started to promote local businesses, those who deeply understand and strive to delight Austin’s population. These businesses are fiercely committed to succeed despite the advantages offered by the homogeneity of large national chains.

Bryan and I have worked together with a prestigious list of blue-chip clients to achieve well over $2 Billion in CRO wins. We have trained thousands of people in CRO and written several books, including two New York Times best sellers about CRO.

Nevertheless we share the frustration that CRO, as too many companies practice it today, is a dead end.

It was in Austin that we shifted our focus away from traditional CRO. We pioneered CRO as a study of tactics to increase conversions. Unlike “Keep Austin Weird,” our early efforts lacked the “soul” of marketing, which we later identified—

In the past we trained and encouraged clients to become experts in many of the CRO disciplines. They got great results! The trouble was, no single siloed discipline was a decisive factor in consistently achieving better results.

We reflected on our nearly twenty years of CRO work. Some companies effortlessly adopted a culture of optimization. Others achieved wins but failed to absorb the lessons learned. Their CRO efforts were after-the-fact fixes; the dead end of many of today’s traditional CRO efforts.

Those companies that excelled evolved a culture of customer-centricity—not at the manager or director level, but in the C-suite. The best companies absorbed CRO learnings and incorporated them into operational changes. That made them superior, not just at fixing, but at creating relevant customer experiences, including marketing campaigns, customer flows and meaningful content.

One of the privileges of working through CRO challenges with diverse organizations over the years was learning that no matter the industry, business size or product, the only story that truly matters is the story that your delighted customers tell about your company—not the one you tell your customer.

We started BuyerLegends.com in the great weird city of Austin to help companies learn how to use the soulful art of storytelling to create customer-centered, data-driven customer experience design, supported by the only story that matters.

Peep Laja

ConversionXL | @peeplaja

I used to run an SEO agency. When I got my clients to rank #1 for a number of keywords—which, by the way, wasn’t very hard in 2007—I noticed they weren’t necessarily making more money.

That was clue #1.

Then I did a bunch of direct response marketing for information products.

That was clue #2.

My last SaaS startup failed, I ran out of ideas, and didn’t have a structured process to grow the business. I felt there must be a better way to build businesses. That’s when I discovered Lean Startup.

That was clue #3.

While all of that was taking place, I fell in love with a girl from Austin and ended up moving here. When I started to self-identify as a conversion guy (~2011), I noticed I was surrounded by others who do the same. Total coincidence.

Brett Hurt

Bazaarvoice, Coremetrics | @bazaarbrett

My parents were retailers from the time I was born, and my dad was a direct marketer and inventor of the first halogen fishing light. I grew up working in my parents’ store. I also grew up as a programmer, starting at age 7 and programming over 40 hours a week from age 7 to 21.

In 1998, my wife and I launched an online retailer named BodyMatrix, leveraging an eCommerce platform that I had built from scratch over the summer (in between school years at Wharton, where I was earning my MBA). We were thrilled to be getting orders all over the world, from US military bases to the UK.

But we were flying blind. Working in my parents’ stores and helping my dad market his products had gotten me used to understanding customer behavior. I had written my Wharton leadership study on Sam Walton, based on his book Made in America. A key part of Walmart’s early success was understanding customer behavior in their stores.

For all of the advantages of the Internet, it had a huge disadvantage: You couldn’t see anything.

So

As a student, I couldn’t afford to buy one and the cheap ones (like WebTrends) were very poor, offering only high-level page view counts. I needed to know what was happening within our online store—to at least recreate what I knew about our customers at my parents’ stores.

The lightbulb moment came when I realized I could know even more.

I told my parents how my wife and I had developed the “biggest brain” with the ability to remember all customer behavior. And it worked—we almost doubled our conversion ratio (from 2% to 3.8%) in three months.

This attracted the attention of a PhD student at Wharton who formed his dissertation on the data we had collected and how we could personalize based on it.

I thought this was novel, but I didn’t know how novel it really was. I went to friends, Wharton grads who worked at CDnow and Amazon. To my shock, they urged me to form a business around this.

That’s how Coremetrics was born. I sold my other businesses, including my Web consulting business and BodyMatrix, and put all of my chips on Coremetrics.

We moved the business to Austin, where I was able to attract many fantastic developers, product managers, and client services personnel. We built a global company, became an industry standard in retail, and today IBM owns the company and bases its popular holiday retail benchmarking reports off the foundation of Coremetrics’ analytics and data.

Roger Dooley

NeuroScience Marketing | @rogerdooley

I was a data-driven marketer and entrepreneur long before the Internet was popular.

In the early days of home computers, I left a senior strategy position at a Fortune 1000 company and co-founded a catalog business. We did things like square-inch analysis to evaluate products and their positioning, A/B testing to measure the effect of different cover designs, and more. We even did predictive modeling for our call center.

Later, I transitioned to the digital space with a focus on SEO, community building, and conversion. My focus on both quantitative metrics and human behavior helped me build College Confidential, which generates tens of millions of page views per month and ranks as the busiest college-bound website.

Austin is a great place to work from—there’s a huge tech community, lots of entrepreneurism, and a world-class university. On the non-business side, there’s no snow to shovel, the restaurant and music scenes are diverse, and the breakfast tacos are amazing!

Yuan Wright

Electronic Arts (EA)

I started my career in investment banking and corporate finance. By luck, I was at a large corporation, where you can easily move to different departments. I landed in digital analytics and never looked back!

Immediately, I realized this was what I was made to do. In traditional offline marketing, it’s hard to optimize conversions. But in digital marketing, you have the tools and technology to see the big picture and understand the entire ecosystem of how you’re interacting with the customer.

Every click, every transaction helps you understand the customer better. It’s also very concrete. A/B testing, for example, only has 3 outcomes: you help business, you don’t help business, or it doesn’t matter. The numbers tell you everything and enable you to make solid business decisions.
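For readers who want to see those three outcomes in code, here is a minimal sketch. It assumes a standard two-proportion z-test at a 5% significance level, which is a common way to read an A/B test but not something described above; the function name `classify_ab_test` and the visitor counts are made up for illustration.

```python
import math


def classify_ab_test(control_conv, control_n, variant_conv, variant_n, alpha=0.05):
    """Sort an A/B result into one of three outcomes.

    Assumption: a two-sided, two-proportion z-test with a pooled
    standard error. All inputs are hypothetical counts.
    """
    rate_control = control_conv / control_n
    rate_variant = variant_conv / variant_n
    pooled = (control_conv + variant_conv) / (control_n + variant_n)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / variant_n))
    if std_err == 0:
        return "doesn't matter"
    z = (rate_variant - rate_control) / std_err
    # Two-sided p-value from the standard normal CDF (via math.erf).
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    if p_value >= alpha:
        return "doesn't matter"
    return "helps" if z > 0 else "doesn't help"


# Hypothetical traffic: 10,000 visitors per arm.
print(classify_ab_test(200, 10_000, 380, 10_000))
print(classify_ab_test(380, 10_000, 200, 10_000))
print(classify_ab_test(200, 10_000, 205, 10_000))
```

In practice the sample size and significance threshold should be chosen before the test starts, so the “doesn’t matter” verdict is not just a sign of too little traffic.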

At EA, I use data to understand how we can optimize the multi-channel platforms to support customers through their entire life cycle: acquisition, conversion, growth and support. The goal? A frictionless journey along the way.

It’s hard to imagine doing this anywhere but Austin. This truly is the Conversion Capital of the World. It’s a digital hub that attracts top tech folks, and it offers culture, diversity, and opportunities for intelligent exchange. I love it, and I’m honored to be here.

Neil Iscoe

Digital Certainty | @DCertainty

As CEO of Digital Certainty (now Sentient Ascend), I help businesses stay creative by providing a system that does the hard work of optimizing their landing pages for them. Digital Certainty helps marketers become more productive by using machine-learning to more quickly produce better results.

I’ve been in Austin a long time, receiving my PhD in Computer Sciences from UT in 1985, creating a product development lab for HP and, prior to founding Digital Certainty, being responsible for commercializing the results of the $550M of annual research performed by the University of Texas.

I’ve always been interested in data, but I realized its power when I was in graduate school.

So I attended a summer program at the University of Michigan, the home of survey sampling, and it evolved from there.

That was a really long time ago, but data is still at the core of what we do. It’s such a cool area, where you can use data to bring about real results.

Austin’s diverse talent base dovetails with the multi-disciplinary nature of CRO. Austin’s energy, rather than producing multitudes of largely indistinguishable apps, is like CRO: focused on results.

There’s remarkable unanimity of purpose and support. Technology companies can take advantage of an active ecosystem that includes incubators, city council, university, SXSW, chamber of commerce, and multiple support organizations.

And, of course, we’ve got the best breakfast tacos in the world.

Kate Morris

Outspoken Media | @katemorris

Data-driven marketing was something I was interested in when I started at the University of Texas. I got my BBA in Marketing and my specialization (via electives) in Customer Relationship Management. It’s similar to what I ended up in, but the online world was just getting going at that time.

Even then, though, I could see that having the data to back up plans of growth was imperative. I got into the online marketing world and have not looked back.

It’s an industry built on data and measurement. It was so great to learn that online marketing could do what marketers had such a hard time with in the past: measure impact. It’s still not perfect by any means, but

I spent 4 years in Seattle, but my home has always been here. The Austin community is so supportive—and rooted in data, conversations, and development. We have some of the best conversion-based minds, and I am proud to call some of them friends.

Kenneth Cho

People Pattern | @chonuff

Prior to People Pattern, I founded a company called Spredfast, a social media management platform for the enterprise.

The Harry Ransom Center at the University of Texas approached us to integrate social media into an awareness campaign for an Edgar Allan Poe exhibit. We initially targeted traditional literary societies and book clubs around central Texas, but I came to notice a large segment of high school girls (more specifically, “Goth” girls) leading the conversation.

I suggested the HRC target ads at Facebook followers of Goth bands—and foot traffic to the exhibition tripled.

I started to recognize a shift in importance from understanding the conversation to understanding who is behind the conversation and teamed up with UT Professor of Computational Linguistics Jason Baldridge to found People Pattern.

People Pattern is a SaaS platform that helps brands identify and activate psychographic segments via semi-supervised machine learning and natural language processing. There is no longer an alternative to data-driven marketing. Understanding existing and desired customers through brand data is paramount for marketers.

The combination of digital marketing software companies and the University of Texas makes Austin a hotbed of technology and up-and-coming talent, and hiring the best and brightest to uncover and scale audience insights will enable our platform to maintain its competitive edge.

Leslie Mock

Idea Pioneer Media, TheInnerpreneur | @LeslieMock

Launching one of the first public online colleges tossed me headlong into online marketing. Filling classes was crucial and new tools like email marketing became an amazing way to run campaigns, easily track what was happening and tweak things to get better and better results.

Cool. No more months of creating print ads and wondering if anyone ever saw them.

I then spearheaded online marketing for other businesses and organizations—

by shifting to data-driven marketing. No more “marketing to everyone and hoping it works”.

Along the way, I started a natural product line, an ecommerce business, and a media company that works with clients to get great marketing results by funneling their big vision into measurable outcomes: All possible because of the magic of data-driven marketing.

(I’m also an introverted entrepreneur and started a community to share tips and advice. You can join us at TheInnerpreneur on Facebook).

Austin is home to SXSW Interactive, a plethora of entrepreneurs, and creative start-ups. The best data-driven marketers live here and so do I. How lucky is that?

Ethan Luke Stenis

Street Authority | @elstenis

I was drawn to Austin for the reputable advertising graduate program at the University of Texas, but I stayed for the music scene and food trucks.

Upon graduation, I burst out of the UT Advertising graduate program with the ambitious goal of making Super Bowl commercials by the dozen, but once I experienced the traditional ad agency life, I realized it wasn’t my style.

I knew data-driven marketing was important once I began working as a direct response copywriter at an Austin digital marketing agency. Using SEO/SEM best practices and a dynamic analytical toolbox, I learned how to create actionable copy, track and interpret traffic stats, increase engagement, and optimize conversion rates.

It was a warm, sunny morning, and I was drinking some rich, Colombian coffee when it hit me:

Once I cleaned up the coffee I spit out and apologized to the rest of the meeting members for the unexplained interruption, I smiled to myself, re-calibrated my career goals, and began down the road to mastering all that is data.

Jane Dueease

Univar Environmental Services | @techjane

You have a lot of time to think when you are a beer cart girl. And I thought a lot about how to make more $$ from my customers.

There are only 18 holes on a golf course, and each team can have a max of 8 people at a time. It takes 4 hours on average to play a round of golf, so for an 8-hour shift on the course, I maxed out at 288 customers.

That sounds like a lot. But most golfers are not drinking beer and they can only drink so many sodas. Worse, they would often stop and go to the bathroom after the first nine and grab a drink/snack at the bar, leaving me with less money. So I had to create ways to fix this.

I got approval to bring liquor onto the beer cart, along with fresh sandwiches, hot coffee, hot chocolate, better snacks and sweets. I tried everything! Each change I would document and report back to the GM.

Again I had a lot of time on my hands.

Then I started to personalize my cart based on who was playing that day. Over time I got to know each member’s needs and wants, and I’d add Coors Light for Mr. Smith or 16-ounce cans of Miller Lite for Mr. Jones.

I documented each change and each customer, as well as my earnings. I got my GM to pull beer cart sales from the prior year, and that’s when I learned that I had tripled them.

My little “book” became my bible. Even the pros wanted in. I kept more data on their customers than they did. I loved it.

I made more money, my customers were happy, my boss was happy and I knew what everyone wanted.

I think about those days, circling the course again and again, and the data (customers) sitting right in front of me all the time. It is why I love data today. Some of it is so simple yet so powerful.

I pulled basic sales data from a recent ecommerce customer: a simple heat map of sales by day of the week and time of day. I wanted to know what their best-selling day and time was. As it turns out, 25% of their sales came on Sunday from 5 pm to midnight. Guess when they took the site down for maintenance, upgrades and launches?

Yep. On Sunday nights after 9 pm.

After presenting the data, we moved the maintenance windows to 6 am–10 am on Monday, our slowest sales day and time. Everyone was happier—especially the IT folks who got to launch during normal business hours.
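A day-and-hour breakdown like that one takes only a few lines of code. The sketch below is an illustration, not the analysis actually run for that customer: the function names (`sales_by_day_and_hour`, `share_in_window`) and the three sample orders are made up.

```python
from collections import defaultdict
from datetime import datetime

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday"]


def sales_by_day_and_hour(orders):
    """Sum order amounts into (day name, hour) buckets, the raw
    numbers behind a day-of-week by time-of-day heat map."""
    totals = defaultdict(float)
    for placed_at, amount in orders:
        totals[(DAY_NAMES[placed_at.weekday()], placed_at.hour)] += amount
    return dict(totals)


def share_in_window(orders, day, start_hour, end_hour):
    """Fraction of total sales placed on `day` with
    start_hour <= hour < end_hour (24 means midnight)."""
    total = sum(amount for _, amount in orders)
    if total == 0:
        return 0.0
    in_window = sum(
        amount for placed_at, amount in orders
        if DAY_NAMES[placed_at.weekday()] == day
        and start_hour <= placed_at.hour < end_hour
    )
    return in_window / total


# Hypothetical orders: (timestamp, amount in dollars).
orders = [
    (datetime(2015, 3, 1, 18, 0), 25.0),   # a Sunday evening
    (datetime(2015, 3, 2, 8, 0), 25.0),    # a Monday morning
    (datetime(2015, 3, 3, 12, 0), 50.0),   # a Tuesday at noon
]
print(sales_by_day_and_hour(orders))
print(share_in_window(orders, "Sunday", 17, 24))
```

Running the same window check on each candidate maintenance slot shows which one puts the least revenue at risk.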

Data is powerful. It tells you what your customers want (Coors Light), when they want it (Sunday nights) and so much more. Through testing, you can take opinion (the VP loves blue buy buttons) out of the equation and replace it with what the customers want (green buy buttons), turning marketing into the sales machine it should be.

Now how does Austin fit into this? I moved to Austin because I wanted to be back in Texas (I’m from Houston originally), and it was the only city I wanted to live in. Austin is the perfect location because it is such a cutting edge blend of IT, Entrepreneurs, and the universities.

Conversion as a science (vs. personal opinion) is only now gaining traction. Austin is open to it because it loves all things weird, and some of the lessons learned from looking at conversion data are weird. Just like Austin.

Kevin Koym

Tech Ranch Austin | @kkoym

I founded Tech Ranch in 2008 on the idea that I could provide incubation education to entrepreneurs by focusing on the individuals instead of the startup itself.

Prior to that, I had learned that there was a gap in the market and used market-driven data to discover where the “sweet spot” would exist for a new company. I learned that entrepreneurs could easily exchange equity for dollars, but that didn’t help them improve themselves or their prospects for success after exiting their current venture.

My team and I realized that Austin was the perfect place to marry the desire to focus on the triple bottom line while still helping pioneers impact global change. Austin provides a unique community that supports all industries and works together to help startups reach their goals. Seasoned successes are always willing to share their data and experience with those new to the scene.

Therefore, as we continue to educate entrepreneurs, we help them realize that by using data, driven by research and market insights, they can avoid “reinventing the wheel”. We encourage the use of both secondary and primary research to help them narrow down their target market, position themselves against the competition and prepare for investor due diligence.

Mani Iyer

Kwanzoo | @iyermani

I’m a serial entrepreneur, startup advisor, and industry speaker on demand generation, pipeline acceleration, cross-channel marketing, B2B marketing and advertising. I’ve spoken at leading industry conferences including Ad-Tech, Leadscon, Conversion Conference, Online Marketing Summit, DemandCon, TiECon, and Startup Summit. Before that, I was a marketing consultant to VC-backed start-ups and founded a software business acquired by Oracle/PeopleSoft.

Now, as CEO of Kwanzoo, a cloud-based platform for full-funnel retargeting and personalized display campaigns, it really gets me when marketers don’t do their homework, throwing out yet another “off message,” irrelevant ad in that fleeting moment when they finally have someone’s attention.

This just has to change!

Life is too short. And it’s moving faster every day. There are better ways to spend our time, both at home and at work.

That’s why I love data-driven marketing. It’s the only thing that can propel that change.

Why Austin?

Gartner says CMOs will soon overtake CIOs in the enterprise. Foundation Capital, a leading Bay Area VC firm, predicts a 10X growth in marketing technology over the next 10 years. Here at Kwanzoo, we were convinced we needed to look beyond Silicon Valley as we grew our Customer Success, Sales and Marketing teams.

With its lower costs, high quality of life, music, culture, outdoors, the UT system and more, it’s been a great move for us. We are thrilled to be a part of the vibrant Austin technology and entrepreneurial ecosystem.

Charles Hua

RetailMeNot.com | @charleschenhua

I didn’t start out my career as a marketer. As an engineer, I always thought marketers were a bunch of party animals, and that a quant like me would not fit in with that crowd.

It wasn’t until I joined the CRM group at Dell’s Austin headquarters that

I started out testing covers, formats and paper thickness when I was in the direct marketing department at Dell.

That’s the point at which I learned marketing is actually about numbers as much as about gut feelings and creativity. The digital revolution really sped up this process of experimentation. It provides much richer data sets, segmentation and personalization, providing all kinds of possibilities to respond to our customers.

Even if I weren’t a number-nerd, I’d tell you data-driven decision-making through experimentation is the key to gaining a competitive edge.

Austin matters to me primarily because it’s my home. I graduated from UT and have lived here ever since. Who wouldn’t want to? The food is awesome.

Justin Rondeau

DigitalMarketer | @Jtrondeau

On a January morning early in my career as a marketer in software, the CEO of the company told me that I needed to increase revenues. I asked “How?”, and

Kind of a bummer statement, especially since I had very little control over the website at the time.

We had a fairly large, but unresponsive email list, so I dug through the data to develop a new campaign and split tested along the way. I presented my findings, and this gave me the chance to start optimizing the website—the place that was originally off limits to me!

Data was the key to growing my career, and every campaign I created made it easy to point to the causal relationship between the changes we made and the revenues we gained.

I was hooked and never looked back.

I’m not a full-time Austinite yet—I come down from Boston for a week each month, so it is really my second home (Austin… Boston… they even sound alike). But I feel completely at home here. I love the food, my team at DM, the music, and the impromptu conversion meetups at local bars with some of the best in the industry.

Chris Mercer

Seriously Simple Marketing | @mercertweets

As co-owner of Seriously Simple Marketing, I work with top direct response marketers from around the world, improving revenues as they optimize funnel by funnel.

I knew the data-driven approach was for me when I realized just how much Google Analytics could bring to the table.

There is a disconnect for most marketers between the ‘out-of-the-box’ Google Analytics default install and what’s actually possible once you know how to set it up properly. When I learned how to do that, it opened up a whole new world!

I’ve been helping to spread the word to other optimizers ever since and recently created a course (called Seriously Simple Analytics) to help marketers use tools like Google Analytics & Google Tag Manager properly, so they can move from “guessing what works” to “measuring what works”.

Why Austin? We’ve lived in places like San Diego, Portland, Seattle and New York… but nothing beats Austin!

, are just some of the things that first drew my wife and me to this incredible city.

We now call Austin home because of the incredible group of talented people we’ve had the pleasure of meeting and working with. There’s just nothing like it. (Wait… did I mention the microbrews?!?)

Craig Tomlin

WCT & Associates | @ctomlin

With 20 years of experience on both the client-side and the agency-side, I’ve grown revenue for firms like BlackBerry, Blue Cross Blue Shield, Disney, and IBM.

I learned that data-driven marketing was important while an Account Director at a local agency, where analytics and engagement data coupled with behavioral data from usability testing allowed me to grow client revenue by 300% for key accounts. It probably also helped win two Marketo Revvies and a Killer Content Award from Content Marketing Institute.

— coupled with the behavioral data coming from usability testing, that made the big difference. Using both gave a much clearer picture, not only of what was happening on a website, but why it was happening.

Armed with that intelligence, it’s easy to formulate entirely new hypotheses for testing, using an A/B approach that can be far more effective, giving much bigger increases in conversion.

Why Austin?

I moved here from Southern California at the height of the recession because I had three job offers in Texas: one in Houston, one in San Antonio and one in Austin. After visiting all three, it was apparent that Austin, with its small yet dynamic and entrepreneurial atmosphere, its hill country with lakes all around, and its exceptional schools for my family, was the best place for us.

And with over 100 people moving to Austin every day, I guess I’m not alone in that decision!

Austin, the perfect environment for CROs

If you always thought conversion rate optimization was only for number-nerds, I hope you’re convinced otherwise. Sure, there’s a lot of data that goes into the process, but there’s another side to the optimization game.

Several of our experts commented on the “other” side of data—meeting the needs of their best customers. The Eisenbergs went so far as to call this the “soul” of marketing.

The data simply helps them improve their ability to connect with those people, even to the point of delighting them.

Perhaps that’s why other tech or marketing centers aren’t measuring up to Austin. Here, you have the cultural diversity and creativity to bring data and people together.

Sure, Austin is a cluster of conversion optimizers. But here, they also have a life.

Mobile Web 1.0: Party Like It's 1999

We’ve come a long way, baby. This is one of my first web pages, from 1998.

Soft Reality Home Page from 1998. It was Web 1.0.

This was Web 1.0. Looking back, we have to cringe. But guess what: we’re in Mobile Web 1.0 and it feels like 1998 all over again.

In 1998, Web 1.0 was on the verge of becoming Web 2.0, which fueled a bubble that would bring stock markets down and rearrange the tech landscape.

Is Mobile Web 1.0 Like Web 1.0?

Mobile Web 1.0 may not have such a violent transition, but what we’re learning from testing mobile optimized websites is that we will look back at Mobile Web 1.0 and cringe, just like when we look at Web 1.0 sites.

What are we doing on the mobile web that is the equivalent of blinking text, myriad fonts and crazy background patterns?

Conversion Scientist Joel Harvey will attempt to answer some of those questions in his Conversion Conference presentation Mobile Optimization Essentials.

He’s going to review several of the tests we’ve performed here at Conversion Sciences and let you in on how Mobile Web 2.0 is shaping up.

This won’t be some humdrum presentation either. Joel is one of the highest rated speakers at Conversion Conference, and he has the badge to prove it.

43 Reasons to Attend Conversion Conference

If Joel’s presentation wasn’t enough, you’ll learn from and meet speakers doing 43 sessions covering all aspects of online conversion.

And the list goes on and on. It’s a complete dose of getting more revenue from the audience you already have.

Save $100 with Code JOEL100

Because we have sway with the organizers of Conversion Conference, we can get you a sweet deal on the price of a ticket: $100 off.

Conversion Conference is just around the corner – only a month away. By mid-May, you’ll have a new perspective on how to make online marketing hum for your business.

And it’s in Las Vegas.

Tickets are going fast. Grab your ticket soon before they sell out. Don’t forget to use your discount code: JOEL100.

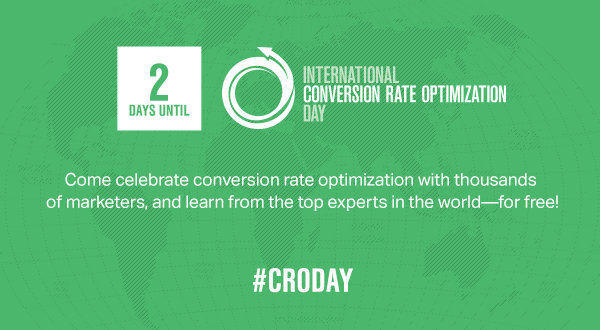

Austin Has The Highest Number of Website Optimizers per Capita

Last year, Austin, Texas declared itself the Conversion Capital of the World with some stiff competition.

Clearly, the quality of the breakfast tacos and microbrews is a determining factor in how optimizers choose their city of residence.

It turns out that this year there is even more reason to support this very scientific and not-at-all-biased claim. We’ll be sharing our updated list of Austintatious website optimization pros on CRO Day, April 9.

Website optimizers aren’t your typical marketer. In our roundup, each one will tell the story of how they were drawn, pulled or tricked into conversion optimization.

Subscribe to The Conversion Scientist. Don’t miss this Ausome list.

Ironically, CRO Day was declared by the crew at Unbounce, a respected but decidedly “ain’t in Austin” company. Despite that, we think that you should be online for this event. We will participate.

breakfast tacos by goodiesfirst via Compfight cc and adapted for this post

High Converting Websites: What's the Secret Weapon?

You don’t have to do very many split tests before you find your secret weapon for high converting websites. I predict your secret weapon will be the same as ours.

Of course, if I reveal what it is, it won’t be a “secret” anymore, now will it? So, I’ll just drop a few clues and you can guess.

Now that you’re part of the inner circle, you have a golden opportunity to get better at deploying this secret weapon. Send us your questions now (right now) and listen in on CRO Day, April 9, as Joanna and I answer your questions on _________ (almost gave it away!) and other conversion optimization issues.

Your questions could address the two steps to making this work:

But you can Ask Us Anything about Conversion Rate Optimization (CRO).

The truth is, if you don’t have your value proposition down (the words and images that define what you offer and why it’s good to have), you won’t have much luck testing forms, carts and such.

How This Online Service Makes Smart People Feel Stupid [CASE STUDY]

Updated April 5, 2015

What could keep a highly motivated potential buyer from completing a transaction on your website? What could make a highly educated visitor feel like a bumbling child? Here’s one way.

I am a fan of the blubrry podcasting service. Founder Todd Cochrane hosted one of my favorite podcasts way back after the turn of the century. They do a good job of hosting The Conversion Sciences Podcast and offer good analytics on listeners.

So, when my account was cancelled due to a change in our PayPal account, I was keenly interested in restoring the account and my data. I mean, we’re talking about $12 a month here. No big deal.

What could possibly keep me from completing such a transaction? Well, I didn’t complete the resubscription process without leaving the site in confusion first. Wait until you find out why.

Early Optimization Success Comes From Embarrassing Places

It can be really hard to fend off a determined buyer. It can be really easy to confound a less-committed buyer.

Conversion optimization services promise sophisticated analysis and testing to make websites more effective. At Conversion Sciences, our Conversion Catalyst process is built on one simple assumption: We don’t know what will make an online business more money. We don’t know what changes will release hidden money.

We just have to be really good at finding out.

Our first discoveries inevitably come from two sources:

1. Technical problems on an untested device.

You don’t have just one website. You have 20 or 30 or more. Every device serves up a slightly different website. Every browser interprets your site in its own way.

The most reliable way to see how well your many websites are performing is with the actual systems. Our QAtion Station consists of computers, tablets and phones of varying vintages, from Windows XP computers running IE 6 to the latest iPhones.

We are in a unique position to see all of your websites. You’ll be surprised at some of the twisted “interpretations” these devices make of your site. We only check devices that make up a good-sized portion of your visitors. So, when we find an obscure bug, our clients make more money.

2. Things only a newbie to the site would find.

This is where our ignorance comes into play. We don’t need to be up on the best practices for conversion optimization, though we are. We just need to be human. It is one of our more charming characteristics.

You can’t see the problems you create for your visitors because you are too close to your business. Your website is a familiar place that is comfortable to you and to few others. We are thorough and know how to document problems so that they can be fixed or tested.

My blubrry experience is a case study in this last category.

Schedule a call with us and we’ll tell you what we can do for your online business. If you have five hundred transactions or more a month, we can find the sales, leads and subscribers you’re missing every day.

How blubrry Made a Scientist Want to Cry

It took me a while to find the right place on the blubrry site to re-order service. The navigation menus weren’t labeled in the way I would label them. And I’m an experienced podcaster. I finally stumbled upon a link buried on a page that would allow me to repurchase my service.

Here’s the link in my blubrry account I was looking for.

Triumphantly, I clicked this link. This is what I got:

The blubrry checkout page doesn’t offer any help.

I was offered a choice of four amounts to pay, with no other information to guide my choice. All confidence drained from my body. Clearly I missed something. I’m a college graduate! I’m a trained web expert! And I cannot buy a subscription for a podcast hosting service? Self-loathing quickly followed.

Which would you choose? Is the cheapest enough?

Any bright individual would choose the cheapest. But then, I didn’t want to risk my content by being a cheapskate.

Ultimately, I left and went back to the emails informing me that my account had been cancelled. There were no links to this page nor any page offering solace.

I came back and, acting on a sliver of memory, chose the $12/month option because that was what I thought I was paying.

It was only when putting this column together that I found the page with the details I was missing. By clicking from my blubrry account page on “blubrry home” and then “blubrry store” I found this.

No matter which “Order Now” button you click, you are taken to the same confusing page.

Guess where “Order Now” takes you? Yep. The same obscure page listing four undefined prices.

The page of frustration cannot be defeated.

Of course, this could be fixed with a little logic that selected the proper level for the visitor and listed the name of the service level.
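To make that "little logic" concrete, here is a minimal sketch in Python. It is not blubrry's code or catalog: the plan names and every price except the $12 a month from my story are placeholders I made up, and the function names are hypothetical. It shows the two fixes described above: put a name next to each price, and preselect the level the returning customer had before.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Plan:
    name: str           # hypothetical service-level name
    monthly_price: int  # US dollars per month (placeholder values)


# Hypothetical catalog. Only the $12 figure comes from the story above.
PLANS = [
    Plan("Small", 12),
    Plan("Medium", 20),
    Plan("Large", 40),
    Plan("Extra Large", 80),
]


def preselected_plan(previous_price: Optional[int]) -> Plan:
    """Pick the visitor's previous plan when known, else the cheapest plan."""
    for plan in PLANS:
        if plan.monthly_price == previous_price:
            return plan
    return min(PLANS, key=lambda p: p.monthly_price)


def checkout_options(previous_price: Optional[int]) -> List[str]:
    """Return one labeled line per plan, flagging the previous plan if any."""
    lines = []
    for plan in PLANS:
        label = f"{plan.name}: ${plan.monthly_price}/month"
        if plan.monthly_price == previous_price:
            label += " (your previous plan)"
        lines.append(label)
    return lines
```

A returning visitor who paid $12 a month would see their old level flagged and already selected. A new visitor would at least see a name next to every price.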

The point of this case study is this: conversion optimization gold may not be as far away as you think.

Blubrry is a Good Service, But…

I have already invested the time to make this service work for me. I’ve been a client for a couple of years. I’m committed. I did, however, check out competitor Libsyn to see if I should just jump ship. I didn’t.

Those less committed prospects who might be making a similar decision won’t be so forgiving.

The site is a user experience disaster, one of the hardest to use I’ve encountered. My account is not set up properly, but I’ve been able to make it work. I just found out that blubrry has options for advanced analytics. What a shame that I didn’t have this data sooner.

I suspect I am not the only one who has trouble with blubrry.com.

The site doesn’t need a redesign, but it needs some guiding elements on key pages. Only then can we begin to optimize for those subtle changes that make a site really convert.

Todd, please contact us and let us help you. It may be the most profitable call you make all year.

Any sites you think need a re-read? Let us know in the comments.

Update