Why Web Design for Conversion Needs Science

Web design is about communicating with people who have problems that your offering solves. Science is about making sure you’re not designing just for yourself. If you do web design for conversion, science lets you guarantee that the design you launch performs better, because you only launch what real visitors have shown to work. Here’s how.

This is Alice. She’s a web designer. Alice is doing a website redesign. The design she completes is based on research and her extensive experience. She is confident that this new design will make more visitors choose her company.

Alice the Web Designer

Alice works with Bob, the web developer, and Cindy, the marketing manager, to finalize her design, copy and images.

Alice the web designer, Bob the web developer and Cindy the marketing manager.

Their boss, Doug, has faith in the team and thus the design. He loops in Emily from Sales. She thinks it is a big improvement.

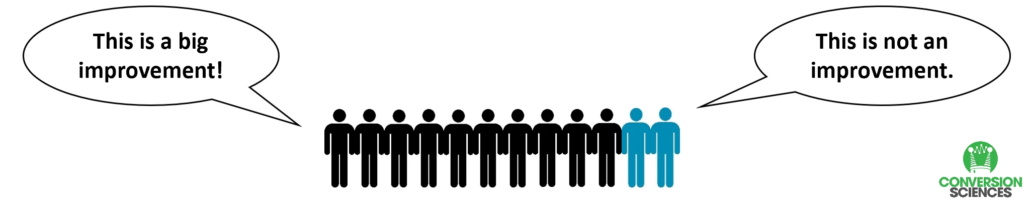

But no one has asked any customers yet. So the team invited some prospective customers in to weigh in on the new design.

Most of them thought the new design was a definite improvement.

The focus group was mostly in favor of the new design.

Frank, who worked in reception, was concerned. He loved to shop online, and knew that if a product had a 5-star rating but only 12 reviews, he wouldn’t believe that rating.

So why were 12 opinions enough to believe that this design was actually an improvement? Five of them came from company employees, after all!

How 12 people can get it wrong.

Fortunately, Georgia, the company scientist, was also concerned. She knew that the internal group was going to love the new design because they had designed it. And she knew that members of focus groups want to like the new design, because they are eager to please.

They’re human.

Instead of replacing the old design with the new design, Georgia set up an experiment. Half of the visitors to the website saw the old design and half saw the new design. Which one would generate more revenue? What she found was that, as far as the company’s prospective customers were concerned, there was no difference.

How did a talented bunch of people, with the help of real customers, come to such an erroneous conclusion?

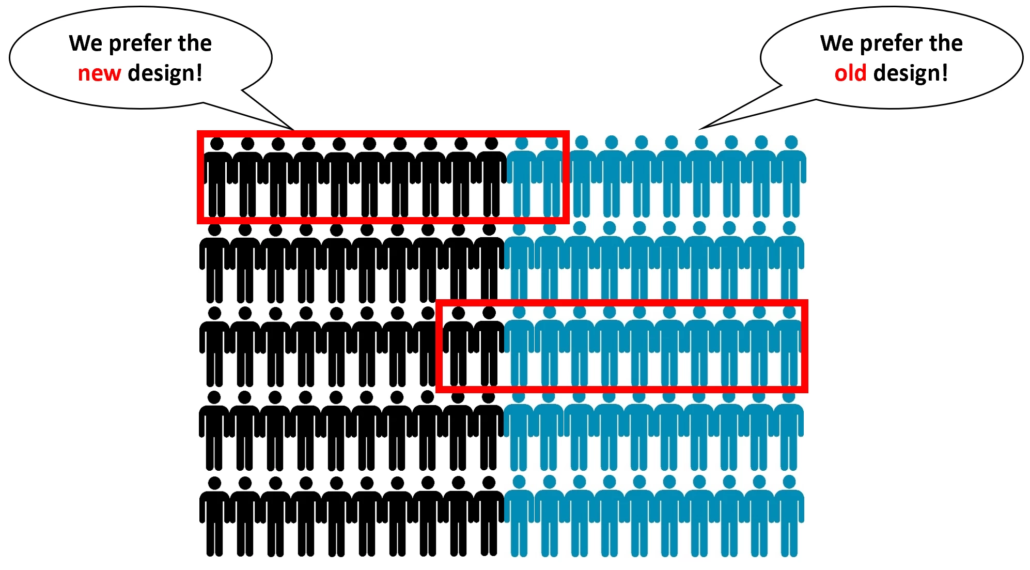

Let’s assume we have 100 people visiting a website. Half of them are more likely to buy with the old design and half are more likely to buy with the new design. You can see that it’s pretty easy to collect a sample of opinions that doesn’t tell you what is really going on. Pick the wrong 12 people, and you can either bet on a poor design or throw out a pretty good design.

Most design teams are biased in favor of a design because they created it with no other opinions involved.

Bigger sample sizes mean fewer design mistakes.

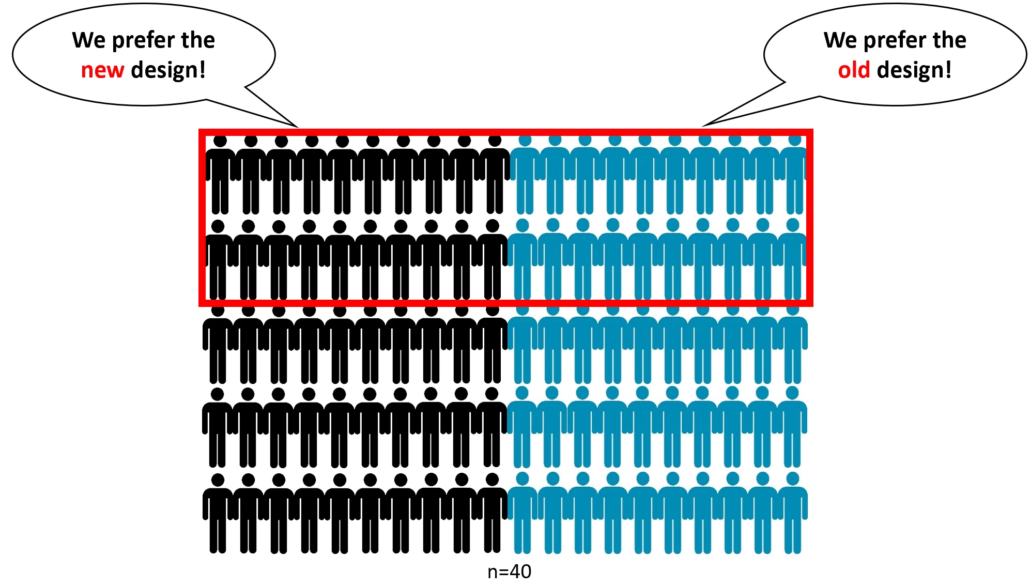

We can never ask EVERYONE if they prefer to see the old design or the new one. We have to ask a subset of the population of visitors. Scientists use the variable “n” for the size of the sample we are going to “ask”.

Here’s the problem from a Design Scientist’s point of view.

One designer? n=1

One designer, one developer, one marketing person? n=3

Add in an executive and a focus group? n=12

At n=12, we are still making mistakes in our design decisions. How big does n need to be?

In our example, a sample size of n=40 gives us more confidence that we are seeing reality.
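To put rough numbers on the example, here is a small sketch. It assumes the 100-visitor population from the illustration, split 50/50, and treats a sample as misleading when 75% or more of it favors one design. That 75% cutoff and the function name are our assumptions for illustration, not part of the original example.

```python
from math import ceil, comb


def lopsided_sample_probability(sample_size, threshold_share=0.75,
                                population=100, prefer_new=50):
    """Chance that a random sample favors ONE design by at least
    `threshold_share`, given how the whole population is split.

    People are sampled without replacement, so the number of
    "prefers new" answers follows a hypergeometric distribution.
    Assumes threshold_share > 0.5 so the two outcomes cannot overlap.
    """
    prefer_old = population - prefer_new
    total_samples = comb(population, sample_size)
    # Smallest number of votes that counts as a lopsided majority.
    cutoff = ceil(sample_size * threshold_share)

    favors_new = sum(
        comb(prefer_new, k) * comb(prefer_old, sample_size - k)
        for k in range(cutoff, sample_size + 1)
    ) / total_samples
    favors_old = sum(
        comb(prefer_old, k) * comb(prefer_new, sample_size - k)
        for k in range(cutoff, sample_size + 1)
    ) / total_samples
    return favors_new + favors_old


if __name__ == "__main__":
    for n in (12, 40):
        p = lopsided_sample_probability(n)
        print(f"n={n}: {p:.2%} chance of a 75%+ majority for one design")
```

Run as a script, it prints the chance for n=12 and for n=40. The n=40 figure comes out far smaller, which is the point of asking more people.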

Where do we find these bigger sample sizes?

A design scientist has two tasks:

- Increase the sample of people opining on her designs.

- Increase the quality of the sample of people opining on her designs.

There are three broad ways of getting more n’s to look at your design options:

- AB Testing

- Trial and Error

- Usability Studies

Usability Testing

A usability test is essentially a giant focus group. Thanks to services like UsabilityHub, you can bring 25, 50, or more people to look at your new designs and tell you which communicates better.

Cons: These panels of people are NOT necessarily customers of your product, so their input is less reliable than trial and error or an AB test.

Trial and Error using analytics

To get the “input” of your actual prospects, it makes sense to launch something. Then you use analytics to see if the change made things worse or better. However, you must be willing to roll your new design back if you find that conversions drop with the new design.

We recommend using an AB testing tool to make changes to the page and then use analytics to determine if the change was an improvement. If it was, make the change permanent. If it wasn’t, try something else.

Cons: If there is a shift in traffic, pricing, promotions, competitors, or anything else, your results can be skewed. For example, if your competition launches a sale at the same time that you launch a new design, it can look like the new design is decreasing your sales.

AB Testing

The AB test is designed to overcome the limitations of the other two approaches. It takes its sample from your actual web visitors, and it controls for changes in the marketplace because both designs run at the same time. For an explanation of how this works, see our intro to AB Testing.
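One common way to split visitors is to hash a visitor ID, so that each visitor lands in the same group on every visit. The sketch below illustrates that idea and does not describe any particular AB testing tool; the function name, experiment label and visitor IDs are hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "homepage-redesign") -> str:
    """Place a visitor in the 'old' or 'new' group with roughly equal odds.

    Hashing the visitor ID (instead of flipping a coin on every page
    view) keeps a returning visitor on the same design.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "new" if bucket < 50 else "old"


if __name__ == "__main__":
    for visitor in ("visitor-1", "visitor-2", "visitor-3"):
        print(visitor, "sees the", assign_variant(visitor), "design")
```

Because both groups are drawn from the same traffic over the same period, a competitor’s sale or a seasonal dip affects both designs alike.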

Cons: AB testing is limited by the amount of traffic you are getting and requires some developer support.
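The traffic limit can be made concrete with a pooled two-proportion z-test, which is one standard way (among several) to judge an AB test. The visitor and conversion counts below are made up for illustration, and the function name is our own.

```python
from math import erfc, sqrt


def ab_test_p_value(visitors_a, conversions_a, visitors_b, conversions_b):
    """Two-sided p-value from a pooled two-proportion z-test.

    A small p-value means the gap between the two conversion rates
    would be unlikely if the designs really performed the same.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        # No conversions at all (or everyone converted): nothing to compare.
        return 1.0
    z = (rate_b - rate_a) / std_err
    # For a standard normal Z, P(|Z| >= |z|) equals erfc(|z| / sqrt(2)).
    return erfc(abs(z) / sqrt(2))


if __name__ == "__main__":
    # Hypothetical numbers: the same 2.0% vs 2.5% conversion rates
    # measured on a low-traffic site and on a high-traffic site.
    print("1,000 visitors per design: p =", ab_test_p_value(1000, 20, 1000, 25))
    print("20,000 visitors per design: p =", ab_test_p_value(20000, 400, 20000, 500))
```

With the same conversion rates, the low-traffic test cannot separate the designs at the usual 0.05 threshold, while the high-traffic test can. That is why a site with little traffic may need to run a test much longer, or lean on the other two methods.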

Guaranteeing your design will outperform the old design.

If you are able to increase the number of brains involved in your web design for conversion, and can bring in the brains of actual prospects, there is no reason you should make small sample size mistakes with your website redesigns.

You can guarantee your new design will improve conversions. If your samples say a design isn’t better, you don’t use it, and you try something else.

![The Waspbarcode.com redesign process.](https://conversionsciences.com/wp-content/uploads/2015/05/Wasp-Redesign-Process_thumb1-180x180.gif)