Four Things You Can Do With an Inconclusive A/B Test

While it’s true we can learn important things from an “inconclusive” A/B test, that doesn’t mean we like inconclusive tests. Inconclusive tests occur when you put two or three good options out for an A/B test, drive traffic to these options and, meh, none of the choices is preferred by your visitors. An inconclusive result usually means one of two things:

- Our visitors like the page the way it is (we call this page the “control”), and reject our changed pages.

- Our visitors don’t seem to care whether they get the control or the changed pages.

Basically, it means we tried to make things better for our visitor, and they found us wanting. Back to the drawing board.

Teenagers have a word for this. It is a sonic mix of indecision, ambivalence, condescension and that sound your finger makes when you pet a frog. It is less committal than a shrug, less positive than a “Yes,” less negative than a “No,” and is designed to prevent any decision whatsoever from being reached.

It comes out something like, “Meh” — a word so flaccid that it doesn’t even deserve any punctuation. A period would clearly be too conclusive.

If you’ve done any testing at all, you know your traffic can give you a collective “Meh” as well. We scientists call this an inconclusive test.
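To make “inconclusive” concrete: a common way to judge a conversion test is a two-proportion z-test, and the result is usually called inconclusive when the p-value lands above a chosen threshold. The sketch below assumes a 0.05 threshold and uses made-up visitor and conversion counts. `two_proportion_z_test` is a hypothetical helper built only on Python's standard library, not the method of any particular testing tool.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both pages convert equally
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical numbers: control vs. one treatment, 5,000 visitors each
z, p = two_proportion_z_test(conv_a=250, n_a=5000, conv_b=270, n_b=5000)

# Assumed significance threshold of 0.05
verdict = "conclusive" if p < 0.05 else "inconclusive (meh)"
print(f"z = {z:.2f}, p = {p:.3f} -> {verdict}")
```

With these invented numbers the treatment converts at 5.4% against the control's 5.0%, yet p comes out around 0.37, so the test cannot tell the two apart. That is the statistical shape of “Meh.”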

Whether you’re testing ad copy, landing pages, offers, or keywords, there is nothing that will deflate a conversion testing plan more than a series of inconclusive tests, especially if your optimization program is young.

Here are some things to consider in the face of an inconclusive test. Or, if you’d like immediate help from skilled Conversion Scientists™, schedule a free conversion consultation today.

1. Add Something Really Different To The Mix

Subtlety is not the split tester’s friend. Your audience may not care if your headline is in a 16-point or 18-point font. If you’re getting frequent inconclusive tests, one of two things is going on:

- You have a great “control” that is hard to beat, or

- You’re not stretching enough
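Subtle changes tend to produce small differences, and small differences need a lot of traffic to detect. The sketch below uses the standard normal-approximation sample-size formula for comparing two proportions. The 5% baseline conversion rate, 0.05 significance level, and 80% power are assumptions chosen for illustration, and `visitors_per_variant` is a hypothetical helper.

```python
from math import ceil
from statistics import NormalDist

def visitors_per_variant(baseline, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect a lift with a two-sided test."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Hypothetical page converting at 5%, tested with small and large changes
for lift in (0.02, 0.10, 0.30):
    print(f"{lift:.0%} lift: ~{visitors_per_variant(0.05, lift):,} visitors per variant")
```

Because the required sample grows with the inverse square of the difference, detecting a 2% relative lift on this hypothetical page takes roughly 200 times as many visitors as detecting a 30% lift (about 750,000 versus about 3,800 per variant).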

Craft another treatment, something unexpected, and throw it into the mix. Consider a “well-crafted absurdity,” as Groupon did in its early days. The idea is to make the call-to-action button really big, offering something you think your audience wouldn’t want.

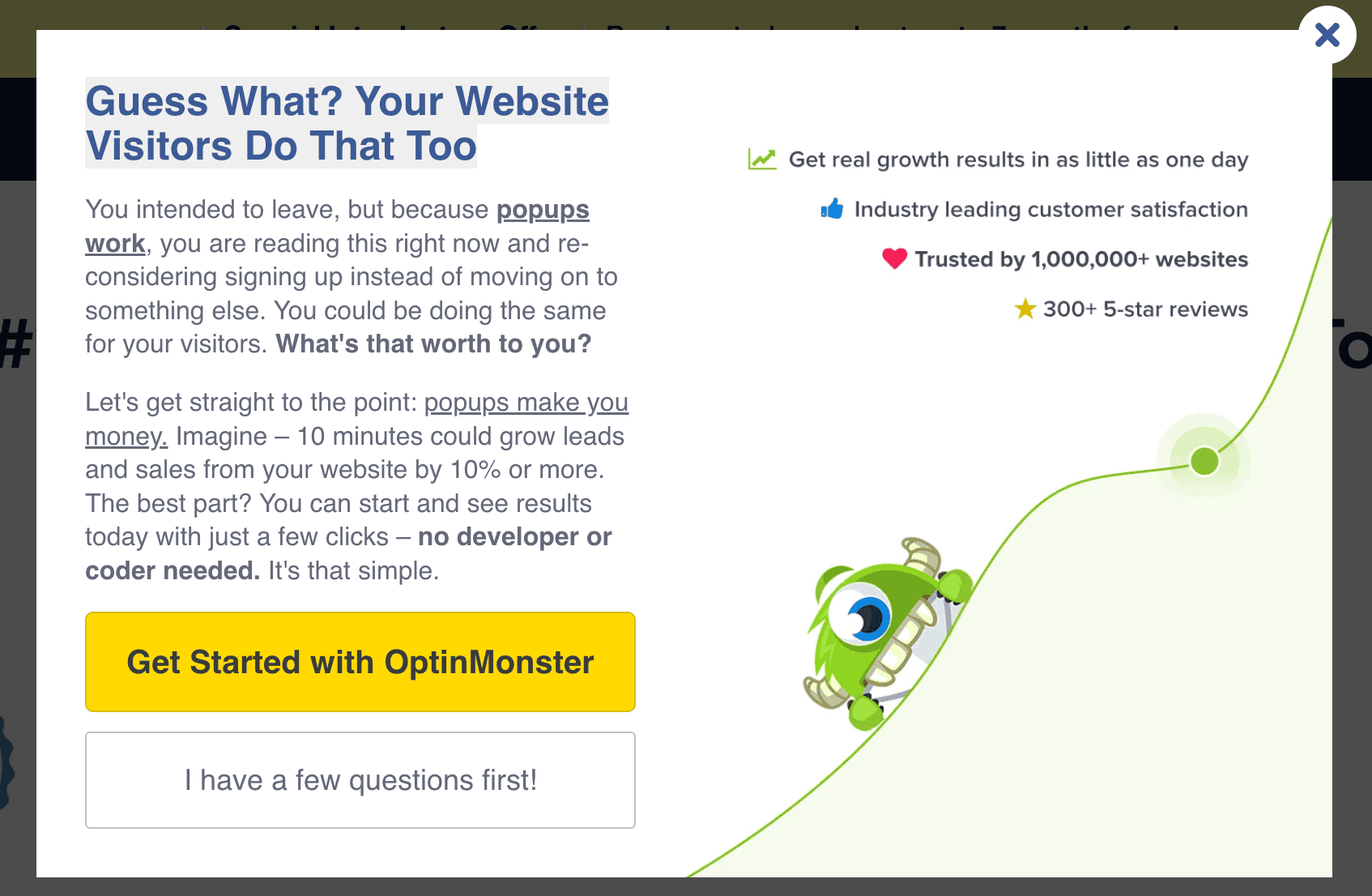

Or be a little edgy, addressing the unspoken reaction your visitor is likely having to your call to action, like OptinMonster does in this popup.

OptinMonster speaks directly to the visitor’s disdain for popups and transforms disinterest into interest.

2. Segment Your Test

We recently spent several weeks of preparation, a full day of shooting, and thousands of dollars on talent and equipment to capture some tightly controlled footage for video tests on an apparel site. This is the sort of test that is “too big to be inconclusive.” After all, video is currently a very good bet for converting more search traffic.

- Ecommerce websites are seeing up to 80% increases in conversion rates from video marketing.

- 68% of marketers report video is delivering a higher return on investment than Google Ads.

- Explainer videos are showing conversion rate increases of up to 144%.

Despite these statistics, our initial results showed that the pages with video weren’t converting significantly higher than the pages without video. However, things changed when we looked at individual segments.

New visitors liked long videos, while returning visitors liked shorter ones. Subscribers converted at much higher rates when shown a video recipe with close-ups on the products. Visitors who entered on product pages converted for one kind of video while those coming in through the home page preferred another.

It became clear that, when lumped together, one segment’s behavior was cancelling out the gains made by other segments.
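A toy example shows how segments can cancel each other out. The counts below are invented, not the apparel-site data: in this hypothetical, video helps new visitors and hurts returning ones, and the blended result is a tie.

```python
# Hypothetical counts: (conversions, visitors) per segment and variant
results = {
    "new":       {"control": (100, 2000), "video": (140, 2000)},
    "returning": {"control": (150, 1000), "video": (110, 1000)},
}

def rate(conversions, visitors):
    return conversions / visitors

# Accumulate [conversions, visitors] per variant across all segments
totals = {"control": [0, 0], "video": [0, 0]}
for segment, variants in results.items():
    for variant, (conv, n) in variants.items():
        totals[variant][0] += conv
        totals[variant][1] += n
    print(f"{segment:>9}: control {rate(*variants['control']):.1%}, "
          f"video {rate(*variants['video']):.1%}")

# The blended view hides both segment-level differences
for variant, (conv, n) in totals.items():
    print(f"overall {variant}: {rate(conv, n):.1%}")
```

Each segment shows a clear difference (5.0% vs. 7.0% for new visitors, 15.0% vs. 11.0% for returning visitors), but both pages convert 250 of 3,000 visitors overall, about 8.3%.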

How can you dice up your traffic? How do different segments behave on your site?

Your analytics package can help you explore the different segments of your traffic. If you have buyer personas, target them with your ads and create a test just for them. Here are some ways to segment:

- New vs. Returning visitors

- Buyers vs. prospects

- Which page did they land on?

- Which product line did they visit?

- Mobile vs. desktop

- Mac vs. Windows

- Members vs. non-members
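If you can export visit-level data from your analytics package, slicing it by any of the dimensions above is a simple group-by. This is a minimal sketch assuming each visit record carries a variant name, a conversion flag, and a few segment fields. The field names and the `conversion_by_segment` helper are hypothetical, and the eight records are made up.

```python
from collections import defaultdict

# Hypothetical visit records; real ones would come from an analytics export
visits = [
    {"variant": "control", "returning": False, "device": "mobile",  "converted": False},
    {"variant": "control", "returning": False, "device": "desktop", "converted": True},
    {"variant": "control", "returning": True,  "device": "mobile",  "converted": True},
    {"variant": "control", "returning": True,  "device": "desktop", "converted": False},
    {"variant": "video",   "returning": False, "device": "mobile",  "converted": True},
    {"variant": "video",   "returning": False, "device": "desktop", "converted": True},
    {"variant": "video",   "returning": True,  "device": "mobile",  "converted": False},
    {"variant": "video",   "returning": True,  "device": "desktop", "converted": False},
]

def conversion_by_segment(visits, segment_key):
    """Group visits by (segment_key(visit), variant) and return conversion rates."""
    counts = defaultdict(lambda: [0, 0])  # (segment, variant) -> [conversions, visitors]
    for v in visits:
        key = (segment_key(v), v["variant"])
        counts[key][0] += v["converted"]  # True counts as 1, False as 0
        counts[key][1] += 1
    return {key: conv / n for key, (conv, n) in counts.items()}

# New vs. returning visitors
by_visitor_type = conversion_by_segment(
    visits, lambda v: "returning" if v["returning"] else "new")
# Mobile vs. desktop
by_device = conversion_by_segment(visits, lambda v: v["device"])
```

Passing a different key function gives a different slice, so the same helper covers landing page, product line, or membership status. Keep in mind that each segment has less traffic than the whole test, so a per-segment difference still needs its own significance check before you act on it.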

3. Measure Beyond the Click

Here’s a news flash: We often see a drop in conversion rates for a treatment that has higher engagement. This may be counterintuitive. If people are spending more time on our site and clicking more — two definitions of “engagement” — then shouldn’t they find more reasons to act?

Apparently not. Higher engagement may mean that they are delaying. Higher engagement may mean that they aren’t finding what they are looking for. Higher engagement may mean that they are lost.

If you’re running your tests to increase engagement, you may be hurting your conversion rate. In this case, “Meh” may be a good thing.

In an email test we conducted for a major energy company, we wanted to know if a change in the subject line would impact sales of a smart home thermostat. Everything else about the emails and the landing pages was identical.

The two best-performing emails had very different subject lines but identical open rates and click-through rates. However, sales for one of the email treatments were significantly higher. The winning subject line had delivered the same number of clicks but had primed the visitors in some way, making them more likely to buy.

If you are measuring the success of your tests based on clicks, you may be missing the true results. Yes, it is often more difficult to measure through to purchase, subscription, or registration. However, it really does tell you which version of a test is delivering to the bottom line. Clicks are only predictive.
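The email story can be restated in numbers: two variants with identical clicks can still differ on purchases, and only the purchase comparison reveals it. The sends, clicks, and purchase counts below are invented for illustration and are not the energy company's results. `p_value` is a hypothetical helper implementing a pooled two-proportion z-test.

```python
from math import sqrt
from statistics import NormalDist

def p_value(x_a, n_a, x_b, n_b):
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (x_a + x_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (x_b / n_b - x_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Hypothetical email results: same sends and clicks, different purchases
emails = {
    "subject_a": {"sent": 20000, "clicks": 600, "purchases": 30},
    "subject_b": {"sent": 20000, "clicks": 600, "purchases": 54},
}
a, b = emails["subject_a"], emails["subject_b"]

# Compare the same two emails on clicks and on purchases
click_p = p_value(a["clicks"], a["sent"], b["clicks"], b["sent"])
purchase_p = p_value(a["purchases"], a["sent"], b["purchases"], b["sent"])
print(f"clicks:    p = {click_p:.2f}")
print(f"purchases: p = {purchase_p:.3f}")
```

Judged on clicks alone (p = 1.0), this hypothetical test is a textbook “Meh”; judged on purchases, subject B is the clear winner (p below 0.01).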

4. Print A T-shirt That Says, “My Control Is Unbeatable”

Ultimately, you may just have to live with your inconclusive tests.

Every test tells you something about your audience. If your audience didn’t care how big the product image was, you’ve learned that they may care more about changes in copy. If they don’t know the difference between 50% off and $15.00 off, test offers that aren’t price-oriented.

Make sure that the organization knows you’ve learned something, and celebrate the fact that you have an unbeatable control. Don’t let “Meh” slow your momentum. Keep plugging away until you find the unexpected test that gives you a big win.

Need help making your tests more conclusive? Explore our turnkey CRO services.

This post was originally published on March 1, 2016, and was adapted from an article that appeared on Search Engine Land. It has been updated with current research and examples.
